This is the fourth blog post in a series on using Oracle Text, Oracle R Enterprise and Oracle Data Mining. Make sure to check out the previous blog posts, as each one builds upon the ones before it.

In this blog post, I will have an initial look at how you can use Oracle Text to perform document classification. In the next blog post in the series, I will look at how you can use Oracle Data Mining with Oracle Text to perform classification.

Document classification using Oracle Text is a well-trodden field and there is lots of material out there to assist you. This blog post will look at the core steps you need to follow and how Oracle Text can help you classify your documents or text objects in a table.

When you use Oracle Text for document classification, the simplest approach is to use 'Rule-based Classification'. With this approach you define a set of rules that, when applied to a document, determine the classification that will be assigned to it.

There is a little bit of setup and configuration needed to make this happen. This includes the following.

- Create a table that will store your documents. See my previous blog posts in the series for an example of one that is used to store the text from webpages.

- Create a rules table. This will contain the classification label and a set of rules that Oracle Text will use to determine the classification to assign to a document. The rules are written in a format similar to what you might see in the WHERE clause of a SELECT statement. You will need to follow the rules and syntax of CTXRULE to make sure your rules fire correctly.

- Create a CTXRULE index on the rules table you created in the previous step.

- Create a table that will be a link table between the table that contains your documents and the table that contains your categories.

When you have these steps completed you can start classifying your documents. The following example illustrates these steps using the text documents I set up in my previous blog posts.

Here is the structure of my documents table. I have also created an Oracle Text CTXSYS.CONTEXT index on the DOC_TEXT attribute.

    create table MY_DOCUMENTS (
      doc_pk        NUMBER(10) PRIMARY KEY,
      doc_title     VARCHAR2(100),
      doc_extracted DATE,
      data_source   VARCHAR2(200),
      doc_text      CLOB );
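The CONTEXT index mentioned above is not shown in this post. A minimal sketch of how it could be created is given here. The index name `my_documents_ot_idx` is an assumption made for illustration, and default index preferences are assumed.

```sql
-- Sketch only: the index name is hypothetical, default preferences are used.
create index my_documents_ot_idx on my_documents(doc_text)
indextype is ctxsys.context;
```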

The next step is to create a table that contains our categories and rules. The structure of this table is very simple, and the following is an example.

    create table DOCUMENT_CATEGORIES (
      doc_cat_pk    NUMBER(10) PRIMARY KEY,
      doc_category  VARCHAR2(40),
      doc_cat_query VARCHAR2(2000) );

    create sequence doc_cat_seq;

Now we can create the table that will store the identified document categories/classifications for each of our documents. This is a link table that contains the primary keys from the MY_DOCUMENTS and the DOCUMENT_CATEGORIES tables.

    create table MY_DOC_CAT (
      doc_pk     NUMBER(10),
      doc_cat_pk NUMBER(10) );

Queries for CTXRULE are similar to those of CONTAINS queries. Basic phrasing within quotes is supported, as are the following CONTAINS operators: ABOUT, AND, NEAR, NOT, OR, STEM, WITHIN, and THESAURUS. The following statements contain my rules.

    insert into document_categories values
      (doc_cat_seq.nextval, 'OAA', 'Oracle Advanced Analytics');

    insert into document_categories values
      (doc_cat_seq.nextval, 'Oracle Data Mining', 'ODM or Oracle Data Mining');

    insert into document_categories values
      (doc_cat_seq.nextval, 'Oracle Data Miner', 'ODMr or Oracle Data Miner or SQL Developer');

    insert into document_categories values
      (doc_cat_seq.nextval, 'R Technologies', 'Oracle R Enterprise or ROracle or ORAAH or R');

We are now ready to create the Oracle Text CTXRULE index.

create index doc_cat_idx on document_categories(doc_cat_query) indextype is ctxsys.ctxrule;
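Before classifying real documents, it can be useful to check that the rules fire as intended by running MATCHES against a literal piece of text. The following is a minimal sketch. The sample sentence is made up for illustration, and I would expect it to be picked up by the 'Oracle Data Mining' rule.

```sql
-- Sketch only: the sample text is a made-up test string.
select doc_category
from   document_categories
where  matches(doc_cat_query,
               'A short note on building models with Oracle Data Mining') > 0;
```

If you add or change rules after the index has been created, the CTXRULE index needs to be synchronised before the new rules take effect, for example with `exec ctx_ddl.sync_index('doc_cat_idx');` (assuming the index was not created to sync on commit).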

Our next step is to apply the rules and generate the categories/classifications. We have two scenarios to deal with here. The first is how to do this for our existing records, and the second is how to do this on an ongoing basis as new documents get loaded into the MY_DOCUMENTS table.

For the first scenario, where the documents already exist in our table, we can use a PL/SQL block like the following.

    DECLARE
      v_document MY_DOCUMENTS.DOC_TEXT%TYPE;
    BEGIN
      -- Loop over every existing document
      for doc in (select doc_pk, doc_text from my_documents) loop
        v_document := doc.doc_text;
        -- Find every rule (category) that matches the document text
        for c in (select doc_cat_pk from document_categories
                  where matches(doc_cat_query, v_document) > 0 )
        loop
          -- Record the document/category pair in the link table
          insert into my_doc_cat values (doc.doc_pk, c.doc_cat_pk);
        end loop;
      end loop;
      -- No commit is issued here; commit when you are happy with the results
    END;
    /

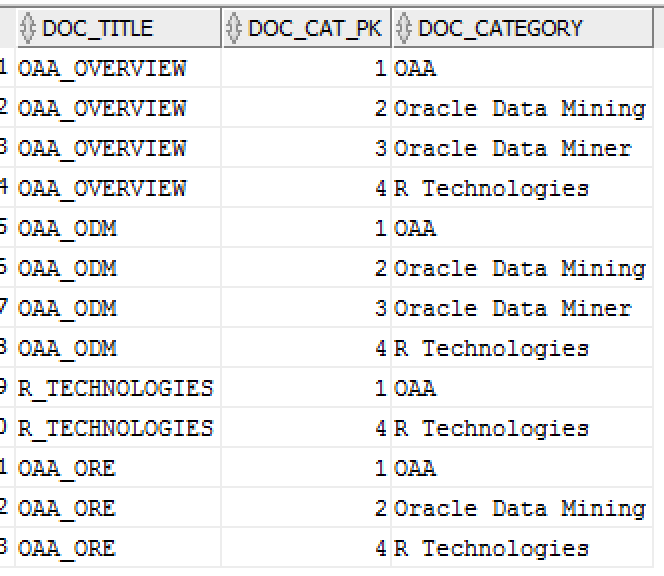

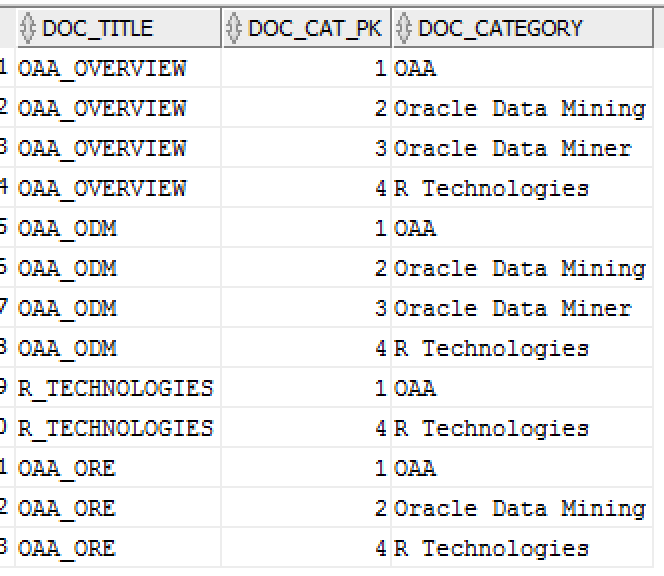

Let us have a look at the categories/classifications that were generated.

    select a.doc_title, c.doc_cat_pk, b.doc_category
    from   my_documents a,
           document_categories b,
           my_doc_cat c
    where  a.doc_pk = c.doc_pk
    and    c.doc_cat_pk = b.doc_cat_pk
    order by a.doc_pk, c.doc_cat_pk;
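If you have more than a handful of documents, a summary per category can be easier to read than the full listing. The following sketch counts the documents assigned to each category, using only the tables defined earlier in this post. The column alias `num_documents` is just an illustrative name.

```sql
-- Sketch only: number of documents assigned to each category.
select b.doc_category, count(*) as num_documents
from   document_categories b
join   my_doc_cat c on c.doc_cat_pk = b.doc_cat_pk
group by b.doc_category
order by num_documents desc;
```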

We can see that the categorisation/classification gives us the results we would have expected for these documents/web pages.

Now we can look at how to generate these categories/classifications on an ongoing basis. For this we will need a database trigger on the MY_DOCUMENTS table. Something like the following should do the trick.

    CREATE or REPLACE TRIGGER t_cat_doc
      before insert on MY_DOCUMENTS
      for each row
    BEGIN
      -- Find every rule (category) that matches the new document text
      for c in (select doc_cat_pk from document_categories
                where matches(doc_cat_query, :new.doc_text) > 0)
      loop
        insert into my_doc_cat values (:new.doc_pk, c.doc_cat_pk);
      end loop;
    END;
    /
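To check that the trigger fires, you could insert a test document and then look at what was written to the link table. This is a minimal sketch. The primary key value 999 and the sample text are made up for illustration, and the rollback removes the test rows again.

```sql
-- Sketch only: the key value and the text are hypothetical test data.
insert into my_documents (doc_pk, doc_title, doc_extracted, data_source, doc_text)
values (999, 'Trigger test', sysdate, 'manual test',
        'Getting started with Oracle Data Miner in SQL Developer');

-- Show the categories the trigger assigned to the test document
select doc_pk, doc_cat_pk
from   my_doc_cat
where  doc_pk = 999;

-- Undo the test insert and the rows added by the trigger
rollback;
```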

At this point we have worked through how to build and use Oracle Text to perform rule-based document categorisation/classification.

In addition to this type of classification, Oracle Text can also use some machine learning algorithms to classify documents. These include Decision Trees, Support Vector Machines and Clustering. It is important to note that these are not the machine learning algorithms that come as part of Oracle Data Mining. Look out for my other blog posts that cover these topics.

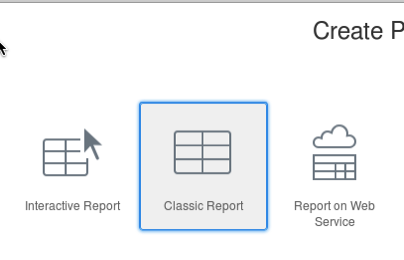


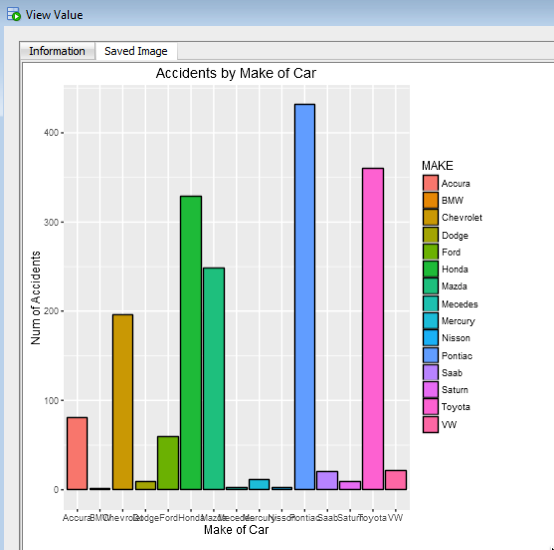

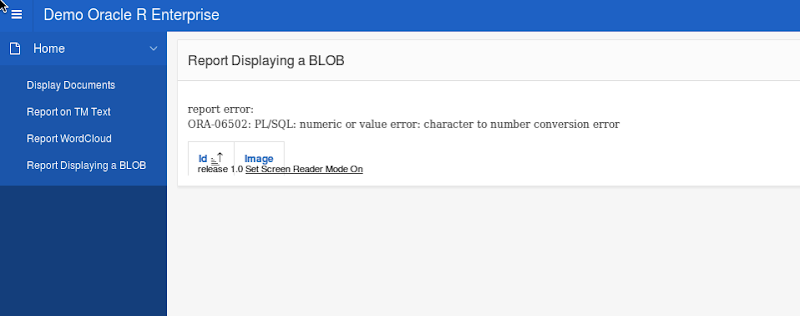

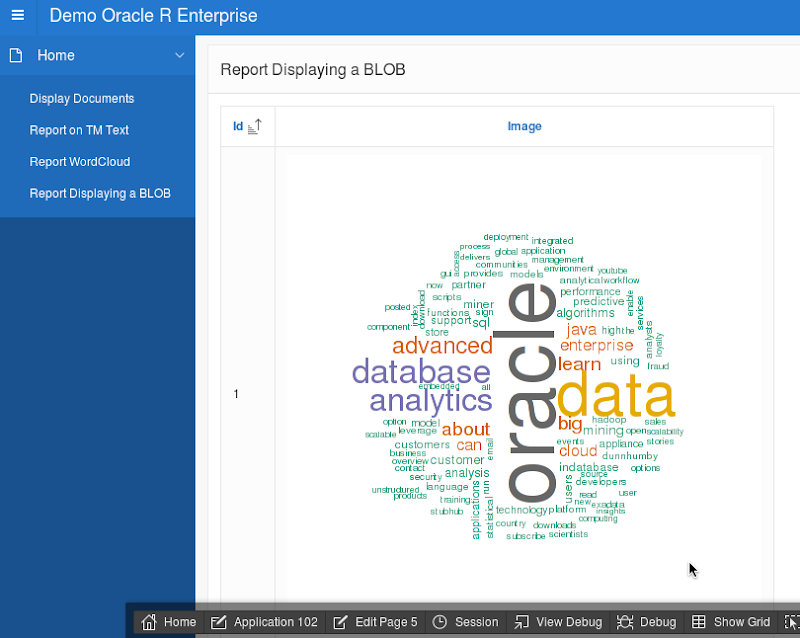

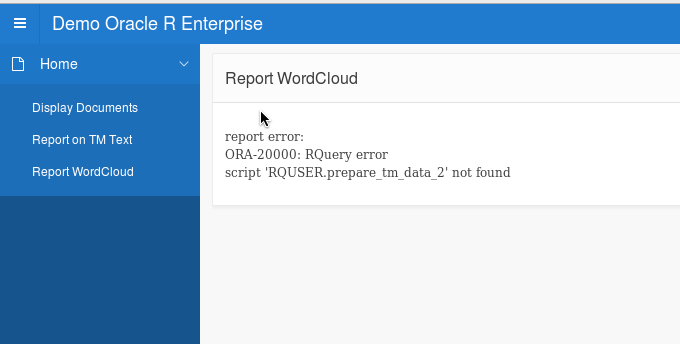

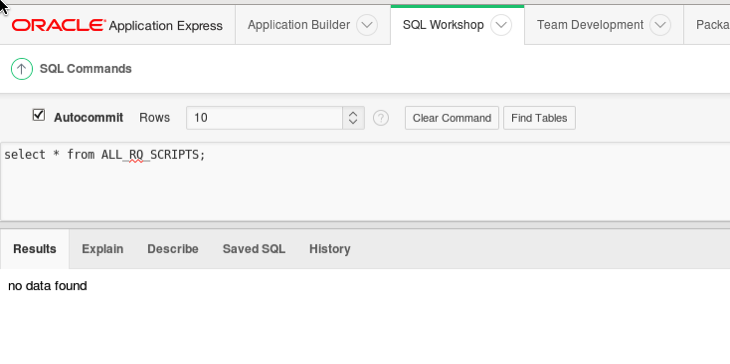

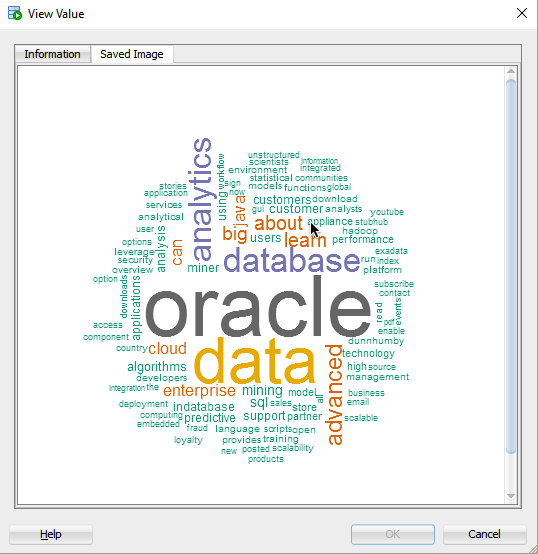
