As 2016 draws to a close I like to look back at what I have achieved over the year. Most of the following achievements are based on my work with the Oracle User Group community. I have some other achievements that are related to the day jobs (yes, I have multiple day jobs), but I won't go into those here.

As you can see from the following, 2016 was another busy year. There was lots of writing, which I really enjoy and will be continuing in 2017. As they say, watch this space for writing news in 2017.

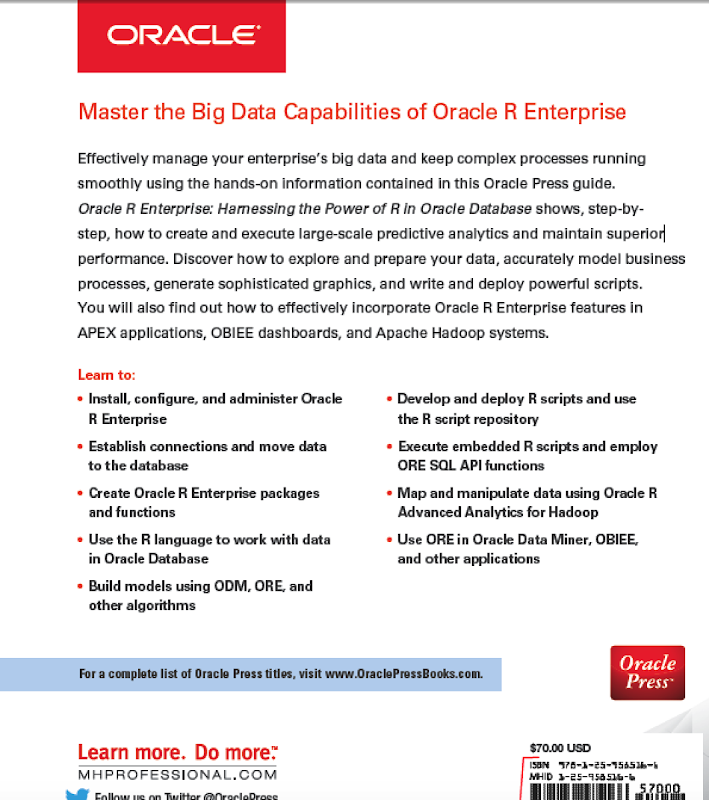

Books

Yes, 2016 was a busy year for writing. Most of the latter half of 2015 and the first half of 2016 was taken up with writing two books. Yes, two books. One of them was on Oracle R Enterprise, and it complements my previously published book on Oracle Data Mining. I now have books that cover both components of the Oracle Advanced Analytics Option.

I also co-wrote a book with legends of the Oracle community: Arup Nanda, Martin Widlake, Heli Helskyaho and Alex Nuijten.

More news coming in 2017.

Blog Posts

One of the things I really enjoy doing is playing with various features of Oracle and then writing blog posts about them. While writing the books I had to cut back on writing blog posts. I was lucky to be part of the 12.2 Database beta this year, and over the past few weeks I've been playing with 12.2 in the cloud. I've already written a blog post or two on this and I also have an OTN article on it coming out soon. There will be more 12.2 analytics-related blog posts in 2017.

In 2016 I have written 55 blog posts (including this one). This number is a little lower than in previous years. I'll blame the book writing for this. But more posts are in the works for 2017.

Articles

In 2016 I've written articles for OTN and for Toad World. These included:

- OTN: Oracle Advanced Analytics: Kicking the Tires/Tyres

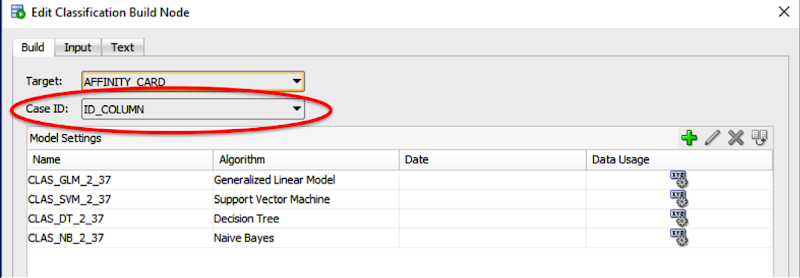

- Kicking the Tyres of Oracle Advanced Analytics Option - Using SQL and PL/SQL to Build an Oracle Data Mining Classification Model

- Kicking the Tyres of Oracle Advanced Analytics Option - Overview of Oracle Data Miner and Build your First Workflow

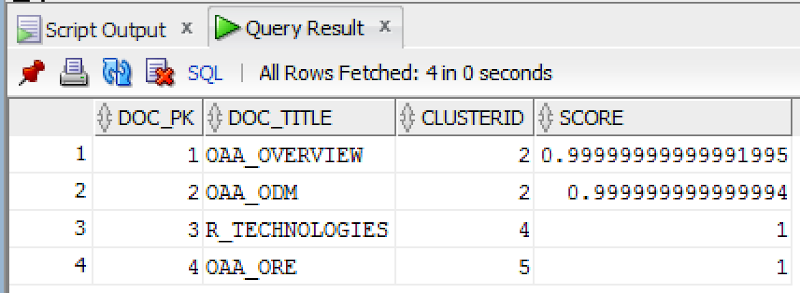

- Kicking the Tyres of Oracle Advanced Analytics Option - Using SQL to score/label new data using Oracle Data Mining Models

- Setting up and configuring RStudio on the Oracle 12.2 Database Cloud Service

- Introduction to Oracle R Enterprise

- ORE 1.5 - User Defined R Scripts

Conferences

- January - Yes SQL Summit, NoCOUG Winter Conference, Redwood City, CA, USA **

- January - BIWA Summit, Oracle HQ, Redwood City, CA, USA **

- March - OUG Ireland, Dublin, Ireland

- June - KScope, Chicago, USA (3 presentations)

- September - Oracle Open World (part of EMEA ACEs session) **

- December - UKOUG Tech16 & APPs16

** For these conferences the Oracle ACE Director programme funded the flights and hotels. I paid for all other expenses, and for the other conferences, out of my own pocket.

OUG Activities

I'm involved in many different roles in the user group. The UKOUG also covers Ireland (incorporating OUG Ireland), and my activities within the UKOUG included the following during 2016:

- Editor of Oracle Scene: We produced 4 editions in 2016. Thank you to all who contributed and wrote articles.

- Created the OUG Ireland Meetup. We had our first meeting in October. Our next meetup will be in January.

- OUG Ireland Committee member of TECH SIG and BI & BA SIG.

- Committee member of the OUG Ireland 2 day Conference 2016.

- Committee member of the OUG Ireland conference 2017.

- KScope17 committee member for the Data Visualization & Advanced Analytics track.

I'm sure I've forgotten a few things; I usually do. But this gives you a taste of some of what I got up to in 2016.

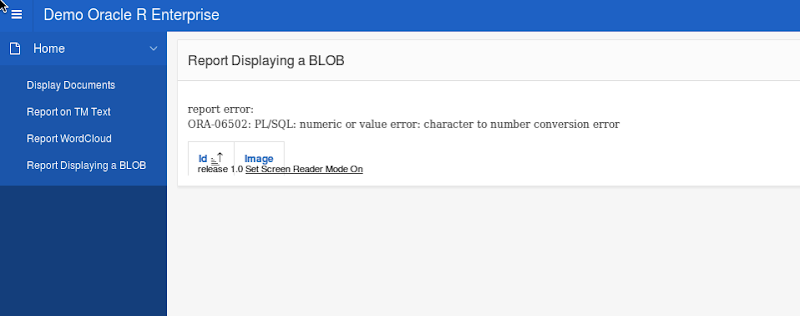

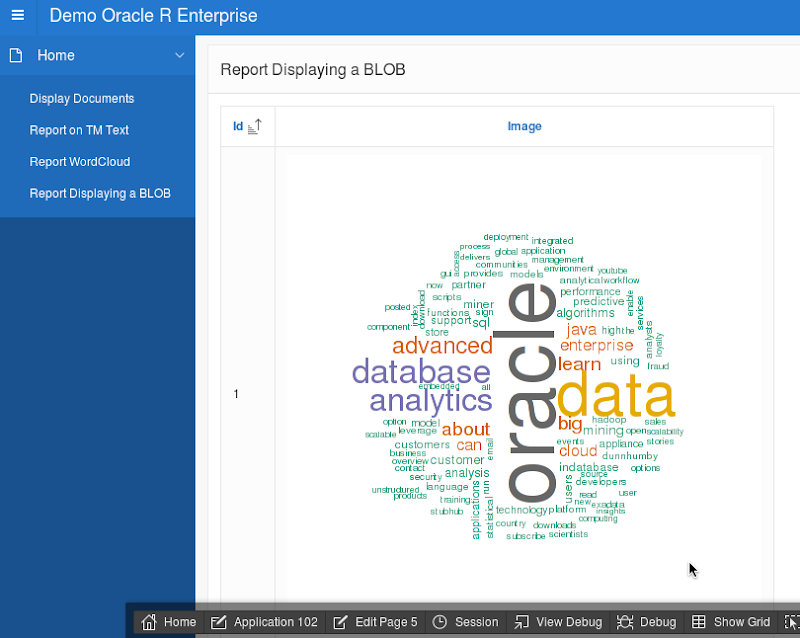

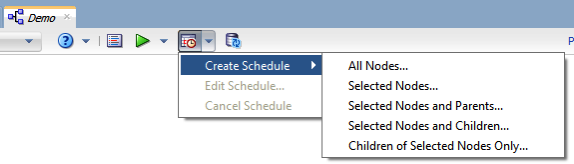
