A project that I've been working on for a while now involves the use of Oracle Text, Oracle R Enterprise and Oracle Data Mining. Oracle Text comes with your Oracle Database licence. Oracle R Enterprise and Oracle Data Mining are part of the Oracle Advanced Analytics (extra cost) option.

Over the course of 4 or maybe 5 blog posts I will show how these products can work together to help you gain a greater insight into your data, particularly when part of that data consists of large text items such as free format text and documents in various forms (e.g. HTML, XML, PDF, MS Word).

Unfortunately I cannot show you examples from the actual project I've been working on (and still am, from time to time). But what I can do is to show you how products and components can work together.

In this blog post I will just do some data setup. As with all project scenarios there can be many ways of performing the same tasks. Some might be better than others. But what I will be showing you is for demonstration purposes.

The scenario: For this blog post I want to extract the text from some webpages and store it in a table in my schema. I then want to use Oracle Text to search the text from these webpages.

Schema setup: We need to create a table that will store the text from the webpages. We also want to create an Oracle Text index so that this text is searchable.

drop sequence my_doc_seq;

create sequence my_doc_seq;

drop table my_documents;

create table my_documents (

doc_pk number(10) primary key,

doc_title varchar2(100),

doc_extracted date,

data_source varchar2(200),

doc_text clob);

create index my_documents_ot_idx on my_documents(doc_text)

indextype is CTXSYS.CONTEXT;

In the table we have a number of descriptive attributes and then a CLOB for storing the website text. We will only be storing the website text and not the HTML document (more on that later). To make the website text in the DOC_TEXT attribute searchable, we need to create an Oracle Text index of type CONTEXT.

There are a few challenges with using this type of index. For example, when you insert a new record or update the DOC_TEXT attribute, the new text will not be reflected in the index instantly, as we are used to with traditional indexes. Instead you have to decide when you want the index to be updated. For example, if you would like the index to be updated after each commit then you can create the index using the following.

create index my_documents_ot_idx on my_documents(doc_text)

indextype is CTXSYS.CONTEXT

parameters ('sync (on commit)');

Depending on the number of documents being committed to the DB, this might not be for you, as every commit then carries the cost of an index sync. You need to find the balance. Alternatively, you could have the index updated on a schedule by passing an interval to 'sync' in the above command, or you could use DBMS_JOB to schedule the update yourself.
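As a rough sketch of the interval option, the index could be recreated as shown here. The hourly interval is only an assumption for illustration, so pick whatever suits your data volumes. As far as I know the index owner also needs the CREATE JOB privilege for this form of sync.

```sql
-- Sketch only: recreate the index so that it syncs on a schedule.
-- The one-hour interval ("SYSDATE+1/24") is an assumed value for illustration.
drop index my_documents_ot_idx;

create index my_documents_ot_idx on my_documents(doc_text)
indextype is CTXSYS.CONTEXT
parameters ('sync (every "SYSDATE+1/24")');
```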

To sync the index manually (or from a DBMS_JOB job), assuming we used the first 'create index' statement, we would need to run the following.

EXEC CTX_DDL.SYNC_INDEX('my_documents_ot_idx');

The SYNC_INDEX procedure just adds the new documents to the index. This can, over time, lead to some fragmentation of the index, which will then need to be re-organised on a semi-regular basis. Perhaps you can schedule this to happen every night, or once a week, or whatever makes sense to you.

BEGIN

CTX_DDL.OPTIMIZE_INDEX('my_documents_ot_idx','FULL');

END;
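If you want the optimize step to run every night, one option is a scheduler job. The sketch below uses DBMS_SCHEDULER rather than DBMS_JOB. The job name and the 2am start time are assumptions made up for this example, and the schema needs the CREATE JOB privilege.

```sql
-- Sketch only: run a FULL optimize of the Oracle Text index every night.
-- OPTIMIZE_MY_DOCS_IDX is a hypothetical job name; 02:00 is an assumed time.
begin
  dbms_scheduler.create_job(
    job_name        => 'OPTIMIZE_MY_DOCS_IDX',
    job_type        => 'PLSQL_BLOCK',
    job_action      => 'begin ctx_ddl.optimize_index(''my_documents_ot_idx'', ''FULL''); end;',
    start_date      => systimestamp,
    repeat_interval => 'FREQ=DAILY; BYHOUR=2; BYMINUTE=0; BYSECOND=0',
    enabled         => true);
end;
/
```

The same pattern could be used for CTX_DDL.SYNC_INDEX if you prefer to sync on your own schedule.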

(I could talk a lot more about setting up some basics of Oracle Text, the indexes, etc. But I'll leave that for another day or you can read some of the many blog posts that already exist on the topic.)

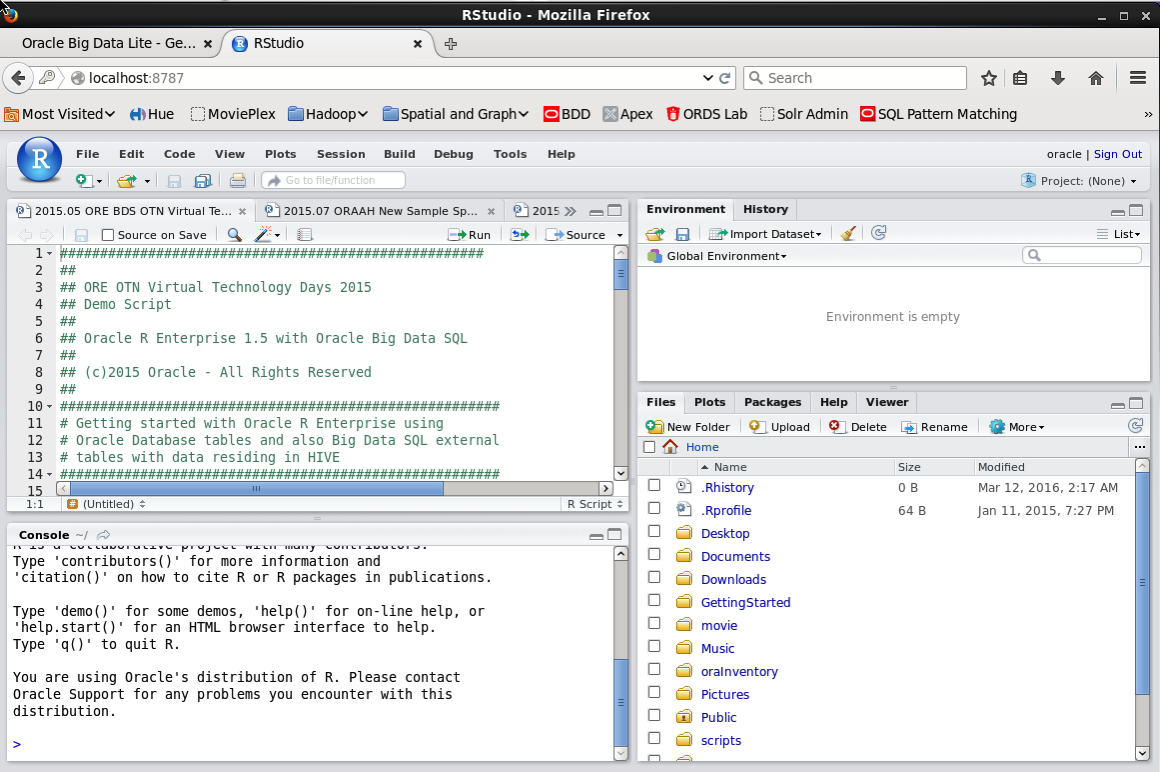

Extracting text from a webpage using R: Some time ago I wrote a blog post on using some of the text mining features and packages in R to produce a word cloud based on some of the Oracle Advanced Analytics webpages.

I'm going to use the same webpages and some of the same code/functions/packages here.

The first task you need to do is to get your hands on the 'htmlToText' function.

You can download the htmlToText function from GitHub. This function requires the 'RCurl' and 'XML' R packages, so you may need to install these.

I also use the str_replace_all function ('stringr' R package) to remove some of the HTML that remains, to remove some special quotes and to replace any occurrences of '&' with 'and'.

# Load the function and required R packages

source("c:/app/htmltotext.R")

library(stringr)

# Characters to strip: line breaks, tabs, straight quotes and curly double quotes

strip_chars <- "[\r\n\t\"\'\u201C\u201D]"

# For each page: extract the text, strip the unwanted characters, then replace '&' with 'and'

data1 <- str_replace_all(htmlToText("http://www.oracle.com/technetwork/database/options/advanced-analytics/overview/index.html"), strip_chars, "")

data1 <- str_replace_all(data1, "&", "and")

data2 <- str_replace_all(htmlToText("http://www.oracle.com/technetwork/database/options/advanced-analytics/odm/index.html"), strip_chars, "")

data2 <- str_replace_all(data2, "&", "and")

data3 <- str_replace_all(htmlToText("http://www.oracle.com/technetwork/database/database-technologies/r/r-technologies/overview/index.html"), strip_chars, "")

data3 <- str_replace_all(data3, "&", "and")

data4 <- str_replace_all(htmlToText("http://www.oracle.com/technetwork/database/database-technologies/r/r-enterprise/overview/index.html"), strip_chars, "")

data4 <- str_replace_all(data4, "&", "and")

We now have the text extracted and cleaned up.

Create a data frame to contain all our data: Now that we have the text extracted, we can prepare the other data items we need to insert the data into our table ('my_documents'). The first step is to construct a data frame to contain all the data.

data_source = c("http://www.oracle.com/technetwork/database/options/advanced-analytics/overview/index.html",

"http://www.oracle.com/technetwork/database/options/advanced-analytics/odm/index.html",

"http://www.oracle.com/technetwork/database/database-technologies/r/r-technologies/overview/index.html",

"http://www.oracle.com/technetwork/database/database-technologies/r/r-enterprise/overview/index.html")

doc_title = c("OAA_OVERVIEW", "OAA_ODM", "R_TECHNOLOGIES", "OAA_ORE")

doc_extracted = Sys.Date()

data_text <- c(data1, data2, data3, data4)

my_docs <- data.frame(doc_title, doc_extracted, data_source, data_text)

Insert the data into our database table: With the data in our data frame (my_docs) we can now insert it into our database table. There are a number of ways of doing this in R. What I'm going to show you here is how to do it using Oracle R Enterprise (ORE). The thing with ORE is that there is no explicit functionality for inserting and updating records in a database table. What you need to do is to construct the statement, in my case a call to a procedure that performs the insert, and then use ore.exec to execute this statement in the database.

library(ORE)

ore.connect(user="ora_text", password="ora_text", host="localhost", service_name="PDB12C",

port=1521, all=TRUE)

for(i in 1:nrow(my_docs)) {

insert_stmt <- "BEGIN insert_tab_document ('"

insert_stmt <- paste(insert_stmt, my_docs[i,]$doc_title, sep="")

insert_stmt <- paste(insert_stmt, "', '", my_docs[i,]$doc_extracted, "'", sep="")

insert_stmt <- paste(insert_stmt, ", '", my_docs[i,]$data_source, sep="")

insert_stmt <- paste(insert_stmt, "', '", my_docs[i,]$data_text, "');", " END;", sep="")

ore.exec(insert_stmt)

}

ore.exec("commit")
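The loop above calls a procedure named insert_tab_document, whose definition is not listed in this post. A minimal sketch of what such a procedure could look like is given here. The parameter names, the use of my_doc_seq for the primary key and the 'YYYY-MM-DD' date mask (the format R produces when a Date is pasted into a string) are all assumptions on my part, not the actual code.

```sql
-- Sketch only: a possible insert_tab_document procedure.
-- Parameter names and the date mask are assumptions for illustration.
create or replace procedure insert_tab_document (
  p_doc_title     in varchar2,
  p_doc_extracted in varchar2,   -- date passed as text, e.g. '2015-06-01'
  p_data_source   in varchar2,
  p_doc_text      in clob)
as
begin
  insert into my_documents (doc_pk, doc_title, doc_extracted, data_source, doc_text)
  values (my_doc_seq.nextval,
          p_doc_title,
          to_date(p_doc_extracted, 'YYYY-MM-DD'),
          p_data_source,
          p_doc_text);
end;
/
```

Taking the date as text with an explicit mask avoids relying on the session's NLS_DATE_FORMAT. Note also that the text is passed as a string literal inside a PL/SQL block, so very long pages may run into the PL/SQL literal size limit.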

You can now view the inserted webpage text using R or using SQL.
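On the SQL side, something along these lines should let you check what was loaded and try a first Oracle Text search. The search term 'analytics' is just an assumed example, and the sync step is only needed if the index was created without 'sync (on commit)'.

```sql
-- List the loaded documents and the length of each extracted text
select doc_pk, doc_title, doc_extracted, dbms_lob.getlength(doc_text) as text_length
from   my_documents
order  by doc_pk;

-- Bring the Oracle Text index up to date with the newly inserted rows
exec ctx_ddl.sync_index('my_documents_ot_idx');

-- Search the indexed text; 'analytics' is an assumed example term
select doc_pk, doc_title, score(1) as relevance
from   my_documents
where  contains(doc_text, 'analytics', 1) > 0
order  by score(1) desc;
```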

In my next blog post in this series, I will look at how you can use the ORE embedded features to read and process this data.

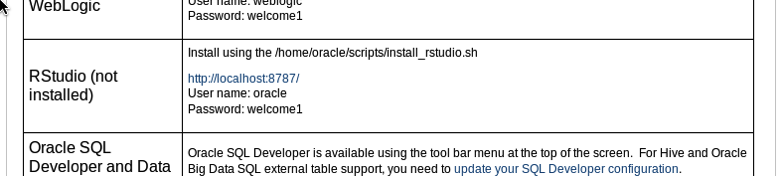

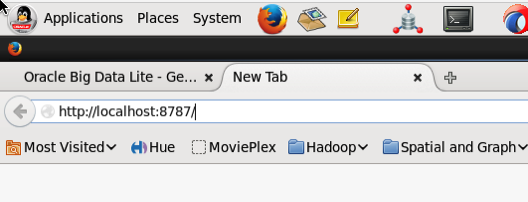

