This is the third blog post in a series on using Oracle Text, Oracle R Enterprise and Oracle Data Mining. Check out the first and second blog posts of the series, as the data used in this blog post was extracted, processed and stored in a database table there.

This blog post is divided into 3 parts. The first part builds on what was covered in the previous blog post and expands the in-database ORE R script to include more data processing. The second part looks at how you can use SQL to call our in-database ORE R script, and the third part shows how to include it in custom applications, for example using APEX.

Part 1 - Expanding our in-database ORE R script for Text Mining

In my previous blog post we created an ORE user-defined R script, stored in the database, and used it to perform text mining and create a word cloud. However, the text to be mined was processed beforehand and then passed into the script.

But what if we want a scenario where we simply say: here is the table that contains the data, go ahead and process it? To do this, we need to expand our user-defined R script to include a loop that merges the webpage text into one variable. The following is the new version of our ORE user-defined R script.

```r
ore.scriptCreate("prepare_tm_data_2", function (local_data) {
  library(tm)
  library(SnowballC)
  library(wordcloud)

  # merge the text of all the webpages into one character string
  tm_data <- ""
  for (i in 1:nrow(local_data)) {
    tm_data <- paste(tm_data, local_data[i,]$DOC_TEXT, sep=" ")
  }

  txt_corpus <- Corpus(VectorSource(tm_data))

  # data clean up
  tm_map <- tm_map(txt_corpus, stripWhitespace)                # remove white space
  tm_map <- tm_map(tm_map, removePunctuation)                  # remove punctuation
  tm_map <- tm_map(tm_map, removeNumbers)                      # remove numbers
  tm_map <- tm_map(tm_map, removeWords, stopwords("english"))  # remove stop words
  tm_map <- tm_map(tm_map, removeWords, c("work", "use", "java", "new", "support"))  # remove custom words

  # prepare matrix of words and frequency counts
  Matrix <- TermDocumentMatrix(tm_map)  # terms in rows
  matrix_c <- as.matrix(Matrix)
  freq <- sort(rowSums(matrix_c))       # frequency data
  res <- data.frame(words=names(freq), freq)

  # draw the word cloud; this image is what is returned to the caller
  wordcloud(res$words, res$freq, max.words=100, min.freq=3, scale=c(7,.5),
            random.order=FALSE, colors=brewer.pal(8, "Dark2"))
})
```
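To see what the script computes before it draws anything, here is a base-R sketch that roughly mirrors its steps without needing the tm or wordcloud packages. It is an approximation, not the tm implementation: the function word_freq and the sample text are hypothetical, the small stop-word list is a made-up stand-in for stopwords("english"), and the lowercasing and three-character minimum mimic the defaults of tm's term-frequency settings (tolower = TRUE, wordLengths = c(3, Inf)).

```r
# Base-R sketch (no tm needed) approximating what prepare_tm_data_2 computes
# before drawing the word cloud. word_freq is a hypothetical helper and the
# stop-word list is a tiny made-up stand-in for stopwords("english").
word_freq <- function(texts,
                      extra_stopwords = c("work", "use", "java", "new", "support")) {
  txt <- paste(texts, collapse = " ")           # merge all rows into one document
  txt <- gsub("[[:punct:]]+", "", txt)          # like removePunctuation
  txt <- gsub("[[:digit:]]+", "", txt)          # like removeNumbers
  words <- strsplit(txt, "[[:space:]]+")[[1]]   # tokenise on white space
  words <- words[nzchar(words)]                 # drop empty tokens
  stop_list <- c("the", "in", "and", "with", "a", "of", "to", extra_stopwords)
  words <- words[!(words %in% stop_list)]       # case-sensitive, like removeWords
  words <- tolower(words)                       # TermDocumentMatrix lowercases by default
  words <- words[nchar(words) >= 3]             # ...and drops terms under 3 characters
  freq <- sort(table(words), decreasing = TRUE) # largest counts first
  data.frame(words = names(freq), freq = as.vector(freq),
             stringsAsFactors = FALSE)
}

# Hypothetical sample rows standing in for MY_DOCUMENTS$DOC_TEXT
docs <- c("Oracle R Enterprise runs R in the database, 2 times faster!",
          "Text mining with Oracle Text and Oracle R Enterprise.")
res <- word_freq(docs)
print(res)
```

Because ore.tableApply hands the script's function the table as an ordinary R data.frame, this kind of check can be run locally on a few rows before the script is stored with ore.scriptCreate.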

To call this R script using embedded R execution, we can use the ore.tableApply function. The parameter to our new R script is now an ORE data frame, which can be a table in the database or a subset of a table. This means all the data processing occurs on the Oracle Database server: no data is passed to the client and no processing is performed on the client. The only data passed back to the client is the result of the function, which is the word cloud image.

```r
res <- ore.tableApply(MY_DOCUMENTS, FUN.NAME="prepare_tm_data_2")
res
```

Part 2 - Using SQL to perform R Text Mining

Another way you can call this ORE user-defined R function is by using SQL. Yes, we can use SQL to call R code and produce an R graphic. When we do this, the R graphic is returned as a BLOB, which makes it easy to view and to include in your applications, such as APEX.

To call our ORE user-defined R function, we can use the rqTableEval SQL function. You only really need to set two of the parameters to this function. The first parameter is a SELECT statement that defines the data set to be passed to the function. This is similar to what I showed above using the ore.tableApply R function, except that here we have easier control over which records to pass in as the data set. The fourth parameter gives the name of the ORE user-defined R script. In the example below, the second parameter (null) would otherwise pass extra arguments to the script, and the third parameter, 'PNG', tells ORE to return the graphic as a PNG image.

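```sql
select *
from table(rqTableEval(cursor(select * from MY_DOCUMENTS),  -- data set passed to the script
                       null,                                -- no extra parameters
                       'PNG',                               -- return the graphic as a PNG image
                       'prepare_tm_data_2'));               -- name of the stored R script
```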

This is the image produced by this SQL statement, as viewed in SQL Developer.

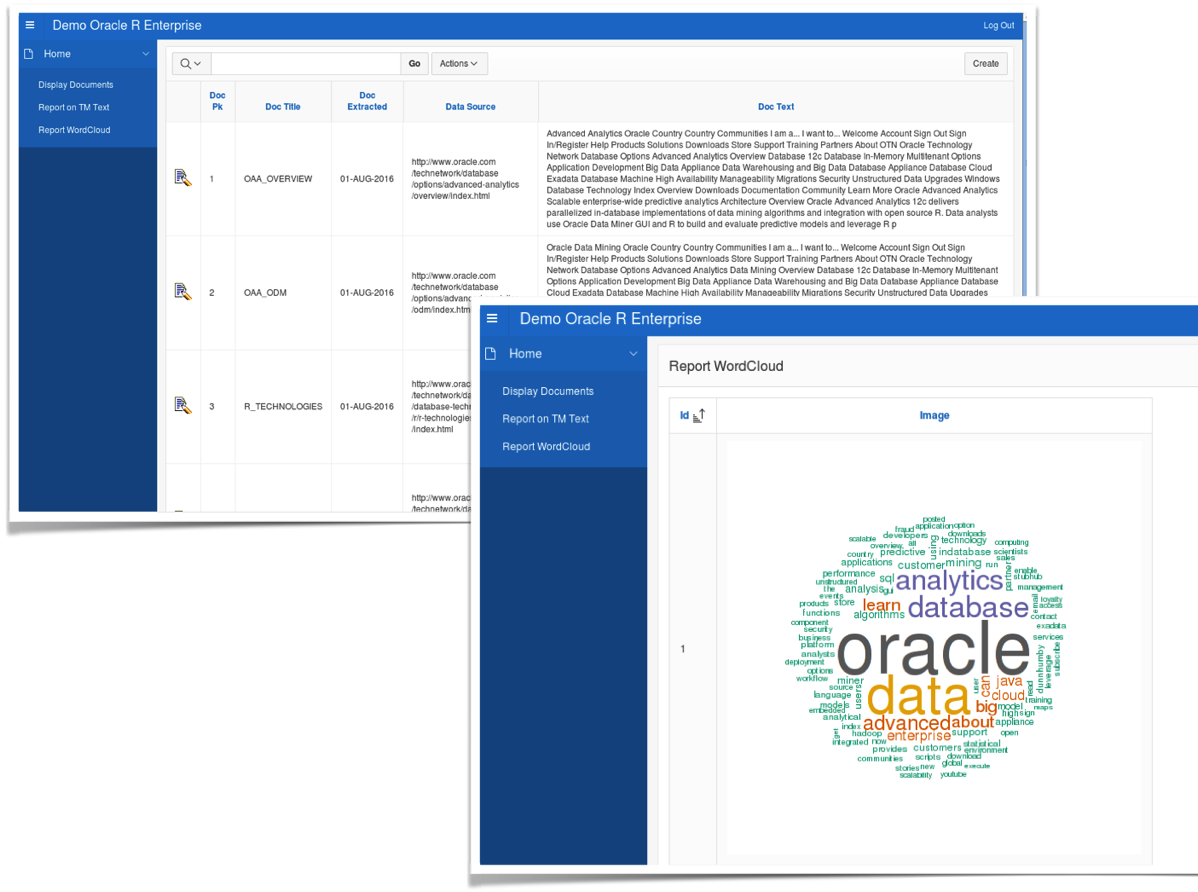

Part 3 - Adding our R Text Mining to APEX

Adding the SQL to call an ORE user defined script is very simple in APEX. You can create a form or a report based on a query, and this query can be the same query that is given above.

Something I like to do is create a view for the ORE SELECT statement. This gives me flexibility for potential future modifications, which could be as simple as changing the name of the script. Also, if I discover a new graphic that I want to use, all I need to do is change the R code in my user-defined R script, and it will automatically be picked up and displayed in APEX. See the images below.

WARNING: Yes, I do have a slight warning. Since the introduction of ORE 1.4, there is a slightly different security model around the use of user-defined R scripts. Instead of going into the details in this blog post, I will write a separate blog post that describes the behaviour and what you need to do to allow APEX to use ORE and call the user-defined R scripts in your schema. So look out for that blog post, coming really soon.

In this blog post I showed you how to use Oracle R Enterprise and the embedded R execution features of ORE to take the text from the webpages and create a word cloud. This is a useful tool for seeing visually which words stand out most on your webpage, and whether the correct message is being put across to your customers.

Thank you for sharing. This is great. And it has inspired me to do something similar.

If it helps, I think you can optimize your code a bit. Maybe change...

```r
ore.scriptCreate("prepare_tm_data_2", function (local_data) {
  library(tm)
  library(SnowballC)
  library(wordcloud)
  tm_data <-""
  for(i in 1:nrow(local_data)) {
    tm_data <- paste(tm_data, local_data[i,]$DOC_TEXT, sep=" ")
  }
  txt_corpus <- Corpus (VectorSource (tm_data))
```

into

```r
ore.scriptCreate("prepare_tm_data_2", function (local_data) {
  library(tm)
  library(SnowballC)
  library(wordcloud)
  # tm_data <-""
  # for(i in 1:nrow(local_data)) {
  #   tm_data <- paste(tm_data, local_data[i,]$DOC_TEXT, sep=" ")
  # }
  txt_corpus <- Corpus (VectorSource (local_data$DOC_TEXT))
```

The idea is that you avoid a row-by-row for loop. In my dataset, it seems to save about 30-50% of the execution time.

Again, thanks for sharing. Cool stuff. Especially making it work with APEX.
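The commenter's point can be checked with a small base-R sketch on a hypothetical data frame (DOC_ID and the sample text are made up). It shows that the row-by-row loop and a single paste(..., collapse = " ") call build the same merged string, apart from the leading space the loop picks up from its "" starting value. The suggested change goes one step further and passes the column straight to VectorSource, which gives one document per row instead of one merged document; since the script sums term counts across documents with rowSums, the word frequencies should come out the same, which the last check illustrates with plain word counts.

```r
# Hypothetical rows standing in for MY_DOCUMENTS (DOC_ID is made up)
local_data <- data.frame(
  DOC_ID   = 1:3,
  DOC_TEXT = c("first page text", "second page", "third page text"),
  stringsAsFactors = FALSE
)

# Original approach: grow one string row by row
tm_data <- ""
for (i in 1:nrow(local_data)) {
  tm_data <- paste(tm_data, local_data[i, ]$DOC_TEXT, sep = " ")
}

# Vectorised: a single paste() over the whole column
tm_data_vec <- paste(local_data$DOC_TEXT, collapse = " ")

# Same text, except for the leading space the loop inherits from its "" seed
stopifnot(identical(trimws(tm_data), tm_data_vec))

# Pooling the tokens of the separate rows gives the same counts as the
# merged text, which is why one-document-per-row works with rowSums()
tokens <- function(x) unlist(strsplit(x, "[[:space:]]+"))
counts_per_row <- table(tokens(local_data$DOC_TEXT))
counts_merged  <- table(tokens(tm_data_vec))
stopifnot(identical(names(counts_per_row), names(counts_merged)),
          all(counts_per_row == counts_merged))
print(counts_merged)
```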

Yes you are correct. Thanks for the comment.

It is easier and quicker to vectorize the data.

It is good to hear you found it useful.

What version of APEX and ORE did you use?

Hi, we are using APEX 5.1.1. We want to make use of Oracle Data Mining and are very new to ODM. Do you suggest any documents or examples? Our plan is to use ORE in the future too.

Thanks,

Ashwin

I've some examples on my blog of using Oracle Data Mining with APEX, also ORE with APEX, and I've examples in my books too.
