As 2014 draws to a close, I am working on finishing off a number of tasks and projects. One of these tasks is an annual one for me: listing all the things I've done as an Oracle ACE Director. It has been a very busy year, not just with ACE activities but work-wise too. That explains why I have been a bit quiet on the blogging side of things in recent months.

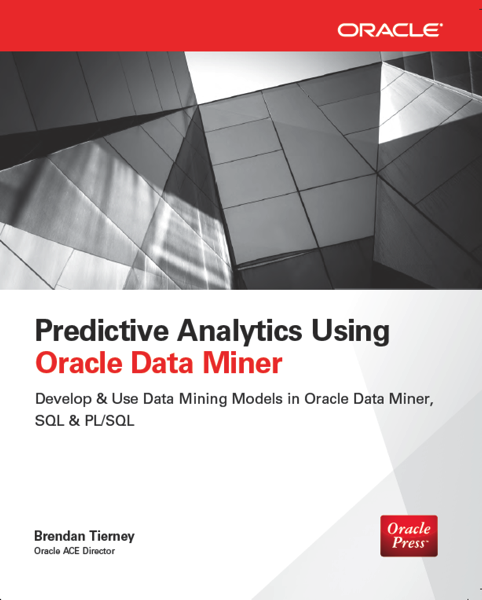

In 2014 I had one major highlight: the publication of my book Predictive Analytics using Oracle Data Miner by Oracle Press. Many thanks to everyone involved in writing this book, especially my family and the people at Oracle Press who gave me the opportunity.

Here is my summary.

Conferences

January : BIWA Summit : 2 presentations (San Francisco, USA) **

March : OUG Ireland Conference (Dublin, Ireland)

April : OUG Norway : 2 presentations (Oslo, Norway) **

June : OUG Finland : 2 presentations (Helsinki, Finland) **

June : Oracle EMEA Data Warehousing Global Leaders Forum (Dublin, Ireland)

August : OUG Panama : 2 presentations (Panama City, Panama) **

August : OUG Costa Rica : 3 presentations (San Jose, Costa Rica) **

August : OUG Mexico : 2 presentations (Mexico City, Mexico) **

September : Oracle Open World (San Francisco, USA) **

December : UKOUG Tech14 : 2 presentations (Liverpool, UK)

December : UKOUG Apps14 (Liverpool, UK)

That is 19 hours of presenting this year.

** Many thanks to the Oracle ACE Director programme for funding the flights and hotels for these conferences. I paid for all other expenses and conferences out of my own pocket.

My ODM Book

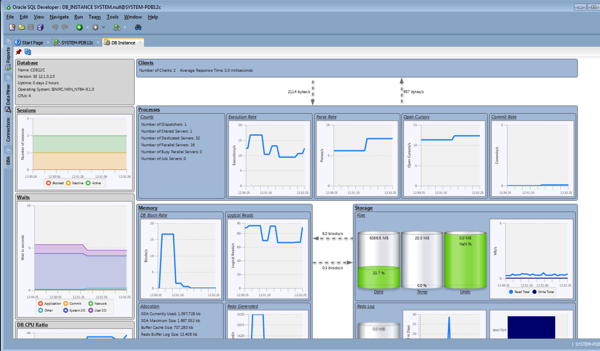

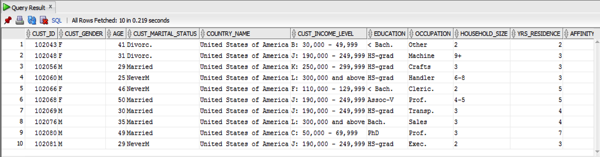

On 8th August my book, Predictive Analytics using Oracle Data Miner, was published by Oracle Press. It all began 12 months and 2 weeks earlier. I had the book written and the technical edits done by the middle of February 2014. Between March and June the copy edits and layouts were completed. The book is ideal for any data scientist, Oracle developer, Oracle architect or Oracle DBA who wants to use the in-database data mining functionality. That way they can use and build upon their existing SQL and PL/SQL skills to perform predictive analytics.

The book is available on Amazon and comes in print and eBook formats.

Oracle Open World

This year I got to go to my second ACE Director briefing. This is held on the Thursday and Friday before OOW. At the briefing lots of very senior people come in to tell us what is happening with the products in their area and what the plans are over the next 12 to 18 months. Much of what we are told is under NDA. My favourite part of this briefing is when Thomas Kurian comes in and talks for about 90 minutes. No slides, no notes. For the first 15 minutes he tells us what Larry & Co are going to announce at OOW, what the main product directions are, etc. Then he opens the floor to questions. You can ask him anything about the Oracle product set (more than 3,000 products) and he will explain in detail what is happening. He even commented on the plans for the Oracle Games Console this year!!!

This year I had the opportunity to present at OOW again. It was a joint presentation with Roel Hartman, and we had the pleasure of being one of the first presentations at OOW, at 8:30am on the Sunday morning. Despite the early start we had a really good turnout for our presentation.

Then I got to enjoy OOW with all the various activities, presentations, entertainment and hanging out at the OTN lounge with the other ACEs and ACEDs.

Blog Posts

One of the things I really like doing is playing with various features of Oracle and then writing blog posts about them. Most of what I blog about revolves around the SQL & PL/SQL statistics functions and the Advanced Analytics Option, comprising Oracle Data Mining and Oracle R Enterprise. In addition to these, I also have posts relating to various Oracle User Group activities, so there is a good mixture of material on the blog.

In 2014 I have written 60 blog posts (including this one). This is a little less than in previous years, and the main reason is probably that I have been extremely busy with project work this year.

OTN Articles

OTN accepted three articles from me in 2014. I was delighted about these acceptances and I'm looking forward to writing more articles for them in 2015.

- Sentiment Analysis using Oracle Data Miner

- ROracle : How to get Started and Commands you need to Know

- Predictive Queries in 12c

I have a few more ideas for articles and I will be writing these in 2015. We will have to wait and see if OTN will accept them.

My Oracle Magazine Collection & Reviews

You may or may not be aware that I've been collecting Oracle Magazine for over 20 years now. I have nearly the entire collection of Oracle Magazine going back to the very first edition. Check out the collection here. You will see that I'm missing a few, and these are highlighted by the grey boxes. If you have any of these and would like to donate them to my collection, then please get in touch.

One of the things I like to blog about is these old Oracle Magazines. If you go to my Oracle Magazine collection page you will see the past editions that I have written a review of. Click on the links to view the blog post reviewing each edition.

In 2014 I have written reviews of the following:

OUG Activities

The Oracle User Group in Ireland (OUG Ireland) has continued to grow this year, both in membership and in the number of attendees at our events. Our flagship event is the annual conference, held each March. This year we had almost 300 people and, unfortunately, some had to be turned away at the door because we had reached the maximum number of attendees for the event. We also added a second day to the conference this year, with Tom Kyte giving a full-day seminar. Again, this was fully booked weeks, even months, beforehand.

Planning has already commenced for 2015 and the call for presentations is now open. Hopefully 2015 will be bigger and better than 2014. In March 2015 we will again have a second day of the conference, with Maria Colgan giving a one-day workshop/seminar on the In-Memory option and the Optimiser. You cannot book your place on this seminar yet, but when booking does open make sure to book quickly, as I'm sure it will sell out fast.

We also held a number of TECH and BI SIGs, and attendance at these has grown significantly over the past few years. This is fantastic and hopefully it will continue. If it does, then maybe we will be able to put on more SIG events.

I am the editor of Oracle Scene magazine, which is published by the UKOUG. This was my first full year as editor after spending many years as deputy editor. In 2014 we published three editions of Oracle Scene, and I would like to thank everyone who submitted an article. You have helped improve the quality of the content and also grow the readership numbers. The call for articles for the Spring edition is now open.

My Oracle Data Science newsletter & My Oracle User Group Weekly newsletter

A couple of years ago I set up a news aggregator based on Twitter feeds and on updates from certain websites. I've divided these into two different newsletters. The first is My Oracle Data Science News and, as you might guess, it is focused on the worlds of Data Science, Predictive Analytics and related developments, with a bit of a focus towards the Oracle world. This newsletter is published daily.

My second newsletter is focused on Oracle User Group activities around the world and is again based on the Twitter handles of the various Oracle User Groups. I include over 40 OUG Twitter handles in the aggregator, so I should be picking up almost everyone. If you discover that your OUG is not included, then drop me an email and I'll add you to the list. This newsletter goes out every Friday.

Plans for 2015 so far

The start of 2015 is looking very busy, and I'm already booked for three conferences: BIWA Summit (CA, USA), OUG Norway and OUG Ireland.

Planning for OUG Ireland is under way and we are hoping to build on the successes we have had over the past few years.

As editor of Oracle Scene magazine, I am planning our first issue of 2015. The call for articles is open and we have been busy recruiting authors to write on specific topics.

I'm sure I've forgotten a few things; I usually do.

It has been a fun year. I've made lots of friends around the world and I look forward to meeting you all at a conference in 2015.