This blog post will look at how you can use the Regression feature in Oracle Data Miner (ODM) to predict the lean/tilt of the Leaning Tower of Pisa in the future.

This is a well-known regression exercise, and it typically comes with a set of known values and the year for each value. There are lots of websites that contain the details of the problem. A summary of it is:

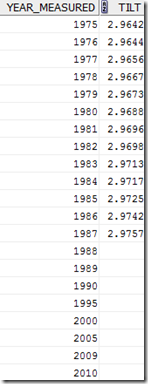

The following table gives measurements for the years 1975-1987 of the "lean" of the Leaning Tower of Pisa. The variable "lean" represents the difference between where a point on the tower would be if the tower were straight and where it actually is. The data is coded as tenths of a millimetre in excess of 2.9 meters, so that the 1975 lean, which was 2.9642 meters, appears in the table as 642.

Given the lean for the years 1975 to 1987, can you calculate the lean for a future date like 2000, 2009 or 2012?

Step 1 – Create the table

Connect to a schema that you have set up for use with Oracle Data Miner. Create a table (PISA) with 2 attributes, YEAR_MEASURED and TILT. Both attributes need to have the NUMBER datatype; if either of them is a VARCHAR, ODM may ignore the attribute or you might get an error.

    CREATE TABLE PISA (
      YEAR_MEASURED NUMBER(4,0),
      TILT          NUMBER(9,4)
    );

Step 2 – Insert the data

There are 2 sets of data that need to be inserted into this table. The first is the data from 1975 to 1987 with the known values of the lean/tilt of the tower. The second set of data is the future years where we do not know the lean/tilt and we want ODM to calculate the value based on the Regression model we are going to create.

    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1975,2.9642);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1976,2.9644);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1977,2.9656);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1978,2.9667);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1979,2.9673);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1980,2.9688);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1981,2.9696);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1982,2.9698);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1983,2.9713);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1984,2.9717);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1985,2.9725);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1986,2.9742);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1987,2.9757);

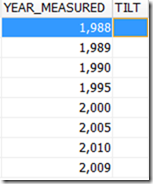

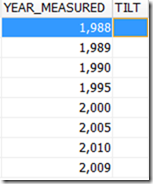

    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1988,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1989,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1990,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1995,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2000,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2005,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2009,null);
    Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2010,null);

    -- Commit so that the rows are visible to other sessions, including ODM.
    COMMIT;
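A quick sanity check of the load can help before moving on. The query below is a minimal sketch that assumes you are connected to the schema that owns the PISA table. With the rows inserted above it should report 13 known values and 8 unknown ones.

```sql
-- COUNT(TILT) skips NULLs, so it counts only the years with a measured tilt.
SELECT COUNT(TILT)            AS known_rows,
       COUNT(*) - COUNT(TILT) AS unknown_rows
FROM   PISA;
```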

Step 3 – Start ODM and Prepare the data

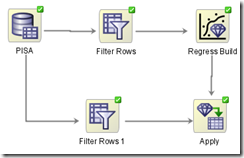

Open SQL Developer and open the ODM Connections tab. Connect to the schema that you have created the PISA table in. Create a new Project or use an existing one and create a new Workflow for your PISA ODM work.

Create a Data Source node in the workspace and assign the PISA table to it. You can select all the attributes.

The table contains the data that we need to build our regression model (our training data set) and the data that we will use for predicting the future lean/tilt (our apply data set).

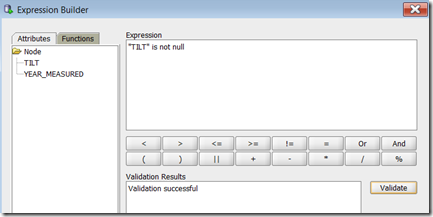

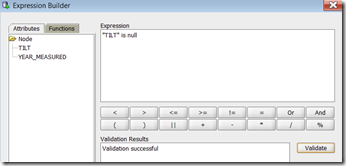

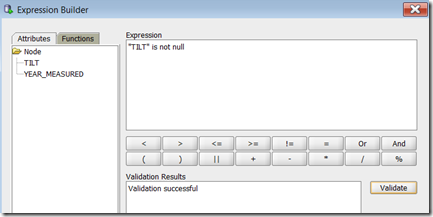

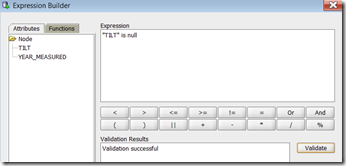

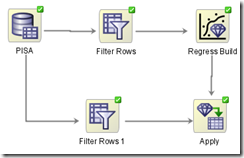

We need to apply a filter to the PISA data source to only look at the training data set. Select the Filter Rows node and drag it to the workspace. Connect the PISA data source to the Filter Rows node. Double-click the Filter Rows node and select the Expression Builder icon. Create the where clause to select only the rows where we know the lean/tilt, that is, the rows where TILT is not null.
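In plain SQL, this filter corresponds roughly to the query below. It is only a sketch of an equivalent query, not the statement ODM generates internally, and it can be useful for checking which rows end up in the training set.

```sql
-- Training rows: every year for which a tilt measurement exists.
SELECT YEAR_MEASURED, TILT
FROM   PISA
WHERE  TILT IS NOT NULL
ORDER BY YEAR_MEASURED;
```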

Step 4 – Create the Regression model

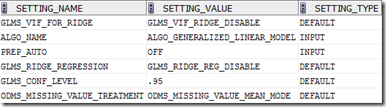

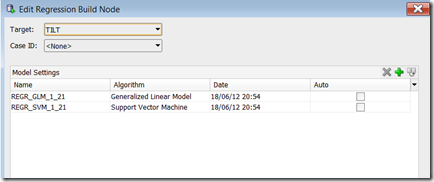

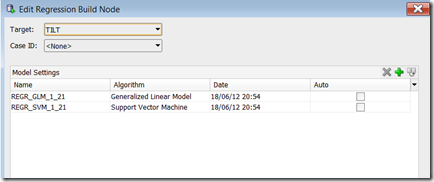

Select the Regression Node from the Models component palette and drop it onto your workspace. Connect the Filter Rows node to the Regression Build Node.

Double-click the Regression Build node and set the Target to the TILT variable. You can leave the Case ID at <None>. You can also choose whether to build a GLM regression model, an SVM regression model, or both. Uncheck the AUTO check box so that Oracle does not try to do any data processing or attribute elimination.

You are now ready to create your regression models.

To do this, right-click the Regression Build node and select Run. When everything is finished you will get a little green tick in the top right-hand corner of each node.
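For readers who prefer scripting, a similar GLM model can be built with the DBMS_DATA_MINING PL/SQL package instead of the workflow. The following is a minimal sketch, not the code that ODM runs for you. The view name PISA_BUILD_V, the settings table PISA_GLM_SETTINGS and the model name PISA_GLM are hypothetical names chosen for this illustration.

```sql
-- Hypothetical view that holds only the training rows.
CREATE OR REPLACE VIEW PISA_BUILD_V AS
  SELECT YEAR_MEASURED, TILT
  FROM   PISA
  WHERE  TILT IS NOT NULL;

-- Hypothetical settings table for the model build.
CREATE TABLE PISA_GLM_SETTINGS (
  SETTING_NAME  VARCHAR2(30),
  SETTING_VALUE VARCHAR2(4000)
);

BEGIN
  -- Choose the GLM algorithm.
  INSERT INTO PISA_GLM_SETTINGS VALUES
    (DBMS_DATA_MINING.ALGO_NAME, DBMS_DATA_MINING.ALGO_GENERALIZED_LINEAR_MODEL);
  -- Switch off automatic data preparation, like unchecking AUTO.
  INSERT INTO PISA_GLM_SETTINGS VALUES
    (DBMS_DATA_MINING.PREP_AUTO, DBMS_DATA_MINING.PREP_AUTO_OFF);
  COMMIT;

  DBMS_DATA_MINING.CREATE_MODEL(
    model_name          => 'PISA_GLM',
    mining_function     => DBMS_DATA_MINING.REGRESSION,
    data_table_name     => 'PISA_BUILD_V',
    case_id_column_name => NULL,       -- no Case ID, as in the workflow
    target_column_name  => 'TILT',
    settings_table_name => 'PISA_GLM_SETTINGS');
END;
/
```

An SVM model could be built the same way by storing DBMS_DATA_MINING.ALGO_SUPPORT_VECTOR_MACHINES as the algorithm name in a separate settings table.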

Step 5 – Predict the Lean/Tilt for future years

The PISA table that we used above also contains our apply data set.

We need to create a new Filter Rows node on our workspace. This will be used to only look at the rows in PISA where TILT is null. Connect the PISA data source node to the new filter node and use the Expression Builder to create this condition.

Next we need to create the Apply Node. This allows us to run the Regression model(s) against our Apply data set. Connect the second Filter Rows node to the Apply Node and the Regression Build node to the Apply Node.

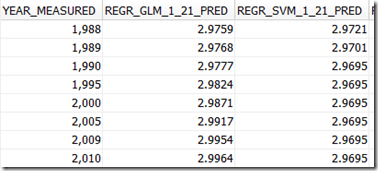

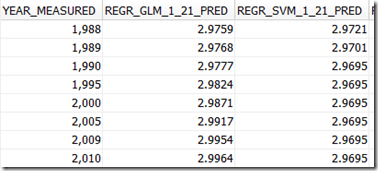

Double click on the Apply Node. Under the Apply Columns we can see that we will have 4 attributes created in the output. 3 of these attributes will be for the GLM model and 1 will be for the SVM model.

Click on the Data Columns tab and edit the data columns so that we get the YEAR_MEASURED attribute to appear in the final output.

Now run the Apply node by right clicking on it and selecting Run.
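The same scoring step can also be written with the SQL PREDICTION function. The sketch below assumes that a regression model called PISA_GLM exists in your schema. That name is hypothetical, so substitute the name of your own model, which you can look up in the USER_MINING_MODELS view.

```sql
-- PISA_GLM is a hypothetical model name; replace it with your own model.
SELECT YEAR_MEASURED,
       PREDICTION(PISA_GLM USING YEAR_MEASURED) AS PREDICTED_TILT
FROM   PISA
WHERE  TILT IS NULL          -- apply data set: years with no measurement
ORDER BY YEAR_MEASURED;
```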

Step 6 – Viewing the results

When we get the little green tick on the Apply node we know that everything has run and completed successfully.

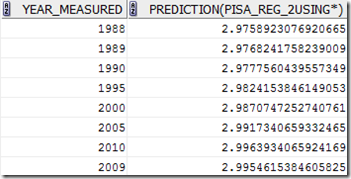

To view the predictions right click on the Apply Node and select View Data from the menu.

We can see that the GLM model gives the results we would expect but the SVM model does not.
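One way to judge what "the results we would expect" look like is to fit an ordinary least-squares line directly in SQL and compare it with the model output. The sketch below uses Oracle's REGR_SLOPE and REGR_INTERCEPT aggregate functions. It is an independent baseline and not the algorithm behind the ODM GLM or SVM nodes. For the measurements above, the slope works out to roughly 0.00093 meters per year.

```sql
WITH fit AS (
  -- The regression aggregates ignore rows where either argument is NULL,
  -- so only the measured years contribute to the fit.
  SELECT REGR_SLOPE(TILT, YEAR_MEASURED)     AS slope,
         REGR_INTERCEPT(TILT, YEAR_MEASURED) AS intercept
  FROM   PISA
)
SELECT p.YEAR_MEASURED,
       ROUND(f.intercept + f.slope * p.YEAR_MEASURED, 4) AS ols_tilt
FROM   PISA p
CROSS JOIN fit f
WHERE  p.TILT IS NULL
ORDER BY p.YEAR_MEASURED;
```

If the GLM predictions sit close to these values while the SVM predictions do not, that is consistent with the observation above.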