I've been selected to give one of these talks, and I've given this talk at some live Oracle Code events and at JavaOne back in October.

The present is pre-recorded and I recorded this video back in September.

I hope to be online at the end of some of these presentations to answer any questions, but unfortunately due to changes with my work commitments I may not be able to be online for all of them.

The moderator for these events will take your questions (or you can send them to me here) and I will write a blog post answering all your questions.

I've been selected to give one of these talks, and I've given this talk at some live Oracle Code events and at JavaOne back in October.

The present is pre-recorded and I recorded this video back in September.

I hope to be online at the end of some of these presentations to answer any questions, but unfortunately due to changes with my work commitments I may not be able to be online for all of them.

The moderator for these events will take your questions (or you can send them to me here) and I will write a blog post answering all your questions.

Monday, December 11, 2017

Oracle Code Online December 2017

I've been selected to give one of these talks, and I've given this talk at some live Oracle Code events and at JavaOne back in October.

The present is pre-recorded and I recorded this video back in September.

I hope to be online at the end of some of these presentations to answer any questions, but unfortunately due to changes with my work commitments I may not be able to be online for all of them.

The moderator for these events will take your questions (or you can send them to me here) and I will write a blog post answering all your questions.

I've been selected to give one of these talks, and I've given this talk at some live Oracle Code events and at JavaOne back in October.

The present is pre-recorded and I recorded this video back in September.

I hope to be online at the end of some of these presentations to answer any questions, but unfortunately due to changes with my work commitments I may not be able to be online for all of them.

The moderator for these events will take your questions (or you can send them to me here) and I will write a blog post answering all your questions.

Wednesday, November 22, 2017

Make SQL Great Again baseball cap

Let me know if you would like to order one.

They cost €15 + P&P

Friday, September 29, 2017

Irish people presenting at OOW

Here is a list of presentations at Oracle Open World and JavaOne in 2017, that will be given by people and partners based in Ireland.

(I'll update this list if I find additional presentations)

| Day | Time | Presentation | Location |

|---|---|---|---|

| Sunday | 13:45-14:30 | SQL: One Language to Rule All Your Data [OOW SUN1238]

Brendan Tierney, Oralytics SQL is a very powerful language that has been in use for almost 40 years. SQL comes with many powerful techniques for analyzing your data, and you can analyze data outside the database using SQL as well. Using the new Oracle Big Data SQL it is now possible to analyze data that is stored in a database, in Hadoop, and in NoSQL all at the same time. This session explores the capabilities in Oracle Database that allow you to work with all your data. Discover how SQL really is the unified language for processing all your data, allowing you to analyze, process, run machine learning, and protect all your data. Hopefully this presentation will be a bit of Fun! For those who have been working with the database for a long time, we can sometimes forget what we can really do. For those starting out in the career may not realise what the database can do. The presentation delivers an important message while having a laugh or two (probably at me). | Marriott Marquis (Golden Gate Level) - Golden Gate C1/C2 |

| Monday | 16:30-17:15 | ESB Networks Automates Core IT Infrastructure and Grid Operations [CON7878]

Simon Holt, DBA / Technical Architect, ESB Networks Andrew Walsh, OMS Application Support, ESB In this session learn how ESB Networks deployed Oracle Utilities Network Management System Release 1.12 on a complete Oracle SuperCluster. Hear about the collaboration between multiple Oracle business units and the in-house expertise that delivered an end-to-end solution. This upgrade is an important step toward expanding ESB Network’s future network operations vision. Her about the challenges, the process of choosing a COTS solution, cybersecurity, and implementation. The session also explores the benefits the new system delivered when managing the effects of large-scale weather events, as well as the technical challenges of deploying a combined hardware and software solution. | Park Central (Floor 2) - Metropolitan I |

| Monday | 16:45-17:30 | Automation and Innovation for Application Management and Support [CON7862]

Raja Roy, Associate Partner, IBM Ireland Automation and innovation are transforming the way application support and development projects are being executed. Market trends show three fundamental shifts: innovation to improve quality of service delivery, the emergence of knowledge-based systems with capabilities for self-service and self-heal, and leveraging the power of the cloud to move capital expenditures to operating expenditures for enhanced functionality. In this session see how IBM introduced innovation in deployments globally to help customers achieve employee and business productivity and enhanced quality of services. | Moscone West - Room 3022 |

| Tuesday | 12:15-13:00 | DMigrating Oracle E-Business Suite to Oracle IaaS: A Customer Journey [CON1848]

Ken MacMahon, IT, Version 1 Ken Lynch, Head of IT, Irish Life Simon Joyce, Consultant / Contractor, Version 1 Software In this session hear about a leading global insurance provider’s experience of migrating Oracle E-Business Suite to Oracle Cloud. This session includes a discussion of the considerations for Oracle Iaas/PaaS vs. alternatives, the total cost of ownership for Oracle IaaS vs. on-premises solutions, the key project and support issues, the benefits of IaaS, and tips and tricks. Gain insights that can help others on their journey with Oracle IaaS generally and with Oracle E-Business Suite specifically. | Moscone West - Room 2001 |

| Wednesday | 14:00-14:45 | Ireland's An Post: Customer Analytics Using Oracle Analytics Cloud [CON7176]

Tony Cassidy, CEO, Vertice John Hagerty, Oracle An Post, the Republic of Ireland’s state-owned provider of postal services, is an organization in transformation. It has used data and analytics to create innovations that led to cost savings and better sustainability. The current focus—customer analytics for a new line of business called Parcels and Packets—utilizes Oracle Analytics Cloud to externalize pertinent data to clients through a portal in a secure, effective, and easy-to-manage environment. In this session hear from An Post and its partner, Vertice, as they discuss the architecture and solution, along with recommendations for ensuring success using Oracle Analytics Cloud. | Moscone West - Room 3009 |

| Thursday | 13:45-14:30 | Is SQL the Best Language for Statistics and Machine Learning? [OOW and JavaOne CON7350]

Brendan Tierney, Oralytics Did you know that Oracle Database comes with more than 300 statistical functions? And most of these statistical functions are available in all versions of Oracle Database? Most people do not seem to know this. When we hear about people performing statistical analytics, we hear them talking about Excel and R, but what if we could do statistical analysis in the database without having to extract any data onto client machines? This presentation explores the various statistical areas available in Oracle Database and gives several demonstrations. We can also greatly expand our statistical capabilities by using Oracle R Enterprise with the embedded capabilities in SQL. This presentation is just one of the 14 presentations that are scheduled for the Thursday! I believe this session is already fully booked, but you can still add yourself to the wait list. | Marriott Marquis (Golden Gate Level) - Golden Gate B |

Tuesday, September 19, 2017

My Oracle Open World 2017 Presentations

Oracle Open World 2017 will be happening very soon (1st-5th October). Still lots to do before I can get on that plane to San Francisco.

This year I'll be giving 2 presentations (see table below). One on the Sunday during the User Groups Sunday sessions. I've been accepted on the EMEA track. I then get a few days off to enjoy and experience OOW until Thursday when I have my second presentation that is part of JavaOne (I think!)

My OOW kicks off on Friday 29th September with the ACE Director briefing at Oracle HQ, after flying to SFO on Thursday 28th. This year it is only for one day instead of two days. I really enjoy this event as we get to learn and see what Oracle will be announcing at OOW as well as some things that will be coming out during the following few months.

| Day | Time | Presentation | Location |

|---|---|---|---|

| Sunday | 13:45-14:30 | SQL: One Language to Rule All Your Data [OOW SUN1238]

SQL is a very powerful language that has been in use for almost 40 years. SQL comes with many powerful techniques for analyzing your data, and you can analyze data outside the database using SQL as well. Using the new Oracle Big Data SQL it is now possible to analyze data that is stored in a database, in Hadoop, and in NoSQL all at the same time. This session explores the capabilities in Oracle Database that allow you to work with all your data. Discover how SQL really is the unified language for processing all your data, allowing you to analyze, process, run machine learning, and protect all your data. Hopefully this presentation will be a bit of Fun! For those who have been working with the database for a long time, we can sometimes forget what we can really do. For those starting out in the career may not realise what the database can do. The presentation delivers an important message while having a laugh or two (probably at me). | Marriott Marquis (Golden Gate Level) - Golden Gate C1/C2 |

| Thursday | 13:45-14:30 | Is SQL the Best Language for Statistics and Machine Learning? [OOW and JavaOne CON7350] Did you know that Oracle Database comes with more than 300 statistical functions? And most of these statistical functions are available in all versions of Oracle Database? Most people do not seem to know this. When we hear about people performing statistical analytics, we hear them talking about Excel and R, but what if we could do statistical analysis in the database without having to extract any data onto client machines? This presentation explores the various statistical areas available in Oracle Database and gives several demonstrations. We can also greatly expand our statistical capabilities by using Oracle R Enterprise with the embedded capabilities in SQL. This presentation is just one of the 14 presentations that are scheduled for the Thursday! I believe this session is already fully booked, but you can still add yourself to the wait list. | Marriott Marquis (Golden Gate Level) - Golden Gate B |

My flights and hotel have been paid by OTN as part of the Oracle ACE Director program. Yes this costs a lot of money and there is no way I'd be able to pay these costs. Thank you.

My diary for OOW is really full. No it is completely over booked. It is just mental. Between attending conference session, meeting with various product teams (we only get to meet at OOW), attending various community meet-ups, this year I get to attend some events for OUG leaders (representing UKOUG), spending some time on the EMEA User Group booth, various meetings with people to discuss how they can help or contribute to the UKOUG, then there is Oak Table World, trying to check out the exhibition hall, spend some time at the OTN/ODC hangout area, getting a few OTN t-shirts, doing some book promotions at the Oracle Press shop, etc., etc., etc. I'm exhausted just thinking about it. Mosts days start at 7am and then finish around 10pm.

I'll need a holiday when I get home! but it will be straight back to work :-(

If you are at OOW and want to chat then contact me via DM on Twitter or WhatsApp (these two are best) or via email (this will be the slowest way).

I'll have another blog post listing the presentations from various people and partners from the Republic of Ireland who are speaking at OOW.

Tuesday, September 5, 2017

Monday, August 28, 2017

How to speed up your Oracle Data Mining with in-memory and parallel

Have you have found running a workflow in Oracle Data Miner slow or running the scripts in the database slow ?

No. Good, because I haven't found it slow.

But (there is always a but) it really depends on the volume of data your are dealing with. For the vast majority of us who aren't of the size of google, amazon, etc have data volumes that are not that large really and a basic server can process many millions of records extremely quickly using Oracle Data Mining.

But what if we have a large volume of data. In one recent project I had a data set containing over 3.5 billion records. Now that is big data. All of this data sitting in an Oracle Database.

So how can we process over 3.5 billion records in a couple of seconds, building 4 machine learning models in that time? Is that really possible with just using an Oracle Database? Yes is the answer and very easily. (Surely I needed Hadoop and Spark to process this data? Nope!)

The Oracle Data Miner (ODMr) tool comes with a new feature in SQL Developer 4 (and higer) that allows you to manage using Parallel execution and the in-memory DB features. These can be accessed on the ODMr Worksheet tool bar.

The best time to look at these setting is when you have created your workflow and are ready to run it for the first time. When you click on the 'Performance Options' link, you will get the following window. It will display the list of nodes you have in the workflow and will then indicate if the Degree of Parallel and the In-Memory options can be set for each of the nodes.

The default values are shown and you can changes these. For example, in a lot of scenarios you might prefer to leave the Degree of Parallel as System Determined. This will then use whatever the the default is for the database and controlled by the DBA, but if you want to specify a particular value then you can, for example setting the degree of parallel to 4 for the 'Class Build' node, in the above image. Similarly for the in-memory option, this will only be available for nodes where the in-memory option would be applicable. This will be where there is a lot of data processing (preparing data, transforming data, performing specific statistics, etc) and for storing any data that is generated by Oracle Data Mining.

But what if you want to change the default values. You can change these at a global level within the SQL Developer Preferences. Here you can set the default to be used for each of the different types of Oracle Data Mining nodes.

I mentioned at the start that I've been able to build 4 machine learning models using Oracle Data Mining on a data set of over 3.5 billion records, all in a couple of seconds. In my scenario Parallel was set to 16 and we didn't use in-memory as we didn't have the licence for it. You can see that machine learning at lighting speed (ish) is possible. This timing is only for building the models, which is the step that consumes the most about of resources and time. When it comes to scoring the data, that is lighting fast. In may scenario, scoring over 300,000 was less than a second, and I didn't use parallel or anything else to speed things up. Because we didn't need to.

Go give it a try!

Monday, August 21, 2017

Scheduling ODMr Workflows in SQL Developer 4.2+

Just above the workflow worksheet we have a row of icon buttons. In the above image one of these is highlighted by a red box. This is the workflow scheduler. So go ahead on click on it.

Just above the workflow worksheet we have a row of icon buttons. In the above image one of these is highlighted by a red box. This is the workflow scheduler. So go ahead on click on it.

In most cases you will want to run the entire workflow. The default option presented to is 'All Nodes'. If you would only like a subset of the nodes to run, you can click-on or select the node in the workflow and then click on the scheduler icon. In our example we are going to run the entire workflow, so select 'All Nodes' from the menu.

In most cases you will want to run the entire workflow. The default option presented to is 'All Nodes'. If you would only like a subset of the nodes to run, you can click-on or select the node in the workflow and then click on the scheduler icon. In our example we are going to run the entire workflow, so select 'All Nodes' from the menu.

The main scheduler window will open. Here you can set the Start Date and time of the first run, what the Repeat frequency is (none, every day, every week or custom) and to End the Repeat (Never, After, On Date). To schedule a once off run of the workflow just set the Date and Time, set the Repeat to 'None' and End Repeat should disappear in this instance. If Repeat was set to another value then you can set a value for End Repeat.

Go ahead and run the scheduler by clicking on the OK button.

The main scheduler window will open. Here you can set the Start Date and time of the first run, what the Repeat frequency is (none, every day, every week or custom) and to End the Repeat (Never, After, On Date). To schedule a once off run of the workflow just set the Date and Time, set the Repeat to 'None' and End Repeat should disappear in this instance. If Repeat was set to another value then you can set a value for End Repeat.

Go ahead and run the scheduler by clicking on the OK button.

A Scheduled Jobs window should open that will display the details of the scheduled job. When this job is run in the database, this will be shown in the Workflow Jobs window. Here you can see and monitor the progress of the of the workflow.

A Scheduled Jobs window should open that will display the details of the scheduled job. When this job is run in the database, this will be shown in the Workflow Jobs window. Here you can see and monitor the progress of the of the workflow.

and that's it. Nice an simple.

But there is a something you needed to be WARNED about. When you schedule a workflow, Oracle Data Miner will lock the workflow. This is to ensure that no changes can be made to the scheduled workflow. This is indicated with the Locked button appearing on the icon menu. If you click on this button to unlock the workflow, it will also cancel your scheduled jobs associated with this workflow.

and that's it. Nice an simple.

But there is a something you needed to be WARNED about. When you schedule a workflow, Oracle Data Miner will lock the workflow. This is to ensure that no changes can be made to the scheduled workflow. This is indicated with the Locked button appearing on the icon menu. If you click on this button to unlock the workflow, it will also cancel your scheduled jobs associated with this workflow.

Also when the scheduled workflow is finished, the workflow will remain locked. So you will have to click on this Locked button to unlock the workflow.

There are a few additional advanced features. These can be found by clicking on the 'Advanced...' button in the main scheduler window. The first table displayed allows you to specify if you want an email sent for the different stages of the scheduled job. The second tab allows you to set the Job Priority, Max Failures, Max Run Duration and Schedule Limits.

Also when the scheduled workflow is finished, the workflow will remain locked. So you will have to click on this Locked button to unlock the workflow.

There are a few additional advanced features. These can be found by clicking on the 'Advanced...' button in the main scheduler window. The first table displayed allows you to specify if you want an email sent for the different stages of the scheduled job. The second tab allows you to set the Job Priority, Max Failures, Max Run Duration and Schedule Limits.

Monday, August 7, 2017

Auto enabling APPROX_* function in the Oracle Database

With the releases of 12.1 and 12.2 of Oracle Database we have seen some new functions that perform approximate calculations. These include:

- APPROX_COUNT_DISTINCT

- APPROX_COUNT_DISTINCT_DETAIL

- APPROX_COUNT_DISTINCT_AGG

- APPROX_MEDIAN

- APPROX_PERCENTILE

- APPROX_PERCENTILE_DETAIL

- APPROX_PERCENTILE_AGG

These functions can be used when approximate answers can be used instead of the exact answer. Yes can have many scenarios for these and particularly as we move into the big data world, the ability to process our data quickly is slightly more important and exact numbers. For example, is there really a difference between 40% of our customers being of type X versus 41%. The real answer to this is, 'It Depends!', but for a lot of analytical and advanced analytical methods this difference doesn't really make a difference.

There are various reports of performance improvement of anything from 6x to 50x with the response times of the queries that are using these functions, instead of using the more traditional functions.

If you are a BI or big data analyst and you have build lots of code and queries using the more traditional functions. But what if you now want to use the newer functions. Does this mean you have go and modify all the code you have written over the years? you can imagine getting approval to do this!

The simple answer to this question is 'No'. No you don't have to change any code, but with some parameter changes for the DB or your session you can tell the database to automatically switch from using the traditional functions (count, etc) to the newer more optimised and significantly faster APPROX_* functions.

So how can you do this magic?

First let us see what the current settings values are:

SELECT name, value

FROM v$ses_optimizer_env

WHERE sid = sys_context('USERENV','SID')

AND name like '%approx%';

Now let us run a query to test what happens using the default settings (on a table I have with 10,500 records).

set auto trace on select count(distinct cust_id) from test_inmemory; COUNT(DISTINCTCUST_ID) ---------------------- 1500 Execution Plan ---------------------------------------------------------- Plan hash value: 2131129625 -------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | -------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | 1 | 13 | 70 (2)| 00:00:01 | | 1 | SORT AGGREGATE | | 1 | 13 | | | | 2 | VIEW | VW_DAG_0 | 1500 | 19500 | 70 (2)| 00:00:01 | | 3 | HASH GROUP BY | | 1500 | 7500 | 70 (2)| 00:00:01 | | 4 | TABLE ACCESS FULL| TEST_INMEMORY | 10500 | 52500 | 69 (0)| 00:00:01 | --------------------------------------------------------------------------------------

Let us now set the automatic usage of the APPROX_* function.

alter session set approx_for_aggregation = TRUE; SQL> select count(distinct cust_id) from test_inmemory; COUNT(DISTINCTCUST_ID) ---------------------- 1495 Execution Plan ---------------------------------------------------------- Plan hash value: 1029766195 --------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time | --------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | 1 | 5 | 69 (0)| 00:00:01 | | 1 | SORT AGGREGATE APPROX| | 1 | 5 | | | | 2 | TABLE ACCESS FULL | TEST_INMEMORY | 10500 | 52500 | 69 (0)| 00:00:01 | ---------------------------------------------------------------------------------------

We can see above that the APPROX_* equivalent function was used, and slightly less work. But we only used this on a very small table.

The full list of session level settings is:alter session set approx_for_aggregation = TRUE; alter session set approx_for_aggregation = FALSE; alter session set approx_for_count_distinct = TRUE; alter session set approx_for_count_distinct = FALSE; alter session set approx_for_percentile = 'PERCENTILE_CONT DETERMINISTIC'; alter session set approx_for_percentile = PERCENTILE_DISC; alter session set approx_for_percentile = NONE;

Or at a system wide level:

alter system set approx_for_aggregation = TRUE; alter system set approx_for_aggregation = FALSE; alter system set approx_for_count_distinct = TRUE; alter system set approx_for_count_distinct = FALSE; alter system set approx_for_percentile = 'PERCENTILE_CONT DETERMINISTIC'; alter system set approx_for_percentile = PERCENTILE_DISC; alter system set approx_for_percentile = NONE;

And to reset back to the default settings:

alter system reset approx_for_aggregation; alter system reset approx_for_count_distinct; alter system reset approx_for_percentile;

Monday, July 31, 2017

Part 5 - The right to be forgotten (EU GDPR)s

This is the fifth part of series of blog posts on 'How the EU GDPR will affect the use of Machine Learning'

Article 17 is titled Right of Erasure (right to be forgotten) allows a person to obtain their data and for the data controller to ensure that the personal data is erased without any any delay.

This does not mean that their data can be flagged for non-contact, as I've seen done in many companies, only for the odd time when one of these people have been contacted.

It will also allow for people to choose to not take part in data profiling. Meaning that these people cannot be included in any of the input data sets. And should not be the scenario where they are included but they are flagged as not to be contacted in any post ML process where the consumers are contacted, just like I've seen in lots of places.

Click back to 'How the EU GDPR will affect the use of Machine Learning - Part 1' for links to all the blog posts in this series.

Monday, July 24, 2017

Part 4b - (Article 22: Profiling) Why me? and how Oracle 12c saves the day

This is the fourth part of series of blog posts on 'How the EU GDPR will affect the use of Machine Learning'

In this blog post (Part4b) I will examine some of the more technical aspects and how the in-database machine learning functions saves the day!

Probably in most cases where machine learning has been used and/or deployed in your company to analyse, profile and predict customers, it is more than likely that some sort of black box machine learning has been used.

Typical black box machine learning will include using algorithms like Neural Networks, but these can extended to other algorithms, within the context of the EU GDPR requirements, such as SVMs, GLM, etc. Additionally most companies don't just use one algorithm to make a decision on a customer. Many algorithms and rules based decision make can be used together, using some sort of voting system, to determine if a customer is targeted in a certain way.

Basically all of these do not really support the requirements of the EU GDPRs.

In most cases we need to go back to basics. Back to more simpler approaches of machine learning for customer profiling and prediction. This means no more, for now, ensemble models, unless you can explain why a customer was selected. This means having to use simple algorithms like Decision Trees, at a push Naive Bayes, and using some well defined rules based methods. All of these approaches allows us to see and understand why a customer was selected and based on Article 22 being able to explain why.

But there is some hope. Some of the commercial machine learning vendors already for some prediction insights built into their software. Very few if any open source solutions have this capability.

For example, Oracle introduced a new function called PREDICTION_DETAILS in Oracle 12.1c and this was expanded in Oracle 12.2c to cover all their in-database machine learning algorithms.

The following is an example of using this function for an SVM model. When you examine the boxes in the following image you an see that a slightly different set of attributes and the values of these attributes are listed. Each box corresponds to a different customer. This means we can give an explanation of why a customer was selected. Oracle 12c saves the day.

select cust_id,

prediction(clas_svm_1_27 using *) pred_value,

prediction_probability(clas_svm_1_27 using *) pred_prob,

prediction_details(clas_svm_1_27 using *) pred_details

from mining_data_apply_v;

If you have a look at other commercial machine learning solutions, you will find some give similar functionality or it will be available soon. Can we get the same level of detail from open source solutions. Not really unless you are using Decision Tress and maybe Naive Bayes. This means that companies that have gone done the pure open source for their machine learning may have to look at using alternative software and may have to folk out some hard earned dollars/euros to make sure that they are complainant with Article 22 of the EU GDPRs.

Click back to 'How the EU GDPR will affect the use of Machine Learning - Part 1' for links to all the blog posts in this series.

Monday, July 17, 2017

Part 4a - (Article 22: Profiling) Why me? and how Oracle 12c saves the day

This is the fourth part of series of blog posts on 'How the EU GDPR will affect the use of Machine Learning'

In this blog post (Part4a) I will discuss the specific issues relating to the use of machine learning algorithms and models. In the next blog post (Part 4a) I will examine some of the more technical aspects and how the in-database machine learning functions saves the day!

The EU GDPR has some rules that will affect the use of machine learning models for predicting customers.

As with all the other section of the EU GDPR, the use of machine learning and profiling of individuals does not affect organisations based in within Europe but affects all organisations around the globe who will be using these methods and associated data.

Article 22 of the EU GDPR deals with the “Automated individual decision-making, including profiling” and effectively creates a “right to explanation”. This means that an individual is entitled to an explanation of the decisions made by automated decision making models or profiling that has resulted in a decision being made about them. These new regulations present many challenges for organisations and their teams of data scientists.

To be able to give an explanation of the decision made by the machine learning models or by profile, requires the ability of the underlying models and their associated algorithms to be able to gives details of the model processing and how the decision about the individual has been obtained. For most machine learning models and algorithms this is generally not possible. For a limited set of algorithms, for example with decision trees, this is possible, but with other algorithms such as support vector machines, some regression models, and in particular neural networks, the ability to give these explanations is not possible. Some of these can be considered black box modelling (for neural networks) and grey box modelling for the others. But these algorithms are in widespread use in many organisations and are core to their predictive analytics solutions. This presents many challenges for organisations as they will need to look at alternative algorithms that many not have the same degree of predictive accuracy. With the recent rise of deep learning using neural networks, is extremely difficult to explain the multilayer neural net with various learned weights between each of the nodes at each layer.

Ensemble machine learning methods like Random Forests are also a challenge. Although the underlying machine learning algorithm is explainable, the ensemble approach of Random Forest, and other similar methods, result from an aggregation, averaging or voting process. Additionally, scenarios when machine learning models are combine with multiple other models, along with rules based solutions, where the predicted outcome is based on the aggregation or voting of all methods may no longer be useable. The ability to explain a predicted outcome using ensemble methods may not be possible and this will affect their continued use for predictive analytics.

In addition to the requirements of Article 22, Articles 13 and 14 state that the a person has a right to the meaningful information about the logic involved in profiling the person.

Over the past few years many of the commercially available machine learning solutions have been preparing for changes required to meet the EU GDPR. Some vendors have been able to add in greater model explanation features as well as specific explanations for each of the individual predictions. Many other vendors are will working on adding the required level of explanations and some of these many not be available in time for when the EU GDPR goes live in April 2018. This will present many challenges for organisations around the world who will be using data gathered within the EU region.

For machine learning based on open source languages and tools the EU GDPR present a very different challenge. While a small number of these come with some simple explanations for some of the more basic machine learning algorithms, there seems to be little information available on what work is currently being done to update these languages and tools. The limiting factor with making the required updates in the open source community lies with there being no commercial push to so. As a result of these limitation, many organisations may be forced into using commercial machine learning products, but for many other organisation the cost of doing so will be prohibitive.

It is clear that the tasks of building machine learning models have become significantly more complex with the introduction of the new EU GDPR. This complexity applies to the selection of what data can be used, ensuring there is no inherent discrimination in the machine learning models and the ability of these models to give an explanation of how the predicted outcome was determined. Companies around the World need to address these issues and in doing so may limit what software and algorithms that can be used for the customer profiling and predictive analytics. Although some of the commercially available machine learning languages and products can give the required insights, more product enhancements are required. Many challenges are facing machine learning open source community, with many research group only starting in recent months to look at how their languages, packages and tools can be enhanced to facilitate the requirements of the EU GDPR.

Click back to 'How the EU GDPR will affect the use of Machine Learning - Part 1' for links to all the blog posts in this series.

Wednesday, July 12, 2017

PRECIS R package

If you use R then you are very familiar with the SUMMARY function.

If you use R then you are very familiar with the name Hadley Wickham. He has produced some really cool packages for R.

He has produced a new R package and function that complements the commonly used SUMMARY R function.

The following outlines how you can install this new R package from GitHub (Hadley's GitHub is https://github.com/hadley/).

Install the R devtools package. This will allow you to download the package code from GitHub.

install.packages("devtools")

Install the package from Hadley's GitHub repository.

devtools::install_github("hadley/precis")

Load the library.

library(precis)

The following displays information produced by the SUMMARY and the PRECIS function.

> summary(mtcars)

mpg cyl disp hp drat wt

Min. :10.40 Min. :4.000 Min. : 71.1 Min. : 52.0 Min. :2.760 Min. :1.513

1st Qu.:15.43 1st Qu.:4.000 1st Qu.:120.8 1st Qu.: 96.5 1st Qu.:3.080 1st Qu.:2.581

Median :19.20 Median :6.000 Median :196.3 Median :123.0 Median :3.695 Median :3.325

Mean :20.09 Mean :6.188 Mean :230.7 Mean :146.7 Mean :3.597 Mean :3.217

3rd Qu.:22.80 3rd Qu.:8.000 3rd Qu.:326.0 3rd Qu.:180.0 3rd Qu.:3.920 3rd Qu.:3.610

Max. :33.90 Max. :8.000 Max. :472.0 Max. :335.0 Max. :4.930 Max. :5.424

qsec vs am gear carb

Min. :14.50 Min. :0.0000 Min. :0.0000 Min. :3.000 Min. :1.000

1st Qu.:16.89 1st Qu.:0.0000 1st Qu.:0.0000 1st Qu.:3.000 1st Qu.:2.000

Median :17.71 Median :0.0000 Median :0.0000 Median :4.000 Median :2.000

Mean :17.85 Mean :0.4375 Mean :0.4062 Mean :3.688 Mean :2.812

3rd Qu.:18.90 3rd Qu.:1.0000 3rd Qu.:1.0000 3rd Qu.:4.000 3rd Qu.:4.000

Max. :22.90 Max. :1.0000 Max. :1.0000 Max. :5.000 Max. :8.000

> precis(mtcars)

# data.frame [32 x 11]

name type precis

1 mpg dbl 10.4 [ 15.4 ( 19.2) 22.8] 33.9

2 cyl dbl 4 (11) 6 (7) 8 (14)

3 disp dbl 71.1 [121.0 (196.0) 334.0] 472.0

4 hp dbl 52 [ 96 ( 123) 180] 335

5 drat dbl 2.76 [ 3.08 ( 3.70) 3.92] 4.93

6 wt dbl 1.51 [ 2.54 ( 3.32) 3.65] 5.42

7 qsec dbl 14.5 [ 16.9 ( 17.7) 18.9] 22.9

8 vs dbl 0 (18) 1 (14)

9 am dbl 0 (19) 1 (13)

10 gear dbl 3 (15) 4 (12) 5 (5)

11 carb dbl 1 [ 2 ( 2) 4] 8

> precis(mtcars, histogram=TRUE)

# data.frame [32 x 11]

name type precis

1 mpg dbl 10.4 ▂▁▇▃▅▅▂▂▁▁▂▂ 33.9

2 cyl dbl 4 ▅▁▁▁▁▁▁▁▁▃▁▁▁▁▁▁▁▁▁▇ 8

3 disp dbl 71.1 ▅▁▁▃▇▂▁▁▁▁▃▁▃▁▅▁▁▁▁▁▁ 472.0

4 hp dbl 52 ▁▅▅▇▂▂▇▁▂▁▂▁▁▁▁ 335

5 drat dbl 2.76 ▂▂▇▂▁▅▇▃▂▁▁▁ 4.93

6 wt dbl 1.51 ▁▁▂▂▁▁▂▁▂▁▇▂▂▁▁▁▁▁▁▂▁ 5.42

7 qsec dbl 14.5 ▂▂▁▁▃▇▅▁▇▂▂▂▁▁▁▁▁ 22.9

8 vs dbl 0 ▇▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▅ 1

9 am dbl 0 ▇▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▅ 1

10 gear dbl 3 ▇▁▁▁▁▁▁▁▁▅▁▁▁▁▁▁▁▁▁▂ 5

11 carb dbl 1 ▅▇▁▂▁▇▁▁▁▁▁▁▁▁ 8

Monday, July 10, 2017

Part 3 - Ensuring there is no Discrimination in the Data and machine learning models

This is the third part of series of blog posts on 'How the EU GDPR will affect the use of Machine Learning'

The new EU GDPR has some new requirements that will affect what data can be used to ensure there is no discrimination. Additionally, the machine learning models needs to ensure that there is no discrimination with the predictions it will make. There is an underlying assumption that the organisation has the right to use the data about individuals and that this data has been legitimately obtained. The following outlines the areas relating to discrimination:- Discrimination based on unfair treatment of an individual based on using certain variables that may be inherently discriminatory. For example, race, gender, etc., and any decisions based on machine learning methods or not, that are based on an individual being part of one or more of these variables. This is particularly challenging for data scientists and it can limit some of the data points that can be included in their data sets.

- All data mining models need to tested to ensure that there is no discrimination built into them. Although the data scientist has removed any obvious variables that may cause discrimination, the machine learning models may have been able to discover some bias or discrimination based on the patterns it has discovered in the data.

- In the text preceding the EU GDPR (paragraph 71), details the requirements for data controllers to “implement appropriate technical and organizational measures” that “prevent, inter alia, discriminatory effects” based on sensitive data. Paragraph 71 and Article 22 paragraph 4 addresses discrimination based on profiling (using machine learning and other methods) that uses sensitive data. Care is needed to remove any associated correlated data.

- If one group of people are under represented in a training data set then, depending on the type of prediction being used, may unknowingly discriminate this group when it comes to making a prediction. The training data sets will need to be carefully partitioned and separate machine learning models built on each partition to ensure that such discrimination does not occur.

In the next blog post I will look at addressing the issues relating to Article 22 on the right to an explanation on outcomes automated individual decision-making, including profiling using machine learning and other methods.

Click back to 'How the EU GDPR will affect the use of Machine Learning - Part 1' for links to all the blog posts in this series.

Monday, July 3, 2017

Part 2 - Do I have permissions to use the data for data profiling?

This is the second part of series of blog posts on 'How the EU GDPR will affect the use of Machine Learning'

I have the data, so I can use it? Right?

I can do what I want with that data? Right? (sure the customer won't know!)

NO. The answer is No you cannot use the data unless you have been given the permission to use it for a particular task.

The GDPR applies to all companies worldwide that process personal data of European Union (EU) citizens. This means that any company that works with information relating to EU citizens will have to comply with the requirements of the GDPR, making it the first global data protection law.

The GDPR tightens the rules for obtaining valid consent to using personal information. Having the ability to prove valid consent for using personal information is likely to be one of the biggest challenges presented by the GDPR. Organisations need to ensure they use simple language when asking for consent to collect personal data, they need to be clear about how they will use the information, and they need to understand that silence or inactivity no longer constitutes consent.

You will need to investigate the small print of all the terms and conditions that your customers have signed. Then you need to examine what data you have, how and where it was collected or generated, and then determine if I have to use this data beyond what the original intention was. If there has been no mention of using the customer data (or any part of it) for analytics, profiling, or anything vaguely related to it then you cannot use the data. This could mean that you cannot use any data for your analytics and/or machine learning. This is a major problem. No data means no analytics and no targeting the customers with special offers, etc.

Data cannot be magically produced out of nowhere and it isn't the fault of the data science team if they have no data to use.

How can you over come this major stumbling block?

The first place is to review all the T&Cs. Identify what data can be used and what data cannot be used. One approach for data that cannot be used is to update the T&Cs and get the customers to agree to them. Yes they need to explicitly agree (or not) to them. Giving them a time limit to respond is not allowed. It needs to be explicit.

Yes this will be hard work. Yes this will take time. Yes it will affect what machine learning and analytics you can perform for some time. But the sooner you can identify these area, get the T&Cs updated, get the approval of the customers, the sooner the better and ideally all of this should be done way in advance on 25th May, 2018.

In the next blog post I will look at addressing Discrimination in the data and in the machine learning models.

Click back to 'How the EU GDPR will affect the use of Machine Learning - Part 1' for links to all the blog posts in this series.

Tuesday, June 27, 2017

How the EU GDPR will affect the use of Machine Learning - Part 1

On 5 December 2015, the European Parliament, the Council and the Commission reached agreement on the new data protection rules, establishing a modern and harmonised data protection framework across the EU. Then on 14th April 2016 the Regulations and Directives were adopted by the European Parliament.

The EU GDPR comes into effect on the 25th May, 2018.

Are you ready ?

The EU GDPR will affect every country around the World. As long as you capture and use/analyse data captured with the EU or by citizens in the EU then you have to comply with the EU GDPR.

Over the past few months we have seen a increase in the amount of blog posts, articles, presentations, conferences, seminars, etc being produced on how the EU GDPR will affect you. Basically if your company has not been working on implementing processes, procedures and ensuring they comply with the regulations then you a bit behind and a lot of work is ahead of you.

Like I said there was been a lot published and being talked about regarding the EU GDPR. Most of this is about the core aspects of the regulations on protecting and securing your data. But very little if anything is being discussed regarding the use of machine learning and customer profiling.

Do you use machine learning to profile, analyse and predict customers? Then the EU GDPRs affect you.

Article 22 of the EU GDPRs outlines some basic capabilities regarding machine learning, and in additionally Articles 13, 14, 19 and 21.

Over the coming weeks I will have the following blog posts. Each of these address a separate issue, within the EU GDPR, relating to the use of machine learning.

- Part 2 - Do I have permissions to use the data for data profiling?

- Part 3 - Ensuring there is no Discrimination in the Data and machine learning models.

- Part 4a - (Article 22: Profiling) Why me? and how Oracle 12c saves the day

- Part 4b - (Article 22: Profiling) Why me? and how Oracle 12c saves the day

- Part 5 - The right to be forgotten (EU GDPR)

Thursday, June 22, 2017

Installing Scala and Apache Spark on a Mac

The following outlines the steps I've followed to get get Scala and Apache Spark installed on my Mac. This allows me to play with Apache Spark on my laptop (single node) before deploying my code to a multi-node cluster.

1. Install Homebrew

Homebrew seems to be the standard for installing anything on a Mac. To install Homebrew run/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

When prompted enter your system/OS password to allow the install to proceed.

2. Install xcode-select (if needed)

You may have xcode-select already installed. This tool allows you to install the languages using command line.

xcode-select --install

If it already installed then nothing will happen and you will get the following message.

xcode-select: error: command line tools are already installed, use "Software Update" to install updates

3. Install Scala

[If you haven't installed Java then you need to also do this.]

Use Homebrew to install scala.

brew install scala

4. Install Apache Spark

Now to install Apache Spark.

brew install apache-spark

5. Start Spark

Now you can start the Apache Spark shell.

spark-shell

6. Hello-World and Reading a file

The traditional Hello-World example.

scala> val helloWorld = "Hello-World" helloWorld: String = Hello-World

or

scala> println("Hello World")

Hello World

What is my current working directory.

scala> val whereami = System.getProperty("user.dir")

whereami: String = /Users/brendan.tierney

Read and process a file.

scala> val lines = sc.textFile("docker_ora_db.txt")

lines: org.apache.spark.rdd.RDD[String] = docker_ora_db.txt MapPartitionsRDD[3] at textFile at :24

scala> lines.count()

res6: Long = 36

scala> lines.foreach(println)

####################################################################

## Specify the basic DB parameters

## Copyright(c) Oracle Corporation 1998,2016. All rights reserved.##

## ##

##------------------------------------------------------------------

## Docker OL7 db12c dat file ##

## ##

## db sid (name)

####################################################################

## default : ORCL

## cannot be longer than 8 characters

##------------------------------------------------------------------

...

There will be a lot more on how to use Spark and how to use Spark with Oracle (all their big data stuff) over the coming months.

[I've been busy for the past few months working on this stuff, EU GDPR issues relating to machine learning, and other things. I'll be sharing some what I've been working on and learning in blog posts over the coming weeks]

Tuesday, May 23, 2017

Slides from the Ireland OUG Meetup May 2017

Here are some of the slides from our meetup on 11th May 2017.

The remaining slides will be added when they are available.

Thursday, April 27, 2017

OUG Ireland Meetup 11th May

The next OUG Ireland Meetup is happening on 11th May, in the Bank of Ireland Grand Canal Dock. This is a free event and is open to every one. You don't have to be a member to attend.

Following on from a very successful 2 day OUG Ireland Conference with over 250 attendees, we have organised our next meetup. This was mentioned during the opening session of the conference.

We typically have 2 presentations at each Meetup and on 11th May we have:

1. Oracle Analytics Cloud Service.

Oralce Analytics Cloud Service was only released a few weeks ago and we some local people who have been working with the beta and early adopter releases. They will be giving us some insights on this new product and how it compares with other analytics products like Oracle Data Visualization and OBIEE.Running Oracle DataGuard on RAC on Oracle 12c

The second presentation will be on using Oracle DataGuard on RAC on Oracle 12c. We have a very experienced DBA talking about his experiences of using these products how to workaround some key bugs and situations to be aware of for administration purposes. Lots of valuable information to be gained.

There will be some food and refreshments available for you to enjoy.

The Meetup will be in Bank of Ireland, Grand Canal Dock. This venue is a very popular locations for Meetups in Dublin.

Friday, April 21, 2017

Setting up Oracle Database on Docker

A couple of days ago it was announced that several Oracle images were available on the Docker Store.

This is by far the easiest Oracle Database install I have every done !

You simply have no excuse now for not installing and using an Oracle Database. Just go and do it now!

The following steps outlines what I did you get an Oracle 12.1c Database.

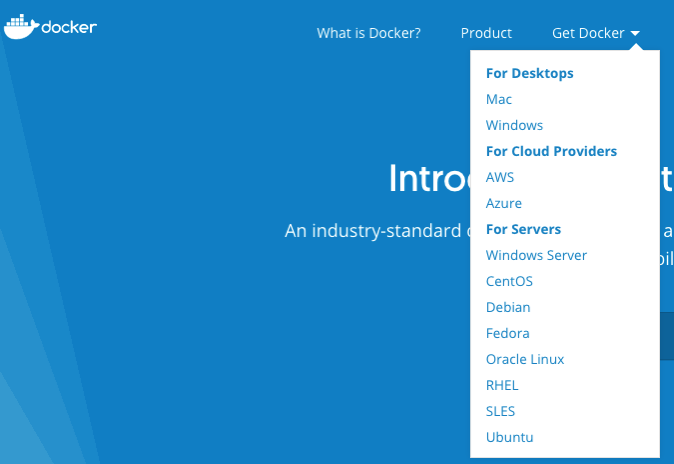

1. Download and Install Docker

There isn't much to say here. Just go to the Docker website, select the version docker for your OS, and just install it.

You will probably need to create an account with Docker.

After Docker is installed it will automatically start and and will be placed in your system tray etc so that it will automatically start each time you restart your laptop/PC.

2. Adjust the memory allocation

From the system tray open the Docker application. In the Advanced section allocate a bit more memory. This will just make things run a bit smoother. Be a bit careful on how much to allocate.

In the General section check the tick-box for automatically backing up Docker VMs. This is assuming you have back-ups setup, for example with Time Machine or something similar.

3. Download & Edit the Oracle Docker environment File

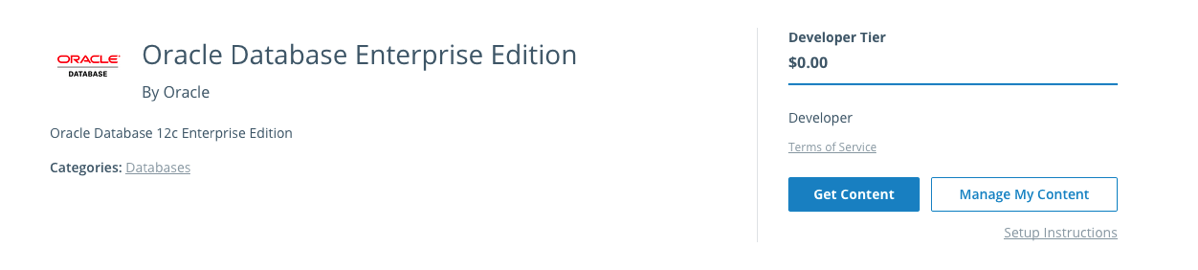

On the Oracle Database download Docker webpage, click on the the Get Content button.

You will have to enter some details like your name, company, job title and phone number, then click on the check-box, before clicking on the Get Content button. All of this is necessary for the Oracle License agreement.

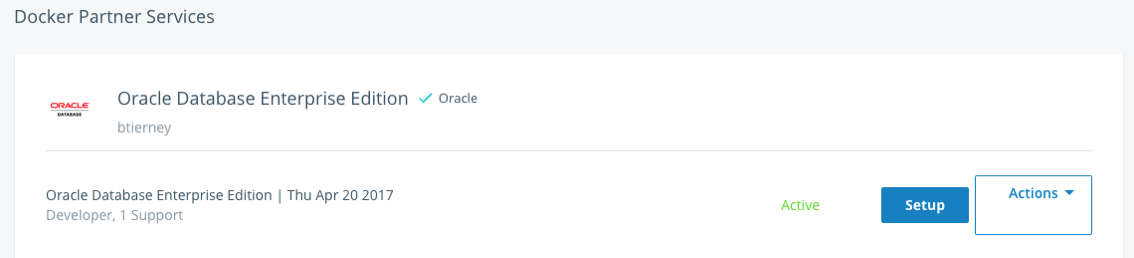

The next screen lists the Docker Services and Partner Services that you have signed up for.

Click on the Setup button to go to the webpage that contains some of the setup instructions.

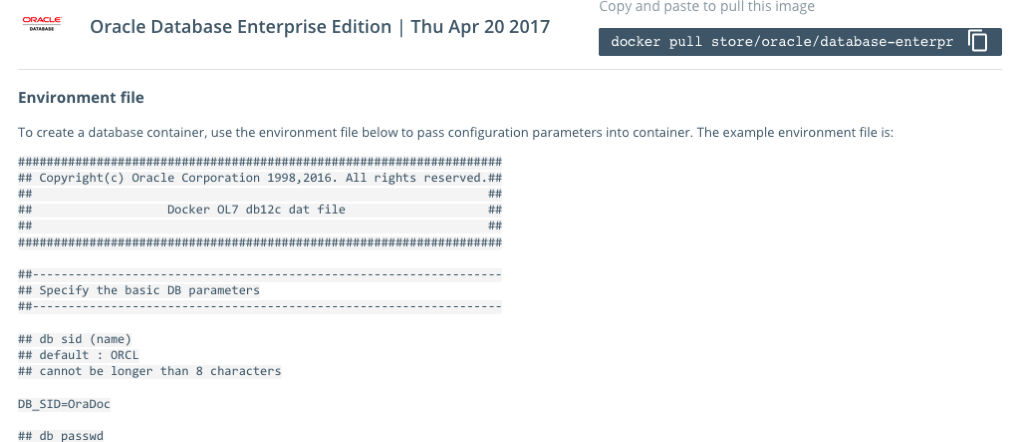

The first thing you need to do is to copy the sample Environment File. Create a new file on your laptop/desktop and paste the environment file contents into the file. There are a few edits you need to make to this file. The following is the edited/modified Environment file that I created and used. The changes are for DB_SID, DB_PASSWD and DB_DOMAIN.

#################################################################### ## Copyright(c) Oracle Corporation 1998,2016. All rights reserved.## ## ## ## Docker OL7 db12c dat file ## ## ## #################################################################### ##------------------------------------------------------------------ ## Specify the basic DB parameters ##------------------------------------------------------------------ ## db sid (name) ## default : ORCL ## cannot be longer than 8 characters DB_SID=ORCL ## db passwd ## default : Oracle DB_PASSWD=oracle ## db domain ## default : localdomain DB_DOMAIN=localdomain ## db bundle ## default : basic ## valid : basic / high / extreme ## (high and extreme are only available for enterprise edition) DB_BUNDLE=basic ## end

I called this file 'docker_ora_db.txt'

4. Download and Configure Oracle Database for Docker

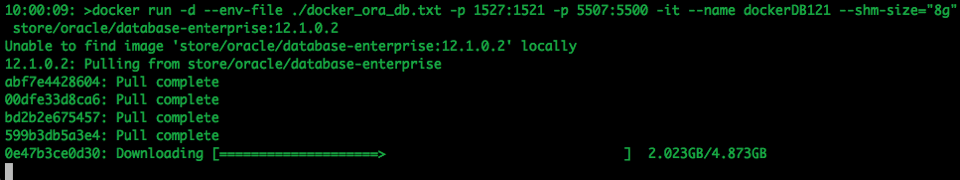

The following command will download and configure the docker image$ docker run -d --env-file ./docker_ora_db.txt -p 1527:1521 -p 5507:5500 -it --name dockerDB121 --shm-size="8g" store/oracle/database-enterprise:12.1.0.2

This command will create a container called 'dockerDB121'. The 121 at the end indicate the version number of the Oracle Database. If you end up with a number of containers containing different versions of the Oracle Database then you need some way of distinguishing them.

Take note of the port mapping in the above command, as you will need this information later.

When you run this command, the docker image will be downloaded from the docker website, will be unzipped and the container setup and ready to run.

5. Log-in and Finish the configuration

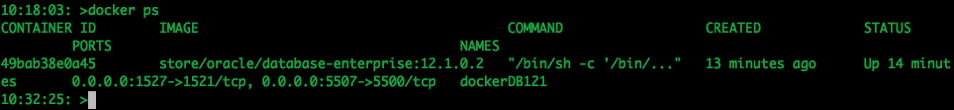

Although the docker container has been setup, there is still a database configuration to complete. The following images shows that the new containers is there.

To complete the Database setup, you will need to log into the Docker container.

docker exec -it dockerDB121 /bin/bash

Then run the Oracle Database setup and startup script (as the root user).

/bin/bash /home/oracle/setup/dockerInit.sh

This script can take a few minutes to run. On my laptop it took about 2 minutes.

When this is finished the terminal session will open as this script goes into a look.

To run any other commands in the container you will need to open another terminal session and connect to the Docker container. So go open one now.

6. Log into the Database in Docker

In a new terminal window, connect to the Docker container and then switch to the oracle user.

su - oracle

Check that the Oracle Database processes are running (ps -ef) and then connect as SYSDBA.

sqlplus / as sysdba

Let's check out the Database.

SQL> select name,DB_UNIQUE_NAME from v$database;

NAME DB_UNIQUE_NAME

--------- ------------------------------

ORCL ORCL

SQL> SELECT v.name, v.open_mode, NVL(v.restricted, 'n/a') "RESTRICTED", d.status

FROM v$pdbs v, dba_pdbs d

WHERE v.guid = d.guid

ORDER BY v.create_scn;

NAME OPEN_MODE RES STATUS

------------------------------ ---------- --- ---------

PDB$SEED READ ONLY NO NORMAL

PDB1 READ WRITE NO NORMAL

And the tnsnames.ora file contains the following:

ORCL = (DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = 0.0.0.0)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = ORCL.localdomain) ) )

PDB1 = (DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = 0.0.0.0)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = PDB1.localdomain) ) )

You are now up an running with an Docker container running an Oracle 12.1 Databases.

7. Configure SQL Developer (on Client) to access the Oracle Database on Docker

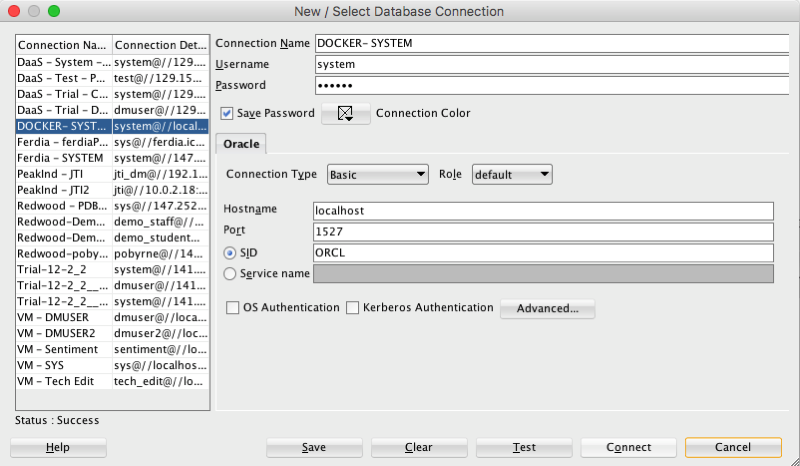

Remember that port number mapping I mentioned in step 4 above. See in this SQL Developer connection that the port number is 1527.

Thats it. How easy is that. You now have a fully configured Oracle 12.1c Enterprise Edition Database to play with, to have fun and to explore all the wonderful features of the Oracle Database.

Tuesday, April 18, 2017

ODM Model View Details Views in Oracle 12.2

A new feature for Oracle Data Mining in Oracle 12.2 is the new Model Details views.

In Oracle 11.2.0.3 and up to Oracle 12.1 you needed to use a range of PL/SQL functions (in DBMS_DATA_MINING package) to inspect the details of a data mining/machine learning model using SQL.

Check out these previous blog posts for some examples of how to use and extract model details in Oracle 12.1 and earlier versions of the database

Association Rules in ODM-Part 3

Extracting the rules from an ODM Decision Tree model

Instead of these functions there are now a lot of DB views available to inspect the details of a model. The following table summarises these various DB Views. Check out the DB views I've listed after the table, as these views might some some of the ones you might end up using most often.

I've now chance of remembering all of these and this table is a quick reference for me to find the DB views I need to use. The naming method used is very confusing but I'm sure in time I'll get the hang of them.

NOTE: For the DB Views I've listed in the following table, you will need to append the name of the ODM model to the view prefix that is listed in the table.

| Data Mining Type | Algorithm & Model Details | 12.2 DB View | Description |

|---|---|---|---|

| Association | Association Rules | DM$VR | generated rules for Association Rules |

| Frequent Itemsets | DM$VI | describes the frequent itemsets | |

| Transaction Itemsets | DM$VT | describes the transactional itemsets view | |

| Transactional Rules | DM$VA | describes the transactional rule view and transactional itemsets | |

| Classification | (General views for Classification models) | DM$VT DM$VC |

describes the target distribution for Classification models describes the scoring cost matrix for Classification models |

| Decision Tree | DM$VP DM$VI DM$VO DM$VM |

describes the DT hierarchy & the split info for each level in DT describes the statistics associated with individual tree nodes Higher level node description describes the cost matrix used by the Decision Tree build |

|

| Generalized Linear Model | DM$VD DM$VA |

describes model info for Linear Regres & Logistic Regres describes row level info for Linear Regres & Logistic Regres |

|

| Naive Bayes | DM$VP DM$VV |

describes the priors of the targets for Naïve Bayes describes the conditional probabilities of Naïve Bayes model |

|

| Support Vector Machine | DM$VL | describes the coefficients of a linear SVM algorithm | |

| Regression ??? | Doe | 80 | 50 |

| Clustering | (General views for Clustering models) | DM$VD DM$VA DM$VH DM$VR |

Cluster model description Cluster attribute statistics Cluster historgram statistics Cluster Rule statistics |

| k-Means | DM$VD DM$VA DM$VH DM$VR |

k-Means model description k-Means attribute statistics k-Means historgram statistics k-Means Rule statistics |

|

| O-Cluster | DM$VD DM$VA DM$VH DM$VR |

O-Cluster model description O-Cluster attribute statistics O-Cluster historgram statistics O-Cluster Rule statistics |

|

| Expectation Minimization | DM$VO DM$VB DM$VI DM$VF DM$VM DM$VP |

describes the EM components the pairwise Kullback–Leibler divergence attribute ranking similar to that of Attribute Importance parameters of multi-valued Bernoulli distributions mean & variance parameters for attributes by Gaussian distribution the coefficients used by random projections to map nested columns to a lower dimensional space |

|

| Feature Extraction | Non-negative Matrix Factorization | DM$VE DM$VI |

Encoding (H) of a NNMF model H inverse matrix for NNMF model |

| Singular Value Decomposition | DM$VE DM$VV DM$VU |

Associated PCA information for both classes of models describes the right-singular vectors of SVD model describes the left-singular vectors of a SVD model |

|

| Explicit Semantic Analysis | DM$VA DM$VF |

ESA attribute statistics ESA model features |

|

| Feature Section | Minimum Description Length | DM$VA | describes the Attribute Importance as well as the Attribute Importance rank |

Normalizing and Error Handling views created by ODM Automatic Data Processing (ADP)

- DM$VN : Normalization and Missing Value Handling

- DM$VB : Binning

Global Model Views

- DM$VG : Model global statistics

- DM$VS : Computed model settings

- DM$VW :Alerts issued during model creation

Each one of these new DB views needs their own blog post to explain what informations is being explained in each. I'm sure over time I will get round to most of these.

Monday, April 3, 2017

Managing memory allocation for Oracle R Enterprise Embedded Execution

When working with Oracle R Enterprise and particularly when you are using the ORE functions that can spawn multiple R processes, on the DB Server, you need to be very aware of the amount of memory that will be consumed for each call of the ORE function.

ORE has two sets of parallel functions for running your user defined R scripts stored in the database, as part of the Embedded R Execution feature of ORE. The R functions are called ore.groupApply, ore.rowApply and ore.indexApply. When using SQL there are "rqGroupApply" and rqRowApply. (There is no SQL function equivalent of the R function ore.indexApply)

For each parallel R process that is spawned on the DB server a certain amount of memory (RAM) will be allocated to this R process. The default size of memory to be allocated can be found by using the following query.

select name, value from sys.rq_config; NAME VALUE ----------------------------------- ----------------------------------- VERSION 1.5 MIN_VSIZE 32M MAX_VSIZE 4G MIN_NSIZE 2M MAX_NSIZE 20M

The memory allocation is broken out into the amount of memory allocated for Cells and NCells for each R process.

If your parallel ORE function create a large number of parallel R processes then you can see that the amount of overall memory consumed can be significant. I've seen a few customers who very quickly run out of memory on their DB servers. Now that is something you do not want to happen.

How can you prevent this from happening ?

There are a few things you need to keep in mind when using the parallel enabled ORE functions. The first one is, how many R processes will be spawned. For most cases this can be estimated or calculated to a high degree of accuracy. Secondly, how much memory will be used to process each of the R processes. Thirdly, how memory do you have available on the DB server. Fourthly, how many other people will be running parallel R processes at the same time?

Examining and answering each of these may look to be a relatively trivial task, but the complexity behind these can increase dramatically depending on the answer to the fourth point/question above.

To calculate the amount of memory used during the ORE user defined R script, you can use the R garbage function to calculate the memory usage at the start and at the end of the R script, and then return the calculated amount. Yes you need to add this extra code to your R script and then remove it when you have calculated the memory usage.

gc.start <- gc(reset=TRUE) ... gc.end <- gc() gc.used <- gc.end[,7] - gc.start[,7] # amount consumed by the processing

Using this information and the answers to the points/questions I listed above you can now look at calculating how much memory you need to allocated to the R processes. You can set this to be static for all R processes or you can use some code to allocate the amount of memory that is needed for each R process. But this starts to become messy. The following gives some examples (using R) of changing the R memory allocations in the Oracle Database. Similar commands can be issued using SQL.

> sys.rqconfigset('MIN_VSIZE', '10M') -- min heap 10MB, default 32MB

> sys.rqconfigset('MAX_VSIZE', '100M') -- max heap 100MB, default 4GB

> sys.rqconfigset('MIN_NSIZE', '500K') -- min number cons cells 500x1024, default 1M

> sys.rqconfigset('MAX_NSIZE', '2M') -- max number cons cells 2M, default 20M

Some guidelines - as with all guidelines you have to consider all the other requirements for the Database, and in reality you will have to try to find a balance between what is listed here and what is actually possible.

- Set parallel_degree_policy to MANUAL.

- Set parallel_min_servers to the number of parallel slave processes to be started when the database instances start, this avoids start up time for the R processes. This is not a problem for long running processes. But can save time with processes running for 10s seconds

- To avoid overloading the CPUs if the parallel_max_servers limit is reached, set the hidden parameter _parallel_statement_queuing to TRUE. Avoids overloading and lets processes wait.

- Set application tables and their indexes to DOP 1 to reinforce the ability of ORE to determine when to use parallelism.

Understanding the memory requirements for your ORE processes can be tricky business and can take some time to work out the right balance between what is needed by the spawned parallel R processes and everything else that is going on in the Database. There will be a lot of trial and error in working this out and it is always good to reach out for some help. If you have a similar scenario and need some help or guidance let me know.

Wednesday, March 29, 2017

OUG Ireland 2017 Presentation

Monday, March 13, 2017

Presentations from OUGN17

Friday, March 3, 2017

Blog posts on Oracle Advanced Analytics features in 12.2

A couple of days ago Oracle finally provided us with an on-premises download for Oracle 12.2 Database.

Go and download load it from here

or

Download the Database App Development VM with 12.2 (This is what I did)

Over the past couple of months I've been using the DBaaS of 12.2, trying out some of the new Advanced Analytics option new features, and other new features. Here are the links to the blog posts on these new 12.2 new features. There will be more coming over the next few months.

New OAA features in Oracle 12.2 Database

Explicit Semantic Analysis in Oracle 12.2c Database

Explicit Semantic Analysis setup using SQL and PL/SQL

and slightly related is the new SQL Developer 4.2

Monday, February 13, 2017

Join the Oracle Scene Editorial Team

Are you a member of UKOUG?

How would you like to join the editorial team of Oracle Scene magazine as a deputy editor?

If you are interested we are looking to recruit 1 deputy editor to cover the Applications area and 2 deputy editors to cover the Tech area (DBA, Developer, BA, etc)

How much time is required? about 4 hours per edition, or maybe less.

What does a deputy editor do?

As part of the editorial team you will be expected to:

- Article Review

Articles submitted are uploaded to the review panel on Basecamp. During this time the editors should become familiar with the articles and have an idea of which ones would be appropriate for publication. Time approx 1.5hrs over a 2 week period.

- Editorial Call

After the review period has closed the editors come together for an editorial call (approx 1hr) to go through the feedback received on the articles, it is the editors job to validate any comments and select which articles should be chosen for publication. Time approx 1hr.

Some articles may need further rework by the authors and the editors provide comments & instructions as to the amends needed, in some cases the editors will take on the amends themselves or if they hold the relationship with the author they may wish to approach them direct. If any articles have been held over from the previous edition, the editors will relook at the articles and if any of the content needs to be updated they will advise. If we do not have articles submitted at this stage so the editors may need to source some additional content.

- Editorial Review

Once the selected articles are edited they are passed to the designer for layout, editors will then receive a first copy of the magazine where they will read the articles relevant to them (Apps or Tech) marking up on the pdf any errors in the text or images found. We try to build in time over a weekend for this with the comments due by 9am on the Monday. This is generally the last time the editors see the magazine, the next time being the digital version. Time approx 2hrs.

- Promotion

When the digital version is ready to be sent out – the editors & review panel are notified to help raise awareness of the magazine among their network.

- Article Sourcing

Call for articles is open all year as we will just hold those submitted in between the planning timeline over to the next edition. If there are particular topics that we feel would make good articles the editors are expected to help source potential authors and of course if they see good presentations again encourage those speakers to turn their presentation in to text.

- Flying the flag

Throughout the year the editors are expected to positively “fly the flag” of Oracle Scene, as a volunteer this will include, at the annual conference, taking part in the community networking to encourage future authors amongst the community.

If you are interested in a deputy editor role then submit your application now.

Check out UKOUG webpage for more details.

Monday, January 30, 2017

Slides from the OUG Ireland meet-ups

I've finally gotten the time (and the permissions from the presenters) to make the slides from the first two OUG Ireland meet-ups available.

I've posted them on SlideShare and I've embedded them in this blog post too.

Thursday, January 26, 2017

Formatting results from ORE script in a SELECT statement

This blog post looks at how to format the output or the returned returns from an Oracle R Enterprise (ORE), user defined R function, that is run using a SELECT statement in SQL.

Sometimes this can be a bit of a challenge to work out, but it can be relatively easy once you have figured out how to do it. The following examples works through some scenarios of different results sets from a user defined R function that is stored in the Oracle Database.

To run that user defined R function using a SELECT statement I can use one of the following ORE SQL functions.

- rqEval

- rqTableEval

- "rqGroupEval"

- rqRowEval

For simplicity we will just use the first of these ORE SQL functions to illustrate the problem and how to go about solving it. The rqEval ORE SQL function is a generate purpose function to call a user defined R script stored in the database. The function does not require any input data set and but it will return some data. You could use this to generate some dummy/test data or to find some information in the database. Here is noddy example that returns my name.

BEGIN

--sys.rqScriptDrop('GET_NAME');

sys.rqScriptCreate('GET_NAME',

'function() {

res<-data.frame("Brendan")

res

} ');

END;

To call this user defined R function I can use the following SQL.

select *

from table(rqEval(null,

'select cast(''a'' as varchar2(50)) from dual',

'GET_NAME') );

For text strings returned you need to cast the returned value giving a size.

If we have a numeric value being returned we can don't have to use the cast and instead use '1' as shown in the following example. This second example extends our user defined R function to return my name and a number.

BEGIN

sys.rqScriptDrop('GET_NAME');

sys.rqScriptCreate('GET_NAME',

'function() {

res<-data.frame(NAME="Brendan", YEAR=2017)

res

} ');

END;

To call the updated GET_NAME function we now have to process two returned columns. The first is the character string and the second is a numeric.

select *

from table(rqEval(null,

'select cast(''a'' as varchar2(50)) as "NAME", 1 AS YEAR from dual',

'GET_NAME') );

These example illustrate how you can process character strings and numerics being returned by the user defined R script.

The key to setting up the format of the returned values is knowing the structure of the data frame being returned by the user defined R script. Once you know that the rest is (in theory) easy.

Monday, January 23, 2017

Oracle Data Miner 4.2 New Features

Oracle Data Miner 4.2 (part of SQL Developer 4.2) got released as an Early Adopter versions (EA) a few weeks ago.

With the new/updated Oracle Data Miner (ODMr) there are a number of new features. These can be categories as 1) features all ODMr users can use now, 2) New features that are only usable when using Oracle 12.2 Database, and 3) Updates to existing algorithms that have been exposed via the ODMr tool.

The following is a round up of the main new features you can enjoy as part of ODMr 4.2 (mainly covering points 1 and 2 above)

- You can now schedule workflows to run based on a defined schedule

- Support for additional data types (RAW, ROWID, UROWID, URITYPE)

- Better support for processing JSON data in the JSON Query node

- Additional insights are displayed as part of the Model Details View

- Additional alert monitoring and reporting

- Better support for processing in-memory data

- A new R Model node that allows you to include in-database ORE user defined R function to support model build, model testing and applying of new model.

- New Explicit Semantic Analysis node (Explicit Feature Extraction)

- New Feature Compare and Test nodes

- New workflow status profiling perfoance improvements

- Refresh the input data definition in nodes

- Attribute Filter node now allows for unsupervised attribute importance ranking

- The ability to build Partitioned data mining models

Look out for the blog posts on most of these new features over the coming months.

WARNING: Most of these new features requires an Oracle 12.2 Database.

Monday, January 16, 2017

Explicit Semantic Analysis setup using SQL and PL/SQL

In my previous blog post I introduced the new Explicit Semantic Analysis (ESA) algorithm and gave an example of how you can build an ESA model and use it. Check out this link for that blog post.

In this blog post I will show you how you can manually create an ESA model. The reason that I'm showing you this way is that the workflow (in ODMr and it's scheduler) may not be for everyone. You may want to automate the creation or recreation of the ESA model from time to time based on certain business requirements.

In my previous blog post I showed how you can setup a training data set. This comes with ODMr 4.2 but you may need to expand this data set or to use an alternative data set that is more in keeping with your domain.

Setup the ODM Settings table

As with all ODM algorithms we need to create a settings table. This settings table allows us to store the various parameters and their values, that will be used by the algorithm.

-- Create the settings table

CREATE TABLE ESA_settings (

setting_name VARCHAR2(30),

setting_value VARCHAR2(30));

-- Populate the settings table

-- Specify ESA. By default, Naive Bayes is used for classification.

-- Specify ADP. By default, ADP is not used. Need to turn this on.

BEGIN

INSERT INTO ESA_settings (setting_name, setting_value)

VALUES (dbms_data_mining.algo_name,

dbms_data_mining.algo_explicit_semantic_analys);

INSERT INTO ESA_settings (setting_name, setting_value)

VALUES (dbms_data_mining.prep_auto,dbms_data_mining.prep_auto_on);

INSERT INTO ESA_settings (setting_name, setting_value)

VALUES (odms_sampling,odms_sampling_disable);

commit;

END;

These are the minimum number of parameter setting needed to run the ESA algorithm. The other ESA algorithm setting include:

Setup the Oracle Text Policy

You also need to setup an Oracle Text Policy and a lexer for the Stopwords.

DECLARE

v_policy_name varchar2(30);

v_lexer_name varchar2(3)

BEGIN

v_policy_name := 'ESA_TEXT_POLICY';

v_lexer_name := 'ESA_LEXER';

ctx_ddl.create_preference(v_lexer_name, 'BASIC_LEXER');

v_stoplist_name := 'CTXSYS.DEFAULT_STOPLIST'; -- default stop list

ctx_ddl.create_policy(policy_name => v_policy_name, lexer => v_lexer_name, stoplist => v_stoplist_name);

END;

Create the ESA model

Once we have the settings table created with the parameter values set for the algorithm and the Oracle Text policy created, we can now create the model.

To ensure that the Oracle Text Policy is applied to the text we want to analyse we need to create a transformation list and add the Text Policy to it.

We can then pass the text transformation list as a parameter to the CREATE_MODEL, procedure.

DECLARE

v_xlst dbms_data_mining_transform.TRANSFORM_LIST;

v_policy_name VARCHAR2(130) := 'ESA_TEXT_POLICY';

v_model_name varchar2(50) := 'ESA_MODEL_DEMO_2';

BEGIN

v_xlst := dbms_data_mining_transform.TRANSFORM_LIST();

DBMS_DATA_MINING_TRANSFORM.SET_TRANSFORM(v_xlst, '"TEXT"', NULL, '"TEXT"', '"TEXT"', 'TEXT(POLICY_NAME:'||v_policy_name||')(MAX_FEATURES:3000)(MIN_DOCUMENTS:1)(TOKEN_TYPE:NORMAL)');

DBMS_DATA_MINING.DROP_MODEL(v_model_name, TRUE);

DBMS_DATA_MINING.CREATE_MODEL(

model_name => v_model_name,

mining_function => DBMS_DATA_MINING.FEATURE_EXTRACTION,

data_table_name => 'WIKISAMPLE',

case_id_column_name => 'TITLE',

target_column_name => NULL,

settings_table_name => 'ESA_SETTINGS',

xform_list => v_xlst);

END;