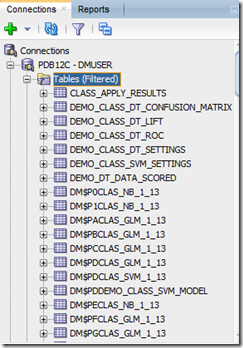

If you have been using Oracle Data Miner, which is part of SQL Developer 3 and SQL Developer 4, you will notice that your schema can fill up with tables created by your workflows. The following image gives an example.

These tables can include details of the algorithms used and their settings, sample tables created by the various nodes, and so on. Basically, they contain all the information that was set up by each node. Not every node in your workflow will create a table, but many do, particularly if you have set the Cache or Sample option in the Properties tab.

In most cases you do not need to be aware of or use most of these tables.

So how do I hide them, so that my schema table listing only shows the main tables in my schema? By main tables, I mean the tables that you would expect to have in your schema before you started using Oracle Data Miner.

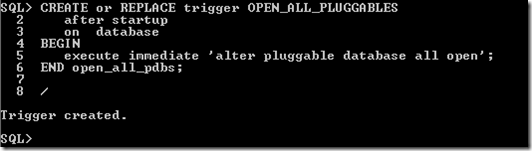

The answer to this question is to apply filters to your tables in SQL Developer. To do this, go to your schema in the Connections tab. Expand it to get the full list of schema objects and then right-click on Tables. You should get a menu like the following.

Select Apply Filter from the menu and the Apply Filter window will open. Here you can create filters to apply to the tables in your schema.

To hide the Oracle Data Miner related tables you will need to exclude tables whose names begin with DM$ or ODMR$. The following image shows these filters.

When these filters are applied we only get our schema tables.
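To make the effect of the two filters concrete, here is a minimal Python sketch of the same prefix-exclusion logic. It is not how SQL Developer implements its filters, and the sample table names are hypothetical, made up purely for illustration.

```python
# Prefixes named in the post. The sample table names are hypothetical.
ODM_PREFIXES = ("DM$", "ODMR$")


def hide_odm_tables(table_names, prefixes=ODM_PREFIXES):
    """Return only the names that do not start with an excluded prefix."""
    # str.startswith accepts a tuple, so one call checks every prefix.
    return [name for name in table_names
            if not name.upper().startswith(prefixes)]


sample = ["CUSTOMERS", "DM$P5MY_MODEL", "ODMR$WORKFLOWS", "SALES"]
visible = hide_odm_tables(sample)
print(visible)
```

Only the names that do not start with DM$ or ODMR$ survive, which is what the filtered Tables listing shows.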

There are two additional filters you may want to consider. The first of these is for the tables that begin with OUTPUT. These tables are created when a node sends the output from running a model to a table, or in some other scenario where the output is sent to a table. Strictly speaking this is bad naming, and we should use a name that is more meaningful and reflects the contents of the table. But sometimes you just want to spool the output to a table and the name is not important. I have an additional filter to not show these tables (see below).

With SQL Developer 4, Oracle Data Miner seems to generate index-organized tables (IOTs), as we can see in the above image. Another filter can be created to exclude these from the list.

Here is the full list of filters.
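If you prefer to check the result from a SQL worksheet or a script, a roughly equivalent query can be run against the `USER_TABLES` data dictionary view. The sketch below builds such a query in Python. It is an approximation under stated assumptions, not what the Apply Filter dialog generates: the function name `build_table_query` is hypothetical, and index-organized tables are excluded through the `IOT_TYPE` column of `USER_TABLES` rather than through a name pattern, because the exact name filter for them is only shown in the image.

```python
def build_table_query(prefixes=("DM$", "ODMR$", "OUTPUT"), hide_iots=True):
    """Build a query listing tables that pass the name filters.

    Assumption: the prefixes contain no LIKE wildcards ('%' or '_').
    A prefix that did would need an ESCAPE clause.
    """
    clauses = [f"table_name NOT LIKE '{prefix}%'" for prefix in prefixes]
    if hide_iots:
        # IOT_TYPE is NULL for ordinary (heap) tables in USER_TABLES.
        # This differs from the name-based filter used in the post.
        clauses.append("iot_type IS NULL")
    return ("SELECT table_name FROM user_tables WHERE "
            + " AND ".join(clauses)
            + " ORDER BY table_name")


query = build_table_query()
print(query)
```

The generated statement can be pasted into a worksheet as is. Note that `$` is an ordinary character in a `LIKE` pattern, so the prefixes need no escaping.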