You have heard about the cloud? Right? Even the Oracle Cloud?

If you haven't, then maybe we need to look at how you can learn more about the Oracle Cloud.

Over the past while, and in the past few weeks in particular, Oracle has been advertising that you can get a trial Oracle cloud service set up for FREE. Well, it is free for one month when you set it up on the Oracle website (cloud.oracle.com).

As I like to talk about and use the Oracle Advanced Analytics (OAA) option (a lot), I thought I'd just give you some pointers on how to use OAA on the Oracle cloud.

To do this you need to set up an account on the Oracle cloud website (your Oracle single sign-on should make that process a lot quicker). There are lots of websites and blogs that will talk you through the process. Then you need to select which Database as a Service (DaaS) you want to set up.

OAA is not available on the Database Schema Service just yet (maybe one day it will be).

Oracle Advanced Analytics comes pre-installed in the Oracle Enterprise Edition database when you install it on your own servers (yes, it is a separately priced option), but it is not part of the Enterprise Edition DaaS.

DaaS comes in the following versions:

- Standard Edition Service

- Enterprise Edition Service

- High Performance Service

- Extreme Performance Service

OAA is only available for these last two versions of the DaaS.

High Performance DaaS: Multitenant, Partitioning, Real Application Testing, Advanced Compression, Advanced Security, Label Security, Database Vault, OLAP, Advanced Analytics, Spatial and Graph, Diagnostics Pack, Tuning Pack, Database Lifecycle Management Pack, Data Masking & Subsetting Pack and Cloud Management Pack for Oracle Database.

Extreme Performance DaaS: In-Memory Database, RAC (Real Application Clusters), Active Data Guard, Multitenant, Partitioning, Real Application Testing, Advanced Compression, Advanced Security, Label Security, Database Vault, OLAP, Advanced Analytics, Spatial and Graph, Diagnostics Pack, Tuning Pack, Database Lifecycle Management Pack, Data Masking & Subsetting Pack and Cloud Management Pack for Oracle Database.
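To make the difference between the two editions easier to see, here is a minimal Python sketch that treats the two option lists above as sets. The option names are copied from the lists; the variable and function names are my own and purely illustrative.

```python
# Options bundled with the High Performance DaaS, copied from the list above.
HIGH_PERFORMANCE = {
    "Multitenant", "Partitioning", "Real Application Testing",
    "Advanced Compression", "Advanced Security", "Label Security",
    "Database Vault", "OLAP", "Advanced Analytics", "Spatial and Graph",
    "Diagnostics Pack", "Tuning Pack", "Database Lifecycle Management Pack",
    "Data Masking & Subsetting Pack",
    "Cloud Management Pack for Oracle Database",
}

# Extreme Performance is the same list plus three extra options.
EXTREME_PERFORMANCE = HIGH_PERFORMANCE | {
    "In-Memory Database", "RAC (Real Application Clusters)", "Active Data Guard",
}


def includes_oaa(options):
    """Return True if the given set of options contains Advanced Analytics."""
    return "Advanced Analytics" in options


# What you gain by moving from High Performance to Extreme Performance.
extra = sorted(EXTREME_PERFORMANCE - HIGH_PERFORMANCE)
print(extra)
print(includes_oaa(HIGH_PERFORMANCE), includes_oaa(EXTREME_PERFORMANCE))
```

So if OAA is all you are after, the High Performance DaaS already has it; Extreme Performance only adds In-Memory, RAC and Active Data Guard on top.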

Oracle Advanced Analytics has two main products or components. The first is the in-database Oracle Data Mining features. These are part of the High Performance and Extreme Performance DaaS offerings. The second, Oracle R Enterprise (ORE), is not installed on these DaaS. Well, it kind of is if you can get an 11g DaaS, but at the time of writing this post ORE is not part of the 12c DaaS images. So you will need to factor in some time to go and install ORE, if you need to use it.

I've been lucky to have one of these DaaS with OAA trials, and thanks to Thomas Kurian these trials have been extended to 12 months for all Oracle ACE Directors. Thank you, Thomas.

When you get your DaaS set up you just need to configure your connection privileges, SSH, etc. and away you go. All you need to do is move your data across the internet to your own Oracle DaaS, and once it is in the DaaS all your OAA and other analytics are performed on the Database Server. Only the results are returned to you and displayed in your tool. This significantly reduces the processing time for your data and removes the need to constantly extract your data (in whole or in part) to feed into other advanced analytics tools.
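To give a feel for what "performed on the Database Server" means, here is a rough Python sketch that builds the kind of SQL you would send to the DaaS. `PREDICTION` and `PREDICTION_PROBABILITY` are the Oracle Data Mining SQL scoring functions; the model name `CHURN_MODEL`, the table `CUSTOMERS`, the column `CUSTOMER_ID` and the helper function itself are all made up for illustration, and the sketch assumes a classification model already exists in your schema.

```python
def build_scoring_query(model_name, table_name, id_column):
    """Return SQL that scores every row of table_name inside the database."""
    for name in (model_name, table_name, id_column):
        # Identifiers cannot be passed as bind variables, so do a simple
        # sanity check on them before placing them in the SQL text.
        if not name.replace("_", "").isalnum():
            raise ValueError(f"unsafe identifier: {name!r}")
    return (
        f"SELECT {id_column}, "
        f"PREDICTION({model_name} USING *) AS predicted_value, "
        f"PREDICTION_PROBABILITY({model_name} USING *) AS probability "
        f"FROM {table_name}"
    )


# Hypothetical names, purely for illustration.
sql = build_scoring_query("CHURN_MODEL", "CUSTOMERS", "CUSTOMER_ID")
print(sql)
```

You would run the resulting statement through whatever client you normally use (SQL Developer, SQL*Plus, a Python driver, and so on). The scoring happens where the data lives, and only the id, the prediction and its probability come back over the wire.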

So if you haven't tried Oracle Advanced Analytics yet, then go ahead and set up your free trial of Oracle DaaS and try it out. You never know what you might discover by using Oracle Advanced Analytics (in the cloud).

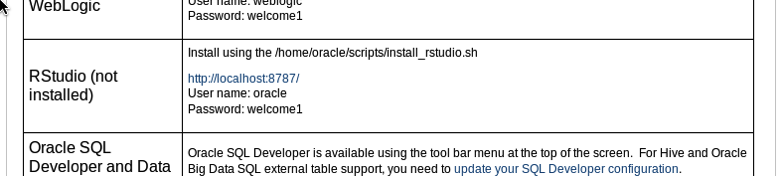

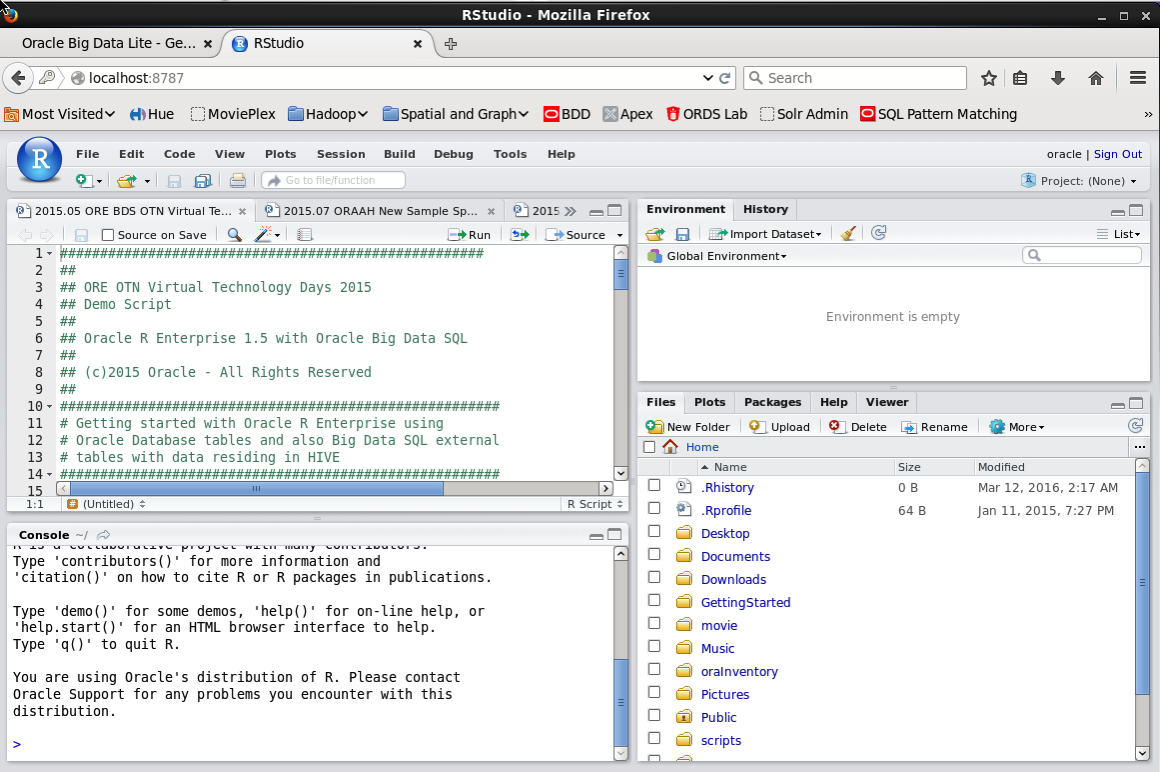

Log in using your Server username and password. This is oracle/oracle on the VM.
