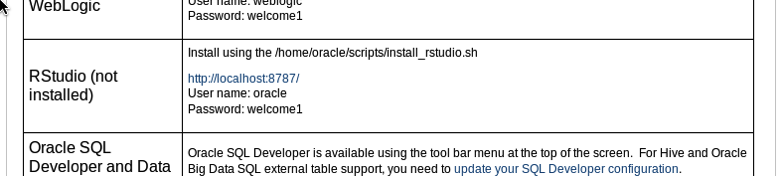

In a previous blog post I showed how to install and get started with RStudio on a server using RStudio Server, on the Oracle BigDataLite VM. On that VM everything was nicely scripted and set up for you. When it comes to installing it on a different server, however, things can be a bit different.

The purpose of this blog post is to go through the install steps you need to follow on your own server or Oracle Database server. The following steps are based on a server set up with Oracle Linux (I'm actually using the Oracle DB Developer VM).

1. Download the latest version of RStudio Server.

Use the following command to download RStudio Server. Before you do, check the RStudio website for the current version number and substitute it into the file name. The example below uses version 0.99.892.

wget https://download2.rstudio.org/rstudio-server-rhel-0.99.892-x86_64.rpm

You should see output similar to the following when you run this command.

--2016-03-16 06:22:30-- https://download2.rstudio.org/rstudio-server-rhel-0.99.892-x86_64.rpm

Resolving download2.rstudio.org (download2.rstudio.org)... 54.192.28.107, 54.192.28.54, 54.192.28.12, ...

Connecting to download2.rstudio.org (download2.rstudio.org)|54.192.28.107|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 38814908 (37M) [application/x-redhat-package-manager]

Saving to: ‘rstudio-server-rhel-0.99.892-x86_64.rpm’

100%[============================================================>] 38,814,908 6.54MB/s in 6.0s

2016-03-16 06:22:37 (6.17 MB/s) - ‘rstudio-server-rhel-0.99.892-x86_64.rpm’ saved [38814908/38814908]
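Because the version number appears in both the URL and the file name, it can help to keep it in one place when you repeat this on other servers. The following is a minimal bash sketch, assuming the download link keeps the same pattern as the one above; the function names and the `RSTUDIO_VERSION` variable are hypothetical, introduced only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: set the version once and reuse it for the
# RPM file name and the download URL.
RSTUDIO_VERSION="${RSTUDIO_VERSION:-0.99.892}"   # version used in this post

# File name pattern copied from the download link above
rstudio_rpm_name() {
  echo "rstudio-server-rhel-${1}-x86_64.rpm"
}

# Full download URL for a given version
rstudio_rpm_url() {
  echo "https://download2.rstudio.org/$(rstudio_rpm_name "$1")"
}

# Download the RPM into the current directory; -c lets wget resume a
# partial download instead of starting again
download_rstudio() {
  wget -c "$(rstudio_rpm_url "$1")"
}
```

For example, after loading these functions, `download_rstudio "$RSTUDIO_VERSION"` runs the same download as the wget command above.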

2. Install RStudio Server

sudo yum install --nogpgcheck rstudio-server-rhel-0.99.892-x86_64.rpm

When prompted whether it is OK to install, enter y (see the "Is this ok [y/d/N]: y" line in the output below).

Loaded plugins: langpacks

Examining rstudio-server-rhel-0.99.892-x86_64.rpm: rstudio-server-0.99.892-1.x86_64

Marking rstudio-server-rhel-0.99.892-x86_64.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package rstudio-server.x86_64 0:0.99.892-1 will be installed

--> Finished Dependency Resolution

ol7_UEKR3/x86_64 | 1.2 kB 00:00:00

ol7_addons/x86_64 | 1.2 kB 00:00:00

ol7_latest/x86_64 | 1.4 kB 00:00:00

ol7_optional_latest/x86_64 | 1.2 kB 00:00:00

Dependencies Resolved

===========================================================================================================

Package Arch Version Repository Size

===========================================================================================================

Installing:

rstudio-server x86_64 0.99.892-1 /rstudio-server-rhel-0.99.892-x86_64 280 M

Transaction Summary

===========================================================================================================

Install 1 Package

Total size: 280 M

Installed size: 280 M

Is this ok [y/d/N]: y

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : rstudio-server-0.99.892-1.x86_64 1/1

groupadd: group 'rstudio-server' already exists

rsession: no process found

ln -s '/etc/systemd/system/rstudio-server.service' '/etc/systemd/system/multi-user.target.wants/rstudio-server.service'

rstudio-server.service - RStudio Server

Loaded: loaded (/etc/systemd/system/rstudio-server.service; enabled)

Active: active (running) since Wed 2016-03-16 10:46:00 PDT; 1s ago

Process: 3191 ExecStart=/usr/lib/rstudio-server/bin/rserver (code=exited, status=0/SUCCESS)

Main PID: 3192 (rserver)

CGroup: /system.slice/rstudio-server.service

├─3192 /usr/lib/rstudio-server/bin/rserver

└─3205 /usr/lib64/R/bin/exec/R --slave --vanilla -e cat(R.Version()$major,R.Version()$minor,~+~sep=".")

Mar 16 10:46:00 localhost.localdomain systemd[1]: Started RStudio Server.

Verifying : rstudio-server-0.99.892-1.x86_64 1/1

Installed:

rstudio-server.x86_64 0:0.99.892-1

Complete!

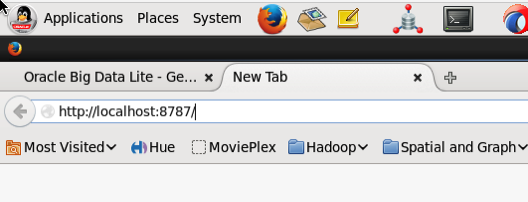
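If you want to script the install, yum's `-y` option answers the prompt for you, and systemd can confirm afterwards that the service is running. This is a minimal sketch, assuming a systemd-based Oracle Linux server like the one above (whose output shows the `rstudio-server.service` unit); the function names are hypothetical. Note that `--nogpgcheck`, as in the command above, skips package signature verification.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a scripted install on a systemd-based server.

# Install a local RPM without the interactive [y/d/N] prompt.
install_rstudio() {
  local rpm="$1"
  # Fail early with a clear message if the RPM has not been downloaded
  if [ ! -f "$rpm" ]; then
    echo "RPM not found: $rpm" >&2
    return 1
  fi
  # --nogpgcheck matches the command above and skips signature checks
  sudo yum install -y --nogpgcheck "$rpm"
}

# Returns success (0) only if systemd reports the unit as active;
# --quiet suppresses output so only the exit status is used.
rstudio_is_running() {
  systemctl is-active --quiet rstudio-server
}
```

Usage: `install_rstudio rstudio-server-rhel-0.99.892-x86_64.rpm && rstudio_is_running && echo "RStudio Server is running"`.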

3. Open RStudio using a web browser.

Open your favourite web browser and enter the host name or IP address of your server, followed by the RStudio Server port number, which is 8787 by default. In my example I'm using the Oracle DB Developer VM to demonstrate the install, so I can use localhost: http://localhost:8787

Log in using your Linux username and password on the server. On the VM this is oracle/oracle.

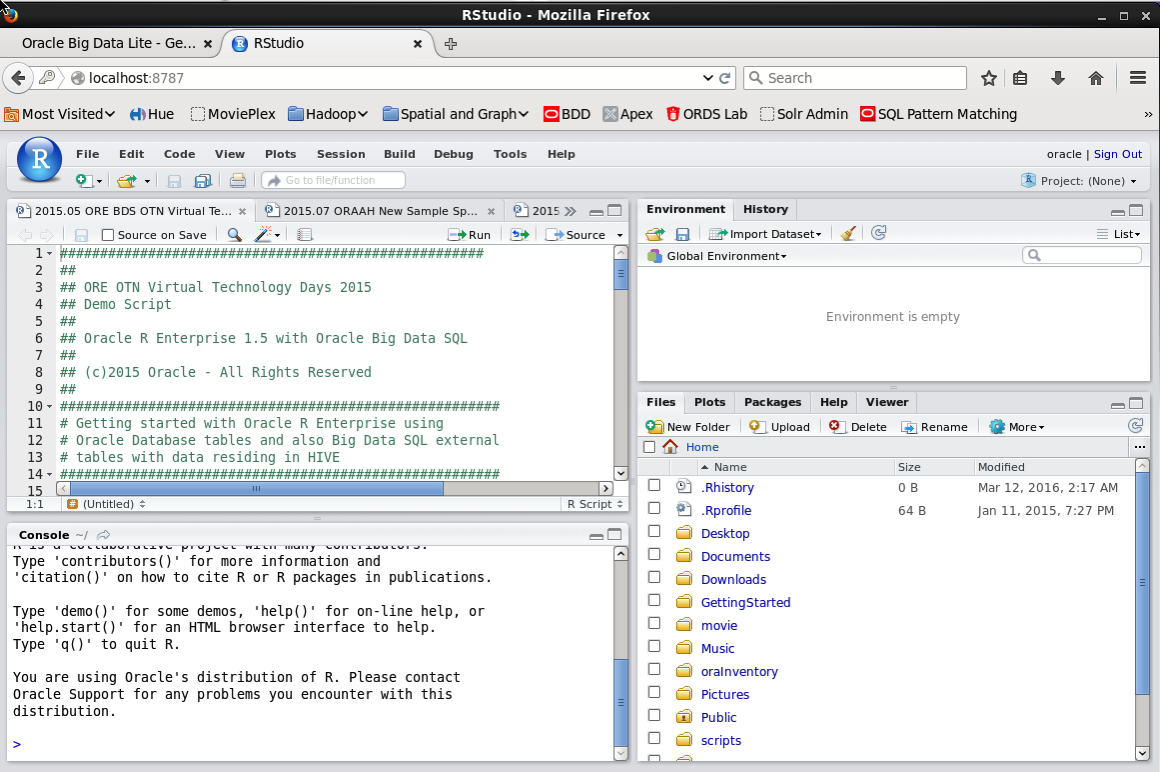
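Before opening a browser, you can check from the command line that something is answering on the RStudio Server port. This is a minimal sketch, assuming `curl` is installed and RStudio Server is listening on its default port 8787; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Build the RStudio Server URL; host defaults to localhost and
# port to 8787 (RStudio Server's default).
rstudio_url() {
  local host="${1:-localhost}" port="${2:-8787}"
  echo "http://${host}:${port}"
}

# Succeeds if an HTTP response comes back within 5 seconds.
# Without -f, curl exits 0 for any HTTP response (including a redirect
# to the login page) and non-zero if the connection fails.
rstudio_reachable() {
  curl -s -o /dev/null --max-time 5 "$(rstudio_url "$@")"
}
```

For example, `rstudio_reachable && echo "RStudio Server is up at $(rstudio_url)"` on the server itself, or `rstudio_reachable myserver` from another machine (where `myserver` stands in for your server's host name).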

4. Use and Enjoy

Once you are logged in to RStudio Server, you will see a screen something like the following!

Job done. Enjoy!

5. An Extra Step if you are using the Oracle DB Developer VM

If you want to use RStudio on the Oracle DB Developer VM from your local OS, you will need to forward port 8787 from your local machine to the VM. To do this, first power down the VM if it is running. Then open the Network section of the VM settings (I'm using VirtualBox) and click on the Port Forwarding button. Add a rule that forwards host port 8787 to guest port 8787.

Click OK to save your port forwarding rule, and then click OK again to close the Network settings for the VM.
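If you prefer the command line, the same kind of rule can be added from your local machine with VirtualBox's `VBoxManage` tool while the VM is powered off. This is a sketch, assuming the VM's first network adapter uses NAT and that the VM is registered under the hypothetical name "Oracle DB Developer VM" (run `VBoxManage list vms` to see the actual name); the rule name `rstudio` and the function names are also just examples.

```shell
#!/usr/bin/env bash
# Build a VirtualBox NAT port-forwarding rule in the format
#   name,protocol,host-ip,host-port,guest-ip,guest-port
# Leaving host-ip and guest-ip empty uses VirtualBox's defaults.
natpf_rule() {
  local name="$1" host_port="$2" guest_port="$3"
  echo "${name},tcp,,${host_port},,${guest_port}"
}

# Add the rule to network adapter 1 of a powered-off VM.
# The default VM name is an assumption; check it with: VBoxManage list vms
forward_rstudio_port() {
  local vm="${1:-Oracle DB Developer VM}"
  VBoxManage modifyvm "$vm" --natpf1 "$(natpf_rule rstudio 8787 8787)"
}
```

With the VM powered off, `forward_rstudio_port "<your VM name>"` should have the same effect as adding the rule through the Port Forwarding dialog.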

Now start up the VM. Once it has loaded and the desktop is displayed in the VM window, you should be able to connect to RStudio in the VM from your local machine.

To do this, open the web browser on your local machine and go to:

http://localhost:8787

You should now see the RStudio login screen shown in step 3 above. Go ahead, log in and enjoy.

6. A little warning

Make sure to log out of RStudio when you have finished using it. If you don't, your R environment may not be saved and you will get a message the next time you log in. We don't want that happening, so just log out of RStudio using the sign-out option in the top right-hand corner of the RStudio Server application.

In one more blog post I will show how you can configure RStudio Server to work with an Oracle Database server that has Oracle R Enterprise installed.