In my previous posts I gave sample code showing how you can use your ODM model to score new data.

Applying an ODM Model to new data in Oracle – Part 2

Applying an ODM Model to new data in Oracle – Part 1

The examples in those posts assumed that the new data was stored in a table.

In some scenarios the data you want to score is not in a table. For example, you may want to score data as it is being recorded, before it is committed to the database.
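As a sketch of that scenario, a BEFORE INSERT row trigger can score each row while it is being inserted, so the score is written in the same transaction as the data and before any commit. It uses the PREDICTION and PREDICTION_PROBABILITY functions described in the rest of this post. The table customer_capture, its columns and the trigger name are hypothetical, and the column types are assumed to match the literal values used in the examples below. Because these are SQL functions, the trigger evaluates them with a SELECT ... INTO against DUAL.

```sql
-- Hypothetical table receiving data as it is recorded.
-- Column types are assumptions chosen to match the literals used in this post.
CREATE TABLE customer_capture (
  cust_id             NUMBER PRIMARY KEY,
  age                 NUMBER,
  cust_marital_status VARCHAR2(20),
  education           VARCHAR2(21),
  household_size      NUMBER,
  yrs_residence       NUMBER,
  y_box_games         NUMBER,
  scored_value        NUMBER,   -- populated by the trigger
  probability_value   NUMBER    -- populated by the trigger
);

-- Hypothetical trigger: scores each row before it is stored,
-- inside the inserting transaction (i.e. before COMMIT).
CREATE OR REPLACE TRIGGER customer_capture_score
BEFORE INSERT ON customer_capture
FOR EACH ROW
BEGIN
  SELECT prediction(clas_decision_tree
           USING :NEW.age                 AS age,
                 :NEW.cust_marital_status AS cust_marital_status,
                 :NEW.education           AS education,
                 :NEW.household_size      AS household_size,
                 :NEW.yrs_residence       AS yrs_residence,
                 :NEW.y_box_games         AS y_box_games),
         -- No target value given, so this is the probability of the predicted class
         prediction_probability(clas_decision_tree
           USING :NEW.age                 AS age,
                 :NEW.cust_marital_status AS cust_marital_status,
                 :NEW.education           AS education,
                 :NEW.household_size      AS household_size,
                 :NEW.yrs_residence       AS yrs_residence,
                 :NEW.y_box_games         AS y_box_games)
    INTO :NEW.scored_value, :NEW.probability_value
    FROM dual;
END;
/

-- Usage: the row is scored as part of the INSERT itself
INSERT INTO customer_capture
  (cust_id, age, cust_marital_status, education,
   household_size, yrs_residence, y_box_games)
VALUES (1, 20, 'NeverM', 'HS-grad', 1, 2, 1);

SELECT scored_value, probability_value
FROM customer_capture
WHERE cust_id = 1;
```

A trigger keeps the scoring next to the data, at the cost of running the model on every insert; an application could equally run the same SELECT against DUAL itself before writing the row.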

The format of the commands to use is:

prediction(ODM_Model_Name USING <list of values to be used and the model attribute each one maps to>)

prediction_probability(ODM_Model_Name, Target_Value USING <list of values to be used and the model attribute each one maps to>)

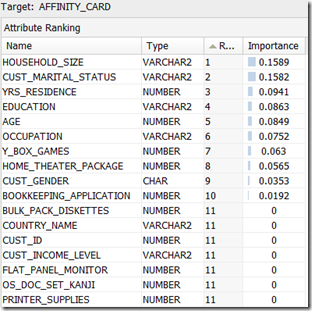

So, instead of the USING * clause we used in the previous blog posts, we list each value and the model attribute it maps to.
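The values in the USING list do not have to be literals: each entry is an expression followed by AS and the model attribute name, so an application can pass bind variables holding the values it has just captured. A minimal SQL*Plus sketch, assuming the same clas_decision_tree model; the bind variable names (b_age and so on) are illustrative only.

```sql
-- SQL*Plus bind variables standing in for values captured by an application
VARIABLE b_age        NUMBER
VARIABLE b_marital    VARCHAR2(20)
VARIABLE b_education  VARCHAR2(21)
VARIABLE b_household  NUMBER
VARIABLE b_residence  NUMBER
VARIABLE b_ybox       NUMBER

EXEC :b_age := 20
EXEC :b_marital := 'NeverM'
EXEC :b_education := 'HS-grad'
EXEC :b_household := 1
EXEC :b_residence := 2
EXEC :b_ybox := 1

-- Each bind variable is mapped to a model attribute with AS
SELECT prediction(clas_decision_tree
         USING :b_age       AS age,
               :b_marital   AS cust_marital_status,
               :b_education AS education,
               :b_household AS household_size,
               :b_residence AS yrs_residence,
               :b_ybox      AS y_box_games) AS scored_value
FROM dual;
```

Using binds rather than literals also lets the database reuse the same parsed statement for every new set of values.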

Using the same sample data as in my previous posts, the command would be:

    Select prediction(clas_decision_tree
             USING
               20 as age,
               'NeverM' as cust_marital_status,
               'HS-grad' as education,
               1 as household_size,
               2 as yrs_residence,
               1 as y_box_games) as scored_value
    from dual;

    SCORED_VALUE
    ------------
               0

    Select prediction_probability(clas_decision_tree, 0
             USING
               20 as age,
               'NeverM' as cust_marital_status,
               'HS-grad' as education,
               1 as household_size,
               2 as yrs_residence,
               1 as y_box_games) as probability_value
    from dual;

    PROBABILITY_VALUE
    -----------------
                    1

So we get the same result as we got in our previous examples.
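The two functions can also be combined in a single query over the same values. In this sketch the third column omits the target value; in that form PREDICTION_PROBABILITY returns the probability of the predicted class rather than of a class you name. The column aliases are illustrative.

```sql
SELECT prediction(clas_decision_tree
         USING 20 AS age, 'NeverM' AS cust_marital_status, 'HS-grad' AS education,
               1 AS household_size, 2 AS yrs_residence, 1 AS y_box_games)
         AS scored_value,
       -- Probability that the target is 0 (the class is named explicitly)
       prediction_probability(clas_decision_tree, 0
         USING 20 AS age, 'NeverM' AS cust_marital_status, 'HS-grad' AS education,
               1 AS household_size, 2 AS yrs_residence, 1 AS y_box_games)
         AS probability_of_0,
       -- No class given: probability of whichever class was predicted
       prediction_probability(clas_decision_tree
         USING 20 AS age, 'NeverM' AS cust_marital_status, 'HS-grad' AS education,
               1 AS household_size, 2 AS yrs_residence, 1 AS y_box_games)
         AS probability_of_prediction
FROM dual;
```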

Depending on what data we have gathered, we may not have values for all of the attributes used in the model. In that case we can pass a subset of the values to the function and still get a result.

    Select prediction(clas_decision_tree
             USING
               20 as age,
               'NeverM' as cust_marital_status,
               'HS-grad' as education) as scored_value2
    from dual;

    SCORED_VALUE2
    -------------
                0

    Select prediction_probability(clas_decision_tree, 0
             USING
               20 as age,
               'NeverM' as cust_marital_status,
               'HS-grad' as education) as probability_value2
    from dual;

    PROBABILITY_VALUE2
    ------------------
                     1

Again we get the same results.
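Getting the same answer here does not guarantee that the omitted attributes never matter; that depends on which attributes the model actually relies on (a decision tree, for example, may split on only some of its inputs). A simple way to check for a particular set of values is to compute both probabilities side by side, as in this sketch using the same values as above; the column aliases are illustrative.

```sql
SELECT prediction_probability(clas_decision_tree, 0
         USING 20 AS age, 'NeverM' AS cust_marital_status, 'HS-grad' AS education,
               1 AS household_size, 2 AS yrs_residence, 1 AS y_box_games)
         AS prob_all_attributes,
       -- Same model and class, but only the three attributes we have values for
       prediction_probability(clas_decision_tree, 0
         USING 20 AS age, 'NeverM' AS cust_marital_status, 'HS-grad' AS education)
         AS prob_subset
FROM dual;
```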