Before beginning any data mining task we need to perform some data investigation. This allows us to explore the data and gain a better understanding of the data values. We can discover a lot by doing this. It can help us to identify areas for improvement in the source applications, to identify data that does not contribute to our business problem (removing such data is called feature reduction), and to identify data that needs reformatting into a number of additional features (feature creation). A simple example is a date of birth field. On its own it provides no real value, but by deriving additional attributes (features) from it we can determine what age group each customer fits into.
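As a rough illustration of feature creation, the sketch below derives an age and an age group from a date of birth. It is not part of Oracle Data Miner. The function names, the reference date and the band edges are all assumptions chosen for the example.

```python
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Return the number of whole years between dob and today."""
    years = today.year - dob.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years


def age_group(age: int) -> str:
    """Map an age to a coarse band. The band edges are illustrative only."""
    if age < 25:
        return "under 25"
    if age < 35:
        return "25-34"
    if age < 50:
        return "35-49"
    if age < 65:
        return "50-64"
    return "65+"


# Hypothetical customer and reference date, made up for this example.
dob = date(1975, 6, 15)
as_of = date(2011, 1, 1)
age = age_on(dob, as_of)
print(age, age_group(age))
```

The raw date of birth has been turned into two features that an algorithm can use directly. In practice the bands would be chosen after looking at the data, as described later in this post.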

As with most of Oracle Data Miner 11gR2, the Data Exploration interface is new. In this blog post I will talk you through how to set up and use the new Data Exploration interface, and show you how you can use the data exploration features to gain an understanding of the data before you begin using the data mining algorithms.

The examples given here are based on my previous blog posts, and we will use the same sample data sets that were set up as part of the install and configuration.

See my other blog post and videos on installing and setting up Oracle Data Miner.

Data Set-up

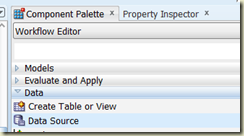

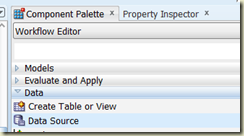

Before we can begin the data exploration we need to identify the data we are going to use. To do this, select the Data tab from the Component Palette, and then select Data Source.

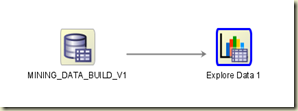

To create the Data node on our workflow, click and drag the Data Source onto the workflow. Select MINING_DATA_BUILD_V and select all the data.

The next step is to create the Explore Data node on our workflow. From the Data tab in the Component Palette, select and drag the Explore Data node onto the workflow. Now we need to link the Data node to the Explore Data node.

Right-click on the Explore Data node and click Run. This will make the ODM tool go to the database and analyse the data that is specified in our Data node. The analysis results will be used in the Explore Data node.

Exploring the Data

When the Explore Data node has finished we can look at the data it has generated. Right-click the Explore Data node and select View Data.

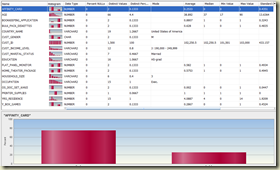

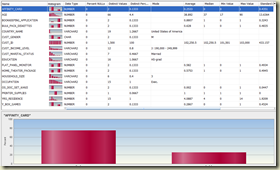

A lot of statistical information has been generated for each of the attributes in our Data node. In addition to the statistical information we also get a histogram of the attribute distributions.

We can work through each attribute taking the statistical data and the histograms to build up a picture of the data.
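To give a feel for what a per-attribute profile contains, here is a minimal sketch in plain Python. It does not reproduce the exact set of statistics that ODM reports. The `profile` function and the sample values are assumptions made up for illustration.

```python
import statistics
from collections import Counter


def profile(values):
    """Summarise one attribute: counts, plus numeric statistics when applicable."""
    present = [v for v in values if v is not None]
    summary = {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "mode": Counter(present).most_common(1)[0][0] if present else None,
    }
    # Only numeric attributes get min, max, mean and standard deviation.
    if present and all(isinstance(v, (int, float)) for v in present):
        summary["min"] = min(present)
        summary["max"] = max(present)
        summary["mean"] = statistics.mean(present)
        summary["stdev"] = statistics.stdev(present) if len(present) > 1 else 0.0
    return summary


# Made-up sample values, not taken from MINING_DATA_BUILD_V.
ages = [34, 41, None, 29, 41, 52]
genders = ["M", "F", "M", "M", "F", "M"]
print(profile(ages))
print(profile(genders))
```

Reading these numbers alongside the histogram is what lets us spot attributes with many nulls, very few distinct values, or a heavily skewed distribution.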

The data we are using is for an Electronics Goods store.

A few interesting things in the data are:

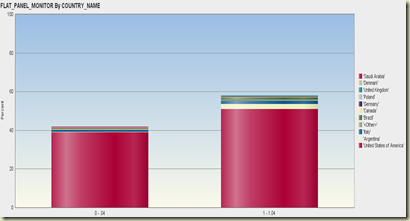

- 90% of the data comes from the United States of America

- The PRINTER_SUPPLIES attribute has only one value. We can eliminate this from our data set as it will not contribute to the data mining algorithms

- Similarly for OS_DOC_SET_KANJI, which also has only one value
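Attributes that hold a single value can also be found with a quick scan of the data. The sketch below assumes the rows are available as Python dictionaries. The `single_valued` function and the sample rows are made up for illustration and are not an ODM feature.

```python
def single_valued(rows):
    """Return the names of attributes with at most one distinct non-null value."""
    distinct = {}
    for row in rows:
        for name, value in row.items():
            seen = distinct.setdefault(name, set())
            if value is not None:
                seen.add(value)
    return sorted(name for name, seen in distinct.items() if len(seen) <= 1)


# Made-up rows that mimic the situation described above.
rows = [
    {"AGE": 34, "COUNTRY_NAME": "United States of America", "PRINTER_SUPPLIES": 1},
    {"AGE": 41, "COUNTRY_NAME": "Spain", "PRINTER_SUPPLIES": 1},
    {"AGE": 29, "COUNTRY_NAME": "United States of America", "PRINTER_SUPPLIES": 1},
]
print(single_valued(rows))
```

Any attribute returned by this check is a candidate for feature reduction, because it cannot help an algorithm tell one record from another.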

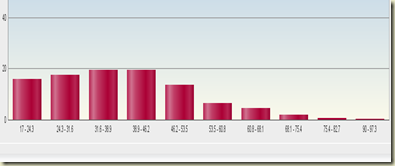

The histograms are based on a predetermined number of bins. This is initially set to 10, but you may need to change this value up or down to see if a pattern exists in the data.

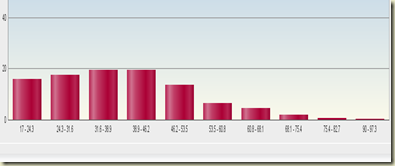

An example of this is if we select AGE and set the number of bins to 10. We get a nice histogram showing that most of our customers are in the 31 to 46 age range. So maybe we should be concentrating on these.

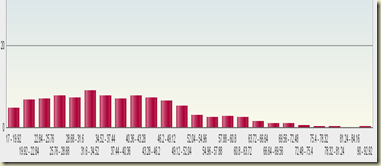

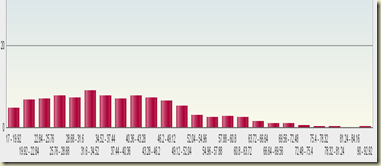

Now if we change the number of bins to 25 we get a completely different picture of what is going on in the data.

To change the number of bins we need to go to the Workflow pane and select the Property Inspector. Scroll down to the Histogram section and change the Numerical Bins to 25. You then need to rerun the Explore Data node.
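The effect of the bin count can be reproduced outside the tool. The sketch below uses simple equal-width binning, which is an assumption on my part and may not match how ODM computes its bins. The `histogram` function and the sample ages are made up for illustration.

```python
def histogram(values, bins):
    """Count values in `bins` equal-width bins spanning min(values)..max(values)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        # The maximum value is placed in the last bin rather than a new one.
        idx = min(int((v - lo) / width), bins - 1) if width else 0
        counts[idx] += 1
    return counts


# Made-up ages with two clusters that a coarse binning hides.
ages = [22, 23, 23, 24, 30, 31, 31, 32, 38, 39, 45, 46, 46, 47]
print(histogram(ages, 2))   # coarse view
print(histogram(ages, 10))  # finer view of the same data
```

With few bins neighbouring ages are added together and the distribution looks smooth. With more bins the individual peaks and gaps become visible, which is the change we see when moving from 10 to 25 bins.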

Now we can see that there are a number of important age groups that stand out more than others. If we look at the 31 to 46 age range in the first histogram, we can see that there is not much change between the age bins. But when we look at the second histogram, with 25 bins, for the same 31 to 46 age range we get a very different view of the data. In this second histogram we see that the ages of the customers vary a lot.

What does this mean? It can mean lots of different things, and it all depends on the business scenario. In our example we are looking at an electronic goods store. What we can deduce from this second histogram is that there are a small number of customers up to about age 23. Then there is an increase. Is this due to people getting their main job after school and having some disposable income? This peak is followed by a drop-off in customers, followed by another peak, another drop-off, and so on. Maybe we can build a profile of our customers based on their age, just like financial organisations do to determine what products to sell to us based on our age and life stage.

Conclusions on the data

From this histogram we can maybe categorise the customers into the following groups:

• Early 20s – out of education, first job, disposable income

• Late 20s to early 30s – settling down, own home

• Late 30s – maybe kids, so have less disposable income

• 40s – maybe people are trading up and need new equipment. Or maybe the kids have now turned into teenagers and are encouraging their parents to buy up-to-date equipment.

• Late 50s – These could be empty nesters whose children have left home, maybe setting up home by themselves, and the parents are buying things for their home. Or maybe the parents are treating themselves to new equipment as they have more disposable income

• 60s + – parents and grand-parents buying equipment for their children and grand-children. Or maybe we have very techie people who have just retired

• 70+ – we have a drop off here.

As you can see, we can discover a lot in the data by changing the number of bins and examining the results. The important part of this examination is trying to relate what you are seeing in the graphical representation of the data on the screen back to the type of business you are examining. A lot can be discovered, but you will have to spend some time looking for it.

ODM 11gR2 Extra Data Exploration Functionality

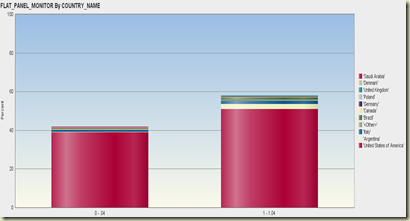

In ODM 11gR2 we now have an extra feature for our data analysis. We can now produce histograms that are grouped by one of the other attributes. Typically this would be the Target or Class attribute, but you can also use it with the other attributes.

To set this extra feature, double-click on the Explore Data node. The Group By drop-down lets you select the attribute you want to group the other attributes by.

Using our example data, the target variable is AFFINITY_CARD. Select this in the drop-down and run the Explore Data node again. When you look at the newly generated histograms you will now see that each bin has two colours. If you hover the mouse over each coloured part you will get the number of records in each group. You can use other attributes, such as CUST_GENDER, COUNTRY_NAME, etc. Only group by attributes where the resulting breakdown would make sense for your analysis.
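The numbers behind a grouped histogram are simply a count per bin for each value of the Group By attribute. The sketch below shows the idea with equal-width bins, which is an assumption and may not match ODM's binning. The `grouped_histogram` function and the sample rows are made up for illustration.

```python
from collections import Counter


def grouped_histogram(rows, attribute, group_by, bins):
    """Return one Counter per equal-width bin, keyed by the group_by value."""
    values = [row[attribute] for row in rows]
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [Counter() for _ in range(bins)]
    for row in rows:
        # The maximum value is placed in the last bin rather than a new one.
        idx = min(int((row[attribute] - lo) / width), bins - 1) if width else 0
        counts[idx][row[group_by]] += 1
    return counts


# Made-up rows using attribute names from the sample data set.
rows = [
    {"AGE": 20, "AFFINITY_CARD": 0},
    {"AGE": 30, "AFFINITY_CARD": 1},
    {"AGE": 40, "AFFINITY_CARD": 1},
    {"AGE": 40, "AFFINITY_CARD": 0},
]
for i, per_group in enumerate(grouped_histogram(rows, "AGE", "AFFINITY_CARD", 2)):
    print(i, dict(per_group))
```

Each Counter corresponds to one bar in the histogram, and each key in it corresponds to one coloured segment of that bar.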

This is a powerful new feature that allows you to gain a deeper level of insight into the data you are analysing.

Brendan Tierney