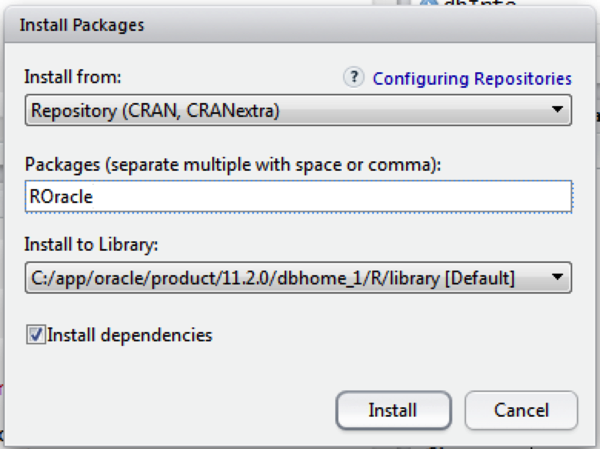

After you have installed ORE on your client and server (see my previous blog posts on these), you are now ready to start getting your hands dirty with ORE. During the installation of ORE you set up a test schema in your database to test that you can make a connection. But you will not be using this schema for your ORE work. Typically you will want to use one of your existing schemas that contains the data in the relevant tables and views. Plus you will want to be able to create some tables, create some ORE temporary objects and stores, and store some of your ORE scripts in the schema that contains the data. In a previous blog post I gave the steps needed to set up your schema to be able to use ORE and the embedded R execution feature.

In this post I want to show you the commands you will need to get connected to your Oracle schema, along with some initial commands you will need to know and use.

ore.connect command

The first command that you need to use is the ore.connect command that allows you to establish a connection to a database schema. You can also use this command to connect to HIVE tables in a Hadoop cluster.

The general syntax of the ore.connect function is

ore.connect(user = "", sid = "", host = "localhost", password = "",

port = 1521, service_name = NULL, conn_string = NULL,

all = FALSE, type = c("ORACLE", "HIVE"))

You only need to specify some of these parameters. For a basic connection you do not need to specify conn_string, type or all. The following is an example of using this function to connect to a schema called DMUSER that is located in a database whose SID is ORCL and whose host is the localhost.

> ore.connect(user="dmuser", sid="orcl", host="localhost", password="dmuser", port=1521, all=TRUE);

> ore.ls()

[1] "DEMO_R_APPLY_RESULT" "DEMO_R_TABLE" "DEMO_SUBSET_TABLE" "INSUR_CUST_LTV_SAMPLE"

[5] "MINING_DATA_APPLY" "MINING_DATA_APPLY_V" "MINING_DATA_BUILD_V" "MINING_DATA_TEST_V"

[9] "MINING_DATA_TEXT_APPLY_V" "MINING_DATA_TEXT_BUILD_V" "MINING_DATA_TEXT_TEST_V"

I generally explicitly include the all=TRUE. The reason for this might become clear below when I show the alternative.

When you use all=TRUE, the ore.connect function will also run the ore.sync and ore.attach functions. This will result in synchronising and attaching all tables and views in the ORE schema. The amount of time to run ore.sync grows linearly with the number of visible tables and views.

If you already have an ORE connection open and you try to establish a new ORE connection then your already existing ORE connection will be automatically disconnected. So you will need to be careful with the sequencing of your ORE code.
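The ore.connect calls in this post hard-code the password, which is fine for a demo but awkward in scripts that get shared. As a minimal sketch, the connection arguments could be built from environment variables instead. The helper name ore_connect_args and the variable names ORE_USER, ORE_PASSWORD, ORE_SID and ORE_HOST are my own assumptions for illustration and are not part of ORE.

```r
# Hypothetical helper (not part of ORE): build the ore.connect() arguments
# from environment variables so the password is not stored in the script.
# The ORE_* variable names are assumptions made for this example.
ore_connect_args <- function(all = TRUE) {
  password <- Sys.getenv("ORE_PASSWORD")
  if (!nzchar(password)) stop("ORE_PASSWORD is not set")
  list(
    user     = Sys.getenv("ORE_USER", "dmuser"),    # falls back to the demo values
    sid      = Sys.getenv("ORE_SID", "orcl"),
    host     = Sys.getenv("ORE_HOST", "localhost"),
    password = password,
    port     = 1521,
    all      = all
  )
}

# Usage (needs library(ORE) and a reachable database):
# do.call(ore.connect, ore_connect_args(all = TRUE))
```

The helper only assembles a list, so it can be checked without a database. The actual connection is still made by ore.connect with the same parameters used elsewhere in this post.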

Your schema might have lots and lots of objects. As you work on building your advanced analytics environment you will end up building many more. You can imagine that over time, each new connection will take longer and longer to establish. The following commands create a connection to the schema, but this time the database objects are not synced or attached, as shown using the ore.ls function.

> ore.connect(user="dmuser", sid="orcl", host="localhost", password="dmuser", port=1521, all=FALSE);

> ore.ls()

character(0)

If you use all=FALSE, as shown in the above example, you will need to call the ore.sync function to synchronise the meta-data and then the ore.attach function to add the synchronised objects to the search path of the local R environment.
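Putting those steps together, the sequence might look something like the following sketch. It assumes library(ORE) has already been loaded in the session and reuses the demo connection details from this post. The function name connect_selected is hypothetical and only there to keep the steps in one place.

```r
# Assumes library(ORE) is already loaded in this session.
# connect_selected() is a hypothetical helper name, not an ORE function.
connect_selected <- function(tables, password) {
  # Connect without synchronising or attaching anything.
  ore.connect(user = "dmuser", sid = "orcl", host = "localhost",
              password = password, port = 1521, all = FALSE)
  ore.sync(table = tables)  # synchronise meta-data for the listed objects only
  ore.attach()              # add the synchronised objects to the R search path
  ore.ls()                  # list what is now visible
}

# Usage:
# connect_selected(c("MINING_DATA_BUILD_V", "MINING_DATA_TEST_V"), password = "dmuser")
```

With only a couple of objects synchronised, the connection step should stay quick even when the schema holds many tables and views.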

ore.is.connected

You can use this command to check whether your ORE connection is live or not. As you develop your ORE scripts you might build in various connections and disconnections, so this command is very useful for checking your current status. If you have an open ORE connection you will get a response of TRUE. If you don't, you will get a response of FALSE.

> ore.is.connected()

[1] FALSE

After we establish our ORE connection, the next time we run this command it returns TRUE.

> ore.is.connected()

[1] TRUE

The following commands can be used to check to see if we have a connection and if not then establish a connection and list the objects in the schema.

> if (!ore.is.connected())

+ ore.connect(user="dmuser", sid="orcl", host="localhost", password="dmuser", port=1521, all=TRUE)

> ore.ls()

[1] "DEMO_R_APPLY_RESULT" "DEMO_R_TABLE" "DEMO_SUBSET_TABLE"

[4] "INSUR_CUST_LTV_SAMPLE" "MINING_DATA_APPLY" "MINING_DATA_APPLY_V"

[7] "MINING_DATA_BUILD_V" "MINING_DATA_TEST_V" "MINING_DATA_TEXT_APPLY_V"

[10] "MINING_DATA_TEXT_BUILD_V" "MINING_DATA_TEXT_TEST_V"

> ore.disconnect()

NOTE: Some of the documentation seems to refer to the command is.ore.connected(). This generates an error and seems to be a mistake in some of the ORE documentation.

ore.ls()

The ore.ls() command is used to find out what objects you have in your schema. It lists the tables and views in the schema that have been synchronised with your ORE session, which is why it returned character(0) in the all=FALSE example earlier.

> ore.ls()

[1] "DEMO_R_APPLY_RESULT" "DEMO_R_TABLE" "DEMO_SUBSET_TABLE"

[4] "INSUR_CUST_LTV_SAMPLE" "MINING_DATA_APPLY" "MINING_DATA_APPLY_V"

[7] "MINING_DATA_BUILD_V" "MINING_DATA_TEST_V" "MINING_DATA_TEXT_APPLY_V"

[10] "MINING_DATA_TEXT_BUILD_V" "MINING_DATA_TEXT_TEST_V"

ore.sync

The ore.sync() command is used to synchronise the meta-data about the objects in the Oracle schema with the ORE environment. When no parameters are passed, the meta-data for all the tables and views is synchronised.

> ore.sync()

There are a few variants of this command. These include specifying a list of tables and specifying a schema. These become increasingly important as the number of objects (tables and views) in your schema increases. Realistically, as your analytical environment grows so will the number of objects, and therefore the ore.sync() command will take longer and longer to run. So instead of synchronising all of the objects, you can synchronise only the objects that you need.

The following command synchronises the meta-data for the listed tables.

ore.sync(table = c("MINING_DATA_BUILD_V", "MINING_DATA_TEST_V", "INSUR_CUST_LTV_SAMPLE"))

If you want to synchronise objects from another schema you can specify the schema name. Only the objects that you have privileges on will be synchronised.

ore.sync("SH")
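As a rough sketch, the schema and table arguments can be used in the same call, so that only a few objects from the other schema are synchronised. This assumes the Oracle SH sample schema is installed, that your user has SELECT privileges on its CUSTOMERS and SALES tables, and that library(ORE) is loaded with a connection open. The helper name sync_from_schema is hypothetical, and passing a schema name to ore.ls is my understanding of the function rather than something shown earlier in this post.

```r
# Assumes library(ORE) is loaded and ore.connect() has already been called.
# sync_from_schema() is a hypothetical helper name, not an ORE function.
sync_from_schema <- function(schema = "SH", tables = c("CUSTOMERS", "SALES")) {
  ore.sync(schema, table = tables)  # synchronise only the listed objects from that schema
  ore.attach(schema)                # make them visible on the R search path
  ore.ls(schema)                    # list the synchronised objects for that schema
}

# Usage:
# sync_from_schema("SH", c("CUSTOMERS", "SALES"))
```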

ore.attach

After you have run the ore.sync() command you can add the synchronised objects to the R search path, so that you can access and use them by name. To do this you need to run the ore.attach() command.

> ore.sync()

> ore.attach()

The ORE objects are added at position 2. This means that they are listed after the local R workspace objects and before all previously attached packages and environments. You cannot change the position to 1, but you can change it to 3, for example:

> ore.attach("DMUSER", 3)

NOTE: If you use all=TRUE in your ore.connect command then the connection will automatically execute the ore.sync() and ore.attach() commands.
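To see where the ORE objects ended up after an explicit ore.attach() call, the base R search() function can be used to print the search path. The following is a small sketch that assumes library(ORE) is loaded, a connection is open and ore.sync() has been run. The helper name attach_at is hypothetical.

```r
# Assumes library(ORE) is loaded, a connection is open and ore.sync() has run.
# attach_at() is a hypothetical helper name, not an ORE function.
attach_at <- function(schema = "DMUSER", pos = 2L) {
  ore.attach(schema, pos)  # schema name first, then the search path position
  search()                 # base R: the search path as a character vector
}

# Usage:
# attach_at("DMUSER", 3L)
```

The entry for the attached schema should appear at the requested position in the returned vector, with .GlobalEnv always first.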

ore.rm

The ore.rm() command can be used to remove an object from the R search space. This does not remove or delete the object from your ORE schema.

> ore.rm("MINING_DATA_BUILD_V")
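Because ore.rm() only affects the R side, the object can be brought back by synchronising it again. The sketch below checks this with ore.exists(), which as far as I know reports whether a named object is present in the ORE schema environment. It assumes library(ORE) is loaded and a connection is open, and remove_and_resync is a hypothetical helper name.

```r
# Assumes library(ORE) is loaded and ore.connect() has already been called.
# remove_and_resync() is a hypothetical helper name, not an ORE function.
remove_and_resync <- function(name) {
  ore.rm(name)                 # remove the object from the R side only
  removed <- !ore.exists(name) # is it gone from the ORE environment?
  ore.sync(table = name)       # the table or view is still in the database
  restored <- ore.exists(name) # is it visible again after the sync?
  c(removed = removed, restored = restored)
}

# Usage:
# remove_and_resync("MINING_DATA_BUILD_V")
```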

Disconnecting & Other things

ore.disconnect

After you have finished all your ORE work you will need to disconnect from your schema. To do this you can use the ore.disconnect function. As part of the ore.disconnect function, all temporary objects created during the session will be removed from your schema in the database. The ore.disconnect function does not return a value. If you are unsure whether the disconnect has worked then you can use the ore.is.connected command.

> ore.disconnect()

> ore.is.connected()

[1] FALSE

If you exit from your R session or application an implicit ore.disconnect will be issued. But it is always good practice to issue this command yourself.
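One way to make sure the disconnect always happens, even when a script fails part of the way through, is to register it with on.exit() inside a function. This is only a sketch: it assumes library(ORE) is loaded, and the wrapper name with_ore_connection is hypothetical. It only disconnects a connection that it opened itself, so an existing connection is left alone.

```r
# Assumes library(ORE) is already loaded in this session.
# with_ore_connection() is a hypothetical wrapper, not an ORE function.
with_ore_connection <- function(work, ...) {
  if (!ore.is.connected()) {
    ore.connect(...)                       # arguments are passed straight to ore.connect()
    on.exit(ore.disconnect(), add = TRUE)  # runs on normal exit and on error
  }
  work()
}

# Usage:
# with_ore_connection(function() ore.ls(),
#                     user = "dmuser", sid = "orcl", host = "localhost",
#                     password = "dmuser", port = 1521, all = TRUE)
```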

OREShowDoc()

The OREShowDoc() command will open a web browser and display the home page of the ORE documentation. This will be the ORE documentation on your machine. My test machine is a VM with ORE installed on it, so the home page will be based on the ORE installation directories on the server. My laptop is a Mac and ORE is currently not supported on the Mac, so I was not able to test how this works on the client.

> OREShowDoc()