Over the past 6-8 months I've been working on a project with the Veterinary School of Medicine, part of University of Dublin. The project focused on using Machine Learning to find patterns in blood tests and x-rays from dogs suffering from Irritable Bowel Syndrome (IBS).

Over the past five years the Veterinary School of Medicine has built up a very large data set of blood samples, x-rays, family histories of the dogs, eating habits of the dogs, etc.

This project has finally been completed, and it is only now that I can share with you some of the results and the lessons we learned along the way.

The first part of the project was getting all this historical data loaded into an Oracle 12.2c database. This was a relatively simple task, but it did take a bit of coding to get the data model correct and to perform the necessary data transformations and integration, as illustrated in the following diagram.

Once the data was loaded into the database we could start using the in-database Machine Learning algorithms to find patterns that indicated if a dog was suffering from IBS, and ideally if it was in the early stages of IBS. Treatment has a higher success rate when IBS is detected early.

We began using the GUI Oracle Data Miner tool, part of SQL Developer, to build the predictive model workflows.

But the results we were getting were very disappointing. We were getting an average predictive accuracy of 56% across all the models. This is not good, and not much better than flipping a coin.
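To make the "flipping a coin" comparison concrete, here is a minimal Python sketch of how you might check a model's accuracy against a naive baseline that always predicts the most common class. This is not code from the project. The function names and the labels are made up purely for illustration, and the real models were built in Oracle Data Miner rather than Python.

```python
from collections import Counter


def accuracy(actual, predicted):
    """Fraction of predictions that match the actual labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    matches = sum(1 for a, p in zip(actual, predicted) if a == p)
    return matches / len(actual)


def majority_baseline(actual):
    """Accuracy achieved by always predicting the most common label."""
    most_common_count = Counter(actual).most_common(1)[0][1]
    return most_common_count / len(actual)


# Made-up labels for illustration only (1 = IBS, 0 = no IBS); not project data.
actual = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 1, 0, 1, 0, 1, 1, 0, 0, 0]

model_acc = accuracy(actual, predicted)
baseline_acc = majority_baseline(actual)
lift = model_acc - baseline_acc  # how much better than the naive guess

print(f"model: {model_acc:.0%}, baseline: {baseline_acc:.0%}, lift: {lift:.0%}")
```

If the lift over the baseline is only a few percentage points, as it was for us, the model has learned very little from the data.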

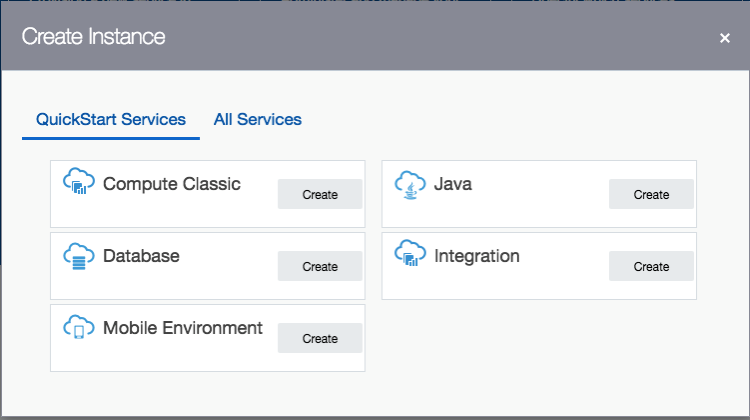

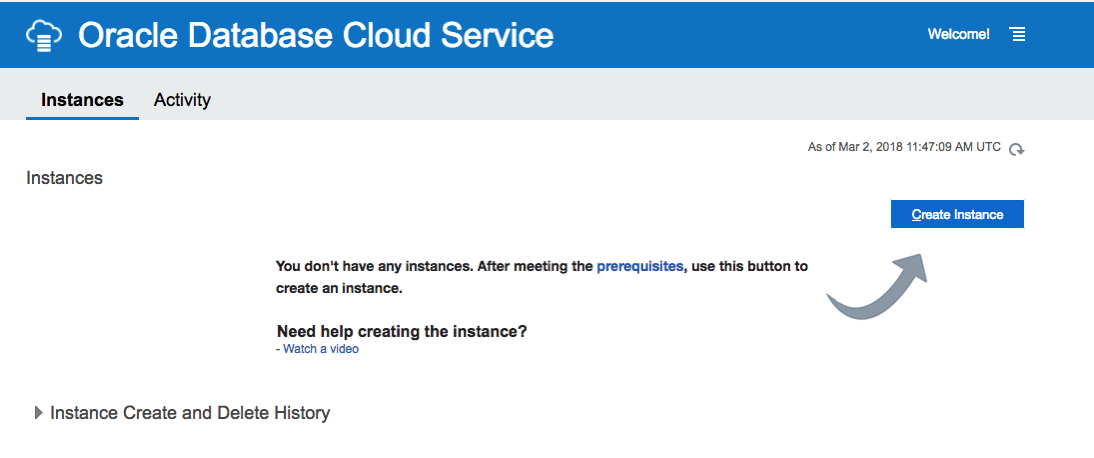

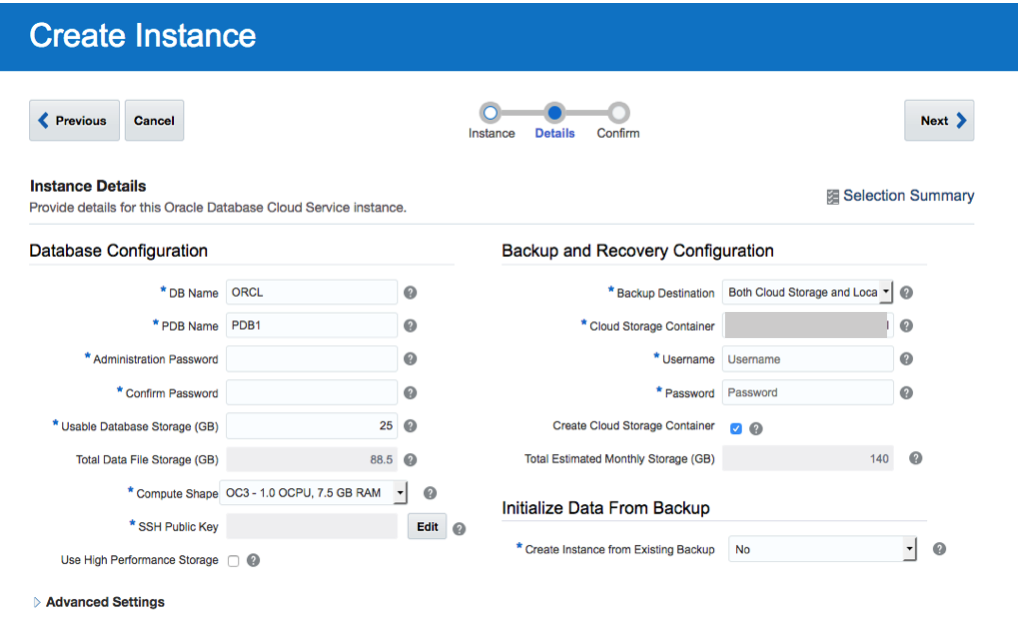

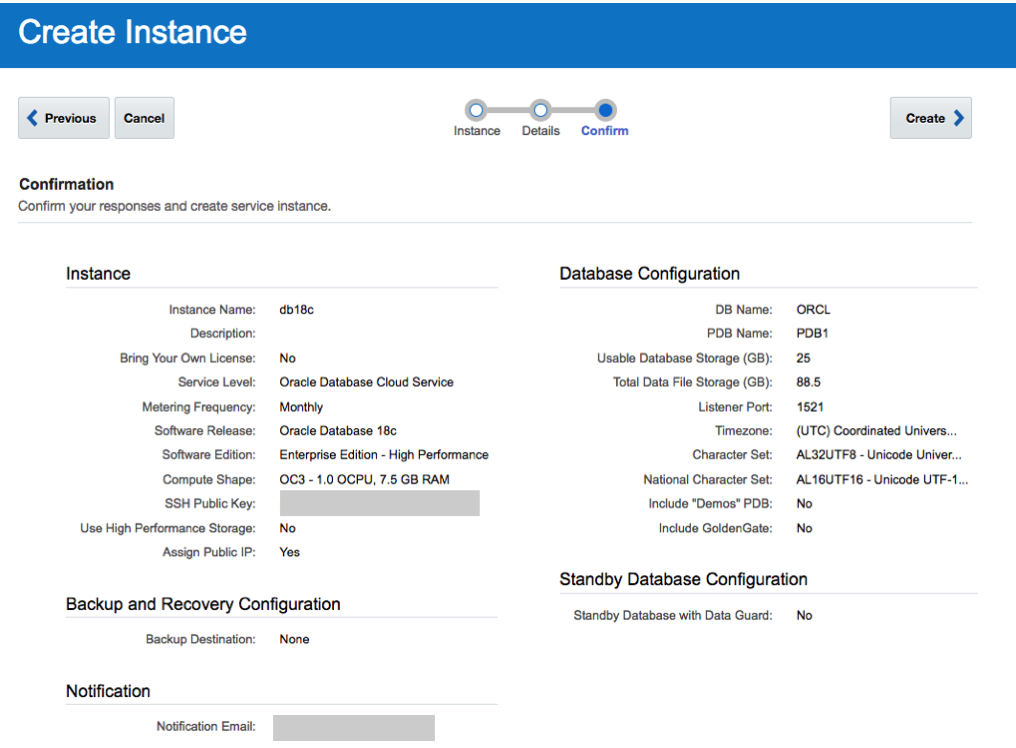

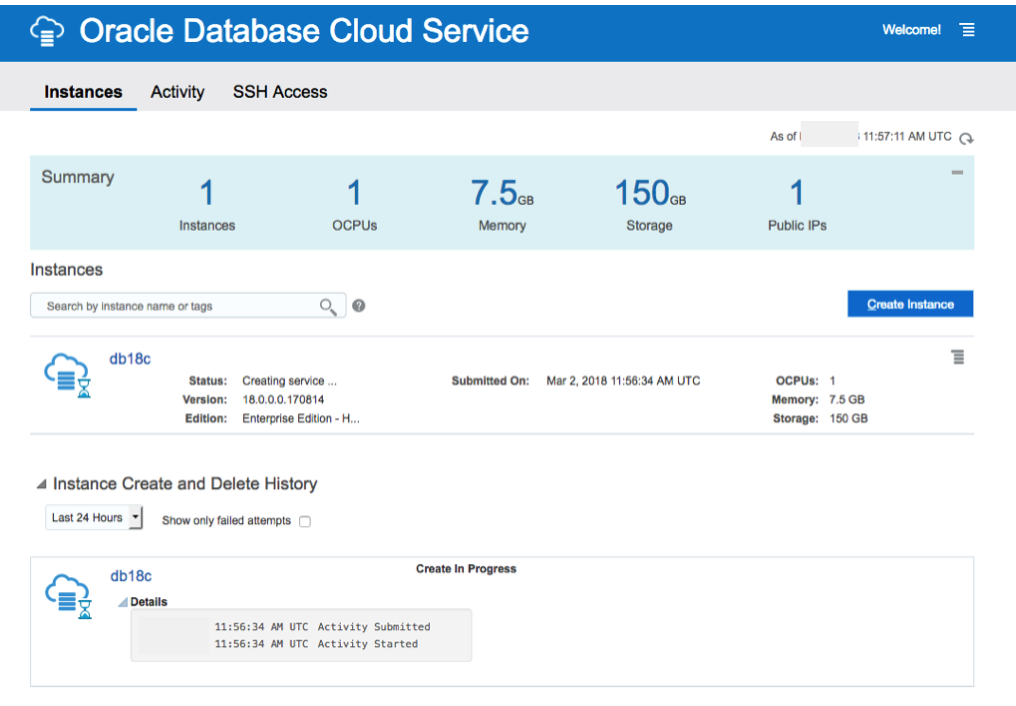

We were a bit stuck at this point about what to do next, and then Oracle's Cloud Engagement team heard about our troubles and suggested that we join the Oracle 18c beta. They got us set up and running with a beta Oracle 18c cloud instance in no time, and within a couple of days we were generating new machine learning models.

Oracle 18c has a number of new features that Oracle thought would be useful to us. Firstly, they had a new and improved Neural Networks algorithm that had better accuracy when working with images stored as BLOBs, plus there were a number of new SQL analytic functions. One particular function was TO_DOG_YEAR(). A year in a dog's life is not 365 days: the length of a dog year changes with the age and the breed of the dog. Some recent research indicates that the geographic location and origin of the dog also play a part.

The syntax of this function is

    TO_DOG_YEAR (
        DOB        DATE,
        FEMALE     BOOLEAN,
        NLS_BREED  STRING )

If you do a bit of googling you will find other blog posts that discuss this new function. Some of these can be found here and here.

Oracle has been working with veterinary schools around the world on this problem and hence the introduction of this new SQL analytic function in Oracle 18c. This function accepts the DOB of the dog (DATE), if the dog is Female, and the Breed of the dog. It then calculates the appropriate age of the dog down to the nearest day. We built this into our machine learning workflow, and were very surprised by the outcomes. The predictive accuracy of the models went from 56% to 93%. That is an amazing jump. Perhaps too amazing. But after a few days of extra validation we concluded the difference was down to the new TO_DOG_YEAR() function and the ability to so accurately calculate the age of the dog.

In the last few weeks we have noticed, after the latest Oracle 18c patch was automatically applied, that this function now has an additional parameter. This is OWN_SMOKE, and it seems to indicate if the dog is owned by someone who smokes. This will indeed affect the age of the animal. We haven't had a chance to try this new parameter yet, but hope to soon.

The following diagram shows the updated workflow along with the transformation node that uses the TO_DOG_YEAR() function.

If you do a bit of googling you will find lots of research by various veterinary schools around the world, who spend so much time researching the various aspects that apply to this calculation. It was this research and Oracle's involvement in previous research that resulted in the TO_DOG_YEAR() function being included in Oracle 18c.

A more detailed research paper is going to be published in the International Journal of Veterinary Science and Medicine in June 2018 (Volume 6, Issue 1). This paper will explain in more detail the effects that age, breed and sex have on the accuracy of the machine learning models.

We have also been asked to submit our project to Oracle Open World, and it is currently being considered for early selection. This will allow OOW to include this project in their promotional material.

Update 2nd April

A little update for those who didn’t realize this was posted on the 1st of April. It was a shared April Fool idea that some Oracle Community buddies came up with on the post-UKOUG_TECH17 trip. And what remains true all year round is that this community is full of awesome people. Check out some of the other posts.

Franck Pachot: After IoT, IoP makes its way to the database

Martin Berger: tested performance

Pieter Van Puymbroeck: realized it was offloaded in Exadata

Øyvind Isene: provides a way to test it with a cloud discount

Connor McDonald/AskTom: yes they also joined in with the fun

I've been selected to give one of these talks, and I've given this talk at some live Oracle Code events and at JavaOne back in October.

The presentation is pre-recorded; I recorded the video back in September.

I hope to be online at the end of some of these presentations to answer any questions, but unfortunately due to changes with my work commitments I may not be able to be online for all of them.

The moderator for these events will take your questions (or you can send them to me here) and I will write a blog post answering all your questions.
