In a previous blog post I introduced HiveMall as a SQL based machine learning language available for Hadoop and integrated with Hive.

If you have your own Hadoop/Big Data environment, I provided the installation instructions for HiveMall in that blog post.

An alternative is to use Docker. There is a HiveMall Docker image available. A little warning before using this image: it isn't updated with each new release, but seems to get updated twice a year. Although you may not be running the latest version of HiveMall, you will have a working environment with almost all of the functionality, bar a few minor new features and bug fixes.

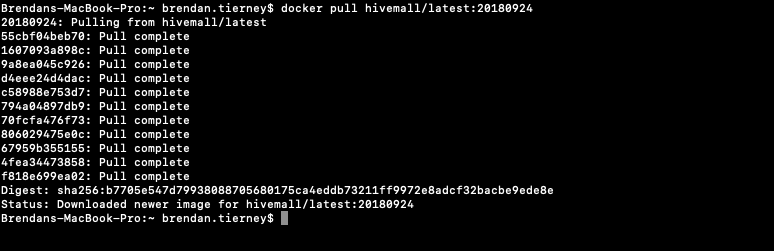

To get started, make sure you have Docker running on your machine and that you are logged into your account. The Docker image is available from Docker Hub. Take note of the version number for the latest version of the image. In this example it is 20180924.

Open a terminal window and run the following command. This will download and extract all the image files.

docker pull hivemall/latest:20180924

Wait until everything has completed.

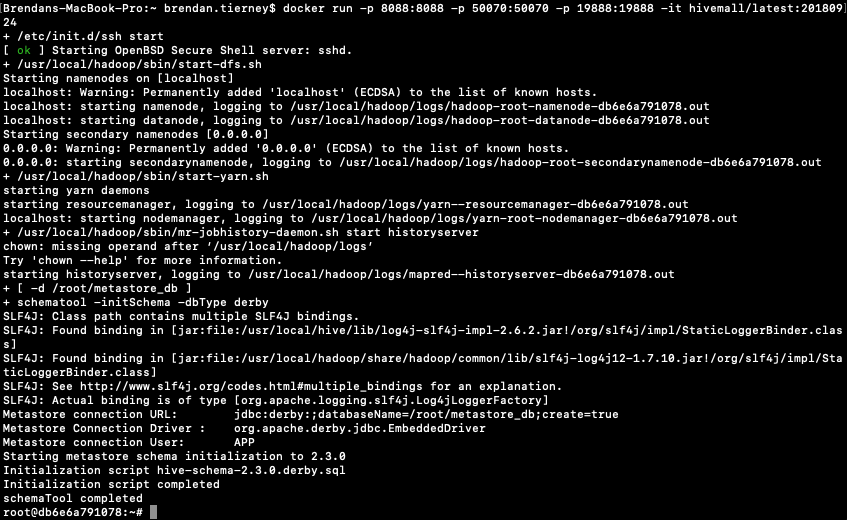

This Docker image has HDFS, YARN and MapReduce installed and running. To reach these services from your machine, their ports need to be exposed: 8088 (YARN ResourceManager), 50070 (HDFS NameNode) and 19888 (MapReduce JobHistory server).

To start the HiveMall Docker image, run

docker run -p 8088:8088 -p 50070:50070 -p 19888:19888 -it hivemall/latest:20180924

Consider creating a shell script for this, to make it easier each time you want to run the image.
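A minimal sketch of such a shell script is shown here. The file name `run_hivemall.sh`, the `HIVEMALL_TAG` variable and the `print`/`start` arguments are my own assumptions for illustration and are not part of the image. The `docker run` command itself is the one given above.

```shell
#!/bin/sh
# run_hivemall.sh: hypothetical wrapper around the docker run command above.
# The tag defaults to the one pulled earlier; override it with HIVEMALL_TAG.
HIVEMALL_TAG="${HIVEMALL_TAG:-20180924}"
IMAGE="hivemall/latest:${HIVEMALL_TAG}"

# Any arguments are placed in front of the command, so
# `hivemall_docker echo` prints it and `hivemall_docker` runs it.
hivemall_docker() {
    "$@" docker run -p 8088:8088 -p 50070:50070 -p 19888:19888 -it "$IMAGE"
}

# Default to printing the command; pass `start` to launch the container.
case "${1:-print}" in
    start) hivemall_docker ;;
    print) hivemall_docker echo ;;
    *)     echo "usage: $0 [print|start]" >&2 ;;
esac
```

Running `./run_hivemall.sh` only shows the command, which is handy for checking the tag, and `./run_hivemall.sh start` launches the container.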

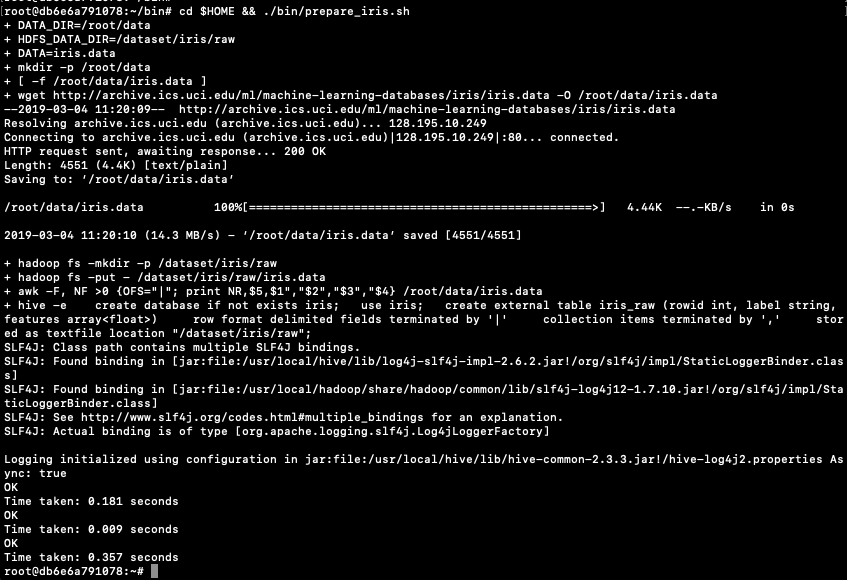

Now seed Hive with some data. The typical example uses the IRIS data set. Run the following command to do this. The script downloads the IRIS data set, creates a number of directories and then creates an external table in Hive that points to the IRIS data set.

cd $HOME && ./bin/prepare_iris.sh

Now open Hive and list the databases.

hive -S

hive> show databases;

OK

default

iris

Time taken: 0.131 seconds, Fetched: 2 row(s)

Connect to the IRIS database and list the tables within it.

hive> use iris;

hive> show tables;

iris_raw

Now query the data (150 records).

hive> select * from iris_raw;

1 Iris-setosa [5.1,3.5,1.4,0.2]

2 Iris-setosa [4.9,3.0,1.4,0.2]

3 Iris-setosa [4.7,3.2,1.3,0.2]

4 Iris-setosa [4.6,3.1,1.5,0.2]

5 Iris-setosa [5.0,3.6,1.4,0.2]

6 Iris-setosa [5.4,3.9,1.7,0.4]

7 Iris-setosa [4.6,3.4,1.4,0.3]

8 Iris-setosa [5.0,3.4,1.5,0.2]

9 Iris-setosa [4.4,2.9,1.4,0.2]

10 Iris-setosa [4.9,3.1,1.5,0.1]

11 Iris-setosa [5.4,3.7,1.5,0.2]

12 Iris-setosa [4.8,3.4,1.6,0.2]

13 Iris-setosa [4.8,3.0,1.4,0.1 ...
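If you would rather not open the interactive prompt each time, the Hive command line can also run a statement directly with the `-e` option. The sketch below wraps that in a small shell function. The name `iris_query` is my own and hypothetical, and it assumes `hive` is on the path inside the container, as it is in the session above.

```shell
# iris_query: hypothetical helper, not part of the Docker image.
# Runs one HiveQL statement against the iris database without opening the prompt.
# -S is silent mode; -e executes the quoted statement(s) and then exits.
iris_query() {
    hive -S -e "use iris; $1"
}
```

Inside the container, `iris_query 'select * from iris_raw limit 5;'` should then return just the first five rows rather than all 150.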

Find the min and max values for each feature.

hive> select

> min(features[0]), max(features[0]),

> min(features[1]), max(features[1]),

> min(features[2]), max(features[2]),

> min(features[3]), max(features[3])

> from

> iris_raw;

4.3 7.9 2.0 4.4 1.0 6.9 0.1 2.5
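To make it clearer what this query computes, here is a small local sketch that does the same min/max calculation with `awk` on the first five rows shown earlier. The function name `feature_min_max` is my own, and the sketch assumes the rows are whitespace separated exactly as they are printed above. Because it only sees five rows, its result differs from the full 150-record output.

```shell
# First five rows as printed by the select above (id, class, feature array).
rows='1 Iris-setosa [5.1,3.5,1.4,0.2]
2 Iris-setosa [4.9,3.0,1.4,0.2]
3 Iris-setosa [4.7,3.2,1.3,0.2]
4 Iris-setosa [4.6,3.1,1.5,0.2]
5 Iris-setosa [5.0,3.6,1.4,0.2]'

# feature_min_max: hypothetical helper that reads rows on stdin and prints
# "min max" for each feature position, in the same order as the Hive query.
feature_min_max() {
    awk '{
        gsub(/\[|\]/, "", $3)          # strip the brackets around the array
        n = split($3, f, ",")          # f[1..n] holds the feature values
        for (i = 1; i <= n; i++) {
            v = f[i] + 0               # force numeric comparison
            if (NR == 1 || v < min[i]) min[i] = v
            if (NR == 1 || v > max[i]) max[i] = v
        }
    }
    END {
        for (i = 1; i <= n; i++)
            printf "%s%.1f %.1f", (i > 1 ? " " : ""), min[i], max[i]
        print ""
    }'
}

printf '%s\n' "$rows" | feature_min_max
# prints: 4.6 5.1 3.0 3.6 1.3 1.5 0.2 0.2
```

Note that Hive arrays are indexed from 0 (`features[0]`), while `awk` arrays produced by `split` start at 1.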

You are now up and running with HiveMall on Docker.